Au Revoir

Consider the scenario at the end of Richard Linklater’s indie film Before Sunrise. 1 Young backpackers Jesse and Céline meet on a train traveling from Budapest to Vienna. Arriving in the evening and low on funds, they decide to spend the night wandering the city together before their onward connections the following day—Jesse will catch a flight back to the US, while Céline is returning to university in Paris. Sparks fly, of course (I mean, it’s Julie Delpy and Ethan Hawke; they are clever, charming, and … well, hot).

The next morning, they’re back at the train station, but they haven’t shared contact information. Here, you have to cast your mind back to 1995, when romance was still analog: no cell phones, no social media. They had agreed that this would be a one-night thing. But as Céline’s train is about to leave, in an anguished rush of words, the maybe-lovers realize that they badly want to see each other again after all. So they decide to meet right there, at the same place, in exactly six months.

Céline and Jesse say au revoir in Before Sunrise, Linklater 1995

Six months from this morning, or from last night? asks Céline.

From six o’clock last night, when their train arrived, says Jesse. Last night was June sixteenth, so it will be … December sixteenth, on track nine.

They say goodbye, which Céline amends to a hopeful “au revoir.”

Now, let’s take off the love goggles and think about this as physicists. Given accurate measurements, dynamical laws allow us to predict the orbits of planets and moons, gravitationally bound and hurtling through the near-vacuum of outer space, far into the future. Those laws of motion are quite simple. Even here, though, prediction has inherent limits, because when three or more bodies are interacting gravitationally, chaos rears its head. If two trajectories differ by some small amount, then, over time, this difference grows exponentially. In the solar system, luckily for us, the exponential divergence is slow, with a characteristic time of millions of years. 2

So much for celestial bodies; what about earthly ones? If we think about the predictability of Céline and Jesse as physical systems, we quickly realize we’re sunk. They are not isolated from the rest of the world by hard vacuum, but are in continual rough-and-tumble contact with it. And virtually every interaction within their bodies and with their surroundings is strongly nonlinear. The math is impossible. Not to mention that after six months of breathing, eating, and performing other bodily functions, we won’t even be talking about the same atoms. It’s not straightforward even to specify what exactly we mean by “Céline” and “Jesse.”

Worse, as described earlier, their evolutionary origins have conspired to make them unpredictable. It’s not just a matter of maintaining a façade of romantic mystery. Operating like this is essential to any living system that seeks to remain responsive to its environment. The signals exchanged between Jesse and Céline on that platform amounted to a few seconds of faint pressure waves in the air that were picked up by their eardrums, amplified by their middle ears, and converted into action potentials by hair cells in their cochleas. For that faint signal to have any macroscopic consequence relies on a cascade of physical systems that are all tuned to be on the edge of chaos, which is to say, exquisitely sensitive to perturbation—starting with the ear itself. 3

Hence, the electrical activity of a few neurons in their brains could tip the behavior of their entire bodies (and everything those bodies interact with) toward radically diverging futures. Céline and Jesse are walking, talking instances of the butterfly effect—one of the movie’s main themes. 4

The Lorenz Attractor, a paradigmatic dynamical system illustrating chaos, or the “butterfly effect.” Small differences in initial state (here, 3D coordinates) diverge completely after a short period; in this case, the result is quasi-random alternation between two orbits (which, coincidentally, resemble butterfly wings).
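The divergence described in the caption can be reproduced numerically. The following is a minimal sketch, assuming the classic Lorenz parameters (sigma = 10, rho = 28, beta = 8/3), a fourth-order Runge-Kutta integrator, and two starting points that differ by one part in a billion; the starting point, step size, and function names are illustrative choices, not anything specified in the text.

```python
import math

def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Time derivative of the Lorenz system at a 3D point."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))
    k1 = lorenz(state)
    k2 = lorenz(shifted(state, k1, dt / 2))
    k3 = lorenz(shifted(state, k2, dt / 2))
    k4 = lorenz(shifted(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))

def separation_history(eps=1e-9, dt=0.01, steps=4000):
    """Distance over time between two trajectories that start eps apart."""
    a = (1.0, 1.0, 1.0)          # assumed starting point
    b = (1.0 + eps, 1.0, 1.0)    # the same point, nudged very slightly
    history = [math.dist(a, b)]
    for _ in range(steps):
        a, b = rk4_step(a, dt), rk4_step(b, dt)
        history.append(math.dist(a, b))
    return history

history = separation_history()
print(f"initial separation: {history[0]:.1e}")
print(f"largest separation: {max(history):.1f}")
```

Under these assumptions, the gap between the two trajectories grows from a billionth of a unit to roughly the size of the attractor itself, after which the two paths are effectively unrelated.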

And yet. In the days before cell phones, we used to make plans like this all the time, and they often worked out. 5 How could that be, in light of its seeming physical impossibility?

Or, more accurately, extreme improbability. Based on physical modeling alone, we could work backward to conclude that it would be possible for the watery, ever-changing bag of molecules known as “Jesse” to once again be at the platform of track nine in exactly six months, but given exponential divergence (or, seen backward, exponential convergence), it would also be possible for him to be in Kathmandu, or anywhere else.

A hidebound determinist might argue: yes, but if we knew the exact position and velocity of every particle in the universe, then we could in theory run the (unbelievably complex) calculations forward in time, exponential divergences be damned, and just see whether the lovers meet again or not—just as we can calculate the orbits of the planets at some future time, given enough decimal places of precision and enough computing power.

Here’s the trouble: even in theory, such precision would be impossible. Exponential divergence means that over some constant amount of time, an extra decimal place of precision would be needed to keep the prediction accurate within some tolerance. Suppose that for the system we call “Céline,” that interval is one second. 6 Well before a minute has elapsed, an accurate prediction would then require knowing the starting positions of all of Céline’s elementary particles with a precision finer than the Planck length, which is about 1.6×10⁻³⁵ meters. But that can’t be. Quantum physics tells us that the Planck length is a hard limit on our ability to localize anything.
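The arithmetic behind “well before a minute” is short enough to check directly. This sketch assumes a prediction tolerance of one meter, which is an illustrative choice; the one-second interval per decimal place and the Planck length are taken from the passage above, and the function name is hypothetical.

```python
import math

PLANCK_LENGTH_M = 1.6e-35  # approximate Planck length, in meters

def seconds_until_planck_limit(initial_tolerance_m=1.0, seconds_per_digit=1.0):
    """First whole second at which the required starting precision
    becomes finer than the Planck length, if one extra decimal place
    is needed every `seconds_per_digit` seconds."""
    digits_needed = math.log10(initial_tolerance_m / PLANCK_LENGTH_M)
    return math.ceil(digits_needed * seconds_per_digit)

print(seconds_until_planck_limit())  # 35 with the assumed defaults
```

Tightening the tolerance to a millimeter only brings the limit forward by three seconds, since each factor of ten costs one second of foresight.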

A (highly speculative) visualization of “quantum foam” at the Planck scale.

As mentioned near the beginning of this book, we don’t understand how the universe works at that absurdly tiny scale. Some physicists imagine a kind of churning spacetime foam. What we know for certain, based on elegant experiments, is that randomness bubbles up into our universe at this scale—and it’s real randomness, not some deterministic mechanism involving unseen or “hidden” variables. 7

This means no deterministic physical theory exists that could predict with certainty where the lovers will be in six months. Ignoring practical impossibilities, even if we were to run a full simulation of the universe many times, with initial conditions perfectly matched to the present and with quantum randomness injected to sample possible trajectories, the positions of the particles associated with Céline and Jesse will rapidly diverge. Hence any physics-based model will be inherently myopic; the future blurs as we try to peer into it.

Fascinatingly, it blurs much faster for living systems than for nonliving ones. One could even characterize intelligent life as that which cloaks its own future in indeterminacy, pushing back against physical prediction. 8 The mechanism it uses to pull off this trick is dynamical instability, which can work as a noise amplifier. And the universe provides us with a faint but inexhaustible source of noise to amplify. At coarse scales, it looks like thermal noise, or Brownian motion; at much smaller scales, the ultimate noise source underwriting that randomness is quantum mechanical. Living things eke out their elbow room by using such noise—along with inputs from their environment—to make decisions. 9

While physical theories fail to predict the behavior of living systems, we have a much more effective predictive model: our old friend, theory of mind.

Will What You Will

Céline and Jesse are not just watery bags of molecules, but agents whose entire evolutionary raison d’être is to model themselves and each other. That modeling is both what it means for them to have free will and what lets them predict each other’s behavior six months hence, far beyond anything physical determinism could achieve in a world with quantum uncertainty.

Amplifying random numbers seems like a dubious basis for either of these claims. If you were to, for instance, attempt to foil determinism in your dating life by scrolling through Tinder and flipping a coin to decide whether to swipe left or right, that would hardly be a satisfactory basis for claiming that you are exercising free will. It would also render your actions wholly unpredictable, both to yourself and to your (perhaps bemused) prospective dates. 10

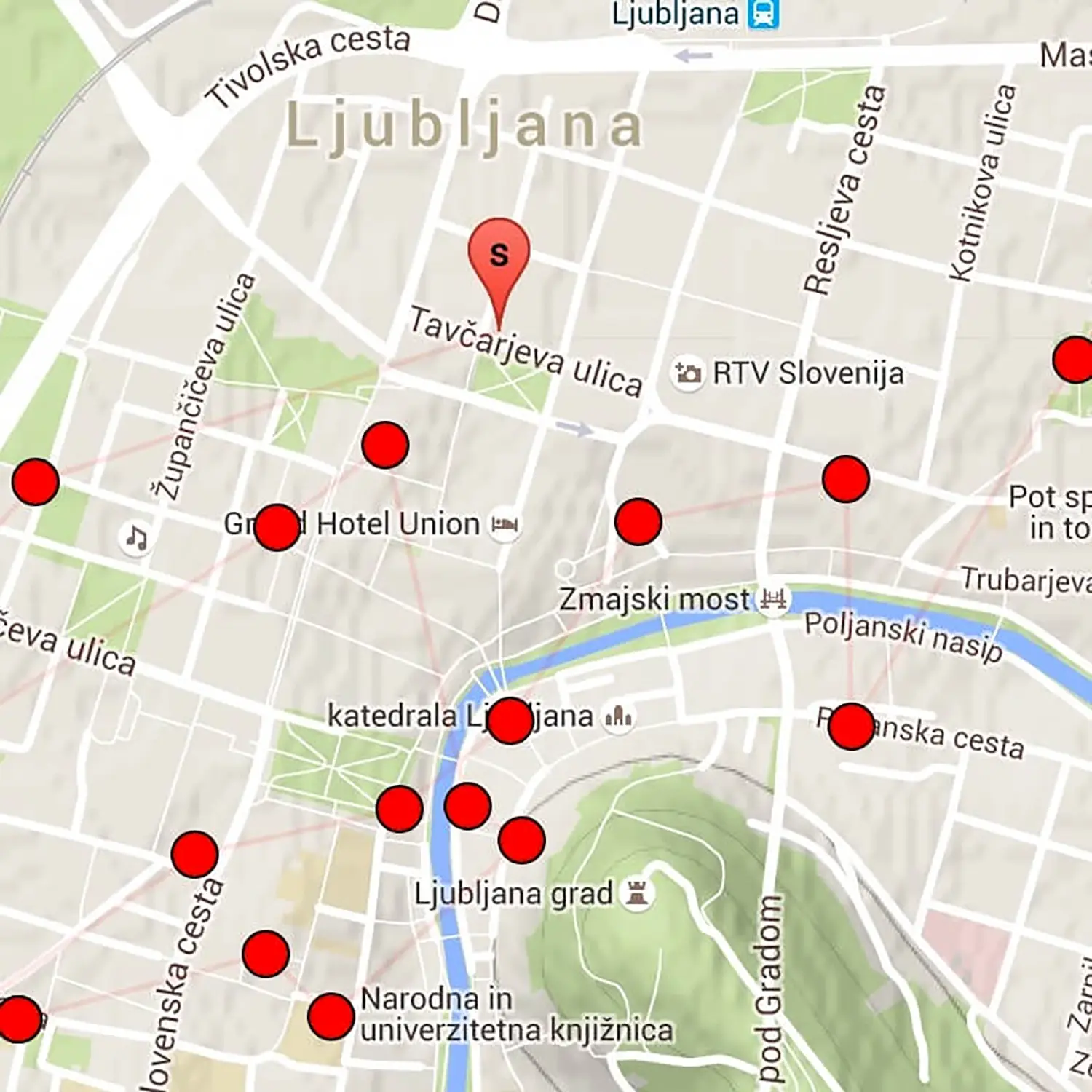

In 2015, engineer Max Hawkins began a two-year experiment in “randomized living,” allowing a pseudorandom number generator to determine his movements, meals, activities, and whom he should meet. This was his trajectory through Ljubljana.

Free will involves combining theory of mind, randomness, dynamical instability, and selection:

- Theory of mind can be used not only to model others, but to model oneself. We’ve already encountered this at second order, and beyond (“what Jane believes Mr. Rochester must think Jane thinks”). There’s no reason this same faculty can’t work reflexively: “what Jesse thinks Jesse thinks.” Or, in a less navel-gazey way, “what Jesse imagines he will see, think, and do in a few minutes, when it’s time to catch a bus to the airport,” or “what future Jesse will do in five months or so: book a plane ticket.” Planning for one’s own future is necessarily a theory-of-mind exercise—a way to cooperate with your future self. Or, to turn it around, without theory of mind it would not be possible to “time-travel” and imagine what you will experience and do under circumstances other than the present.

- To time-travel, or to imagine how any pretend scenario might play out, you must be able to draw random numbers in your head, in much the same way the physical universe must constantly draw random numbers to resolve the blurry cone of possible futures into a specific one. Daydreaming is a kind of random walk through modeled associations or predictions, but so is planning; planning is just more directed.

- Dynamical instability (the butterfly effect) in neural circuitry makes choosing a potential future possible, for the same reason that dynamical instability makes it possible for a cockroach to scurry either left or right in advance of a shoe (as described in chapter 3). For the cockroach, the butterfly effect allows behavior to be unpredictable, when driven by the faintest whisper of a random variable. Interestingly, though, dynamical instability is also necessary for behavior to be predictably influenced by an external stimulus—as when you turn left or right based on a faint whisper in your ear saying “go left” or “go right.” Free will, too, relies on this dynamical instability, for you need to be able to whisper to yourself, “imagine doing X,” then “imagine instead doing Y,” and, eventually, “do Z.” 11 In other words, being able to control your own behavior requires being able to amplify faint internal signals.

- Finally, selection, powered by theory of mind, allows one to favor certain possible futures, and cut off or stop exploring other ones, much the way AlphaGo’s value network prunes its Monte Carlo tree search. If Jesse knows that by getting to the plane on time he will feel melancholy but be safely en route home, and that missing the plane will leave him stranded, alone, anxious, and broke, then there’s no need to plan the latter scenario in detail; best to just figure out, instead, what steps he will take to get to the plane on time. When we talk colloquially about “rational” behavior, this is generally what we mean. Notice that the branching character of possible futures allows one to justify—and at times even legitimately make—decisions using logic. However, contra Leibniz, this logical aspect of decision-making, when present at all, is computationally trivial compared to the acts of imagination involved in mentally simulating worlds and people, including yourself.

So, theory of mind lets us build a network of solid tracks along which our minds can venture far into an otherwise marshy future. Dynamical instability, like a lubricant, lets us glide anywhere along those tracks, free to go either way at any fork with the gentlest of nudges. Randomness provides those nudges, letting us wander prospectively into multiple futures. And selection prunes the network to allow efficient long-range planning. You may notice that this looks a lot like a fast version of evolution taking place in imaginary worlds!
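The interplay of randomness and selection can be sketched as a toy program. Everything here is an assumption made for illustration: the three options, the hand-written appeal scores standing in for a modeled self, the noise level, and the function names. It is not a model of any real planner, and it leaves out theory of mind and dynamical instability entirely.

```python
import random

# Hypothetical appeal of each imagined future to Jesse's modeled self
# (illustrative numbers only).
APPEAL = {
    "catch the bus to the airport": 0.8,
    "linger at the station": 0.2,
    "miss the flight": -0.9,
}

def imagine(option, rng, noise=0.3):
    """Randomness: draw one imagined outcome for an option."""
    return APPEAL[option] + rng.gauss(0.0, noise)

def deliberate(rng, samples_per_round=20):
    """Selection: sample futures, then prune the least appealing option,
    repeating until a single option remains."""
    candidates = list(APPEAL)
    while len(candidates) > 1:
        estimate = {
            option: sum(imagine(option, rng) for _ in range(samples_per_round))
            / samples_per_round
            for option in candidates
        }
        candidates.remove(min(candidates, key=estimate.get))
    return candidates[0]

choice = deliberate(random.Random(0))
print(choice)
```

Pruning the worst option after each round means the clearly bad future is never explored in detail, which is the efficiency the selection step is meant to buy.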

When pruning occurs in advance and after lengthy exploration, we call it a deliberative decision. When it occurs just in time, because we’ve kept multiple paths open until the last possible moment, or we’ve changed our minds, or an unforeseen opportunity arises, we call it a snap decision. If you’ve been able to competently exercise theory of mind about your “self” to guide a decision, whether deliberative or spur-of-the-moment, you can meaningfully be said to have exercised free will.

There’s no dualism or supernatural “fountaineer” in this account. Nonetheless, calling a decision freely willed seems justified given an everyday understanding of what that means. There is a “you” who made this decision; you are not acting reflexively, but modeling a self, sampling multiple alternatives, and making a choice based on how those alternatives appeal to that modeled self. In other words, the “you” here is not just a brain, but a modeled self: a functional entity, not merely a physical one.

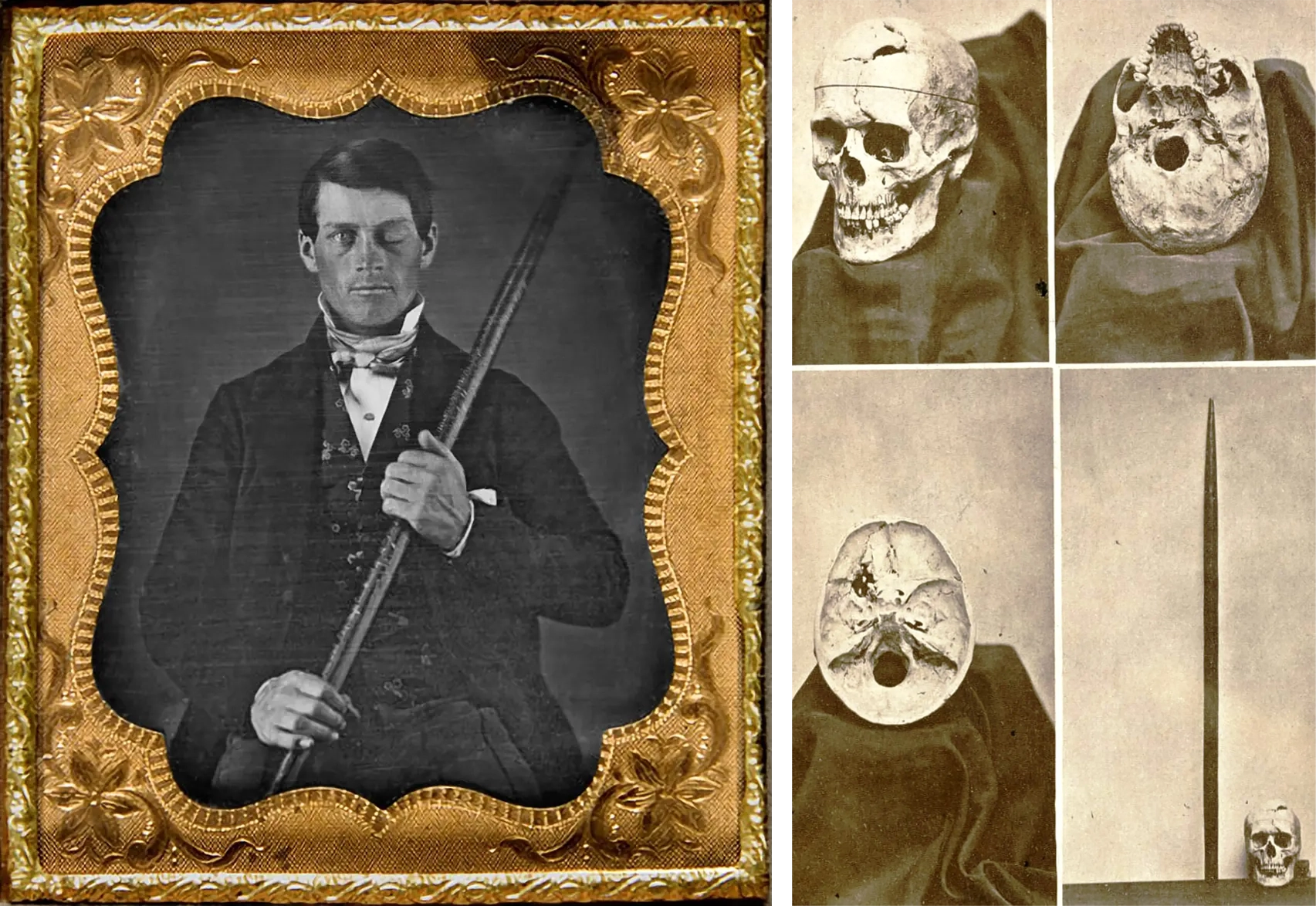

In 1848, an iron bar passed all the way through railroad worker Phineas Gage’s brain; miraculously, he survived until 1860, but was reported to have undergone profound changes in personality and judgment. Gage’s case established the physiological basis of supposedly “essential” character traits, thus complicating questions of free will and accountability. Gage’s skull (sawed to show the interior) and the iron bar were photographed in 1868.

Free will is compromised when these conditions are compromised. For instance, if your choice landscape is too constrained to offer meaningful alternatives, then you aren’t free, as anyone who has ever been in prison, in a refugee camp, or in an endless immigration queue at the airport can attest. If an act is reflexive, it’s not freely willed either. If your model of yourself is unreliable due to immaturity, incapacity, intoxication, or mental illness, then your free will is also impaired. The same holds if your predictive model of the world or of others is broken, for instance, due to delusional beliefs. These ideas are familiar from legal theory, ethics, or plain common sense.

Endless debates and ongoing reassessments (rightly) abound about what constitutes impairment, freedom, or choice. For instance, when someone without any criminal history suddenly commits a horrible crime, and a brain scan reveals a fast-growing tumor, this may be exculpatory. Before brain-imaging techniques, we wouldn’t have known. 12 However, not everybody who does something reprehensible has the equivalent of a tumor, especially when that somebody was perfectly able to think through the options and model the perspectives of others. There’s nothing spooky about choice, impairment, responsibility, or culpability. Every human society acknowledges these concepts, though they vary in their norms and in where they draw lines.

Some free will “compatibilists” acknowledge the social utility of concepts like free will, but believe that they are, at bottom, illusions, either because physics alone suffices to explain everything, or because, as Schopenhauer observed, a person can do what they will, but not will what they will. 13 I hope I have explained why physics does not explain everything, or, practically speaking, much of anything, when it comes to predicting each other—or ourselves.

As for Schopenhauer’s maxim: the first clause trumps the second. It’s true that, when you evaluate choices, you’re applying a value judgment; its particularities, from valuing honesty above loyalty to finding blue cheese gross, are part of what makes you you, and not someone else. You can’t choose to wake up as a different person one day, but you can decide that you’re going to try the blue cheese with an open mind, despite the ick. Your decision need not be due to some other, overriding value. It could be a reasoned decision, or just a case of “what the hell, I’ll give it a go.”

Once you realize how yummy blue cheese is, your future judgments will change. So, as you author (and revise) the story of your own life, you can change who you are, thus willing what you will will. 14 We even understand something now about the mechanics of that process. It’s called “in-context learning,” and will be discussed in chapter 8.

The account I’ve just given of free will 15 includes the self-referential features of what we generally call “consciousness.” Immediate feelings or experiences, such as pain, pleasure, fear, or, for animals that can see the color red, “redness,” don’t require these fancy features. Chapter 2 describes how basic feelings arise as a matter of course in any self-predicting modeler that has evolved to persist through time. Most of us can tell red from green because it’s a useful spectral distinction for spotting ripe berries or blood, which are helpful things to know about. That’s no mystery, and lots of other animals, including insects, can experience “redness” too, for similar reasons. Many can also experience “ultravioletness” and other sensations unfamiliar to us, because for them, those signals are behaviorally relevant. 16

A more sophisticated mind can support more complex internal states, including simulated worlds, counterfactuals, and prospective futures. Such minds evolve via social-intelligence explosions, which implies that much of the complexity in those simulations concerns other minds—what they know, what they experience, how they will act or react.

A “self” is inherent to any social modeling if carried out to second order, because those others you’re modeling are … modeling you back. If they’re conspecifics, then they are also very similar to you; for instance, as a chimp, it requires only a little imagination to see the world through the eyes of another chimp. When your social brain has evolved to this point, it’s no great leap to stare into the mirror, physical or mental, and consider yourself as a being, both in the future (which is essential for long-range planning) and in the present. 17 The infinite regress of your “Mind’s I” in that mirror gives you the vertiginous experience of self Douglas Hofstadter calls a “strange loop.” 18

Chimps (and, to our knowledge, only a few other large-brained species) can check themselves out in mirrors, progressing beyond social recognition of a conspecific to understand they are looking at themselves.

What It Is Like to Be

Consciousness is not that complicated! So why do we struggle so much with it—what makes it a “hard problem” 19 for philosophers?

Since “hardness” is subjective, a cross-cultural perspective is helpful. 20 As it turns out, philosophers in the modern European tradition are far from the norm; they are, to use evolutionary anthropologist Joseph Henrich’s term, WEIRD: Western, Educated, Industrialized, Rich, Democratic. 21 Let’s step outside this bubble, whose inhabitants are, both historically and psychologically, a minority.

Human cultures are near-unanimous in their belief in souls, though the details of what constitutes a soul and where it resides in the body (or at times outside it) vary. 22 Belief in the ensoulment of animals is commonplace, as is a belief in souls, great or small, residing elsewhere in nature. Chapter 1 offered a bottom-up account of animism, emphasizing the blurriness of the boundary between the living and the nonliving from the standpoint of dynamic stability and functionalism.

Let’s now take the human perspective, or, more generically, the perspective of a social animal that has undergone an intelligence explosion and is endowed with a highly developed theory of mind. For such a being, souls are just about the most behaviorally relevant things one can imagine—more so than numbers, clouds, or ripe berries. For social animals, other minds are the umwelt. So of course we distinguish between “somebody home” and “nobody home.” Many human languages mark that distinction grammatically, as English does with “who” versus “what.”

Even so, cultures are far from being in agreement about what falls into each category. In Potawatomi, almost everything is a “who,” until or unless it is harvested for human use. 23 Under Roman law, on the other hand, human slaves were instrumenta, like tools or equipment. 24 In both cases, the distinction is made on the basis of whether theory of mind is being exercised. A Potawatomi considers the perspective of a “bear person,” a “tree person,” and so on, until the moment of harvest—which is traditionally accompanied by a prayer of thanks—after which that perspective ceases to be, in the harvester’s mind. For Romans, the very definition of slavery lay in the slave’s loss of agency, such that for the master, the slave’s point of view didn’t need to be taken into account.

These represent cultural ideals, of course. In reality, we humans, regardless of cultural background, are probably more alike than we let on. Some Romans undoubtedly had reciprocal relationships with their slaves, and some Potawatomi herb-gatherers are probably guilty of skipping their ceremonial thanks if nobody’s watching. Conversely, we may all profess not to believe there’s “anybody home” in a kid’s stuffed animal, but as Tracy Gleason, a professor of psychology, has written about her much younger sister’s threadbare stuffed rabbit, that’s not how many of us behave: “I know his brain is polyester fill and his feelings are not his but my own, and yet his […] eyes see through me and call me on my hypocrisy. I could no more walk past Murray as he lies in an uncomfortable position than I could ignore my sister’s pleas to play with her or the cat’s meows for food.” 25

In Cast Away, a lonely Tom Hanks readily “ensouls” a volleyball with a bloody handprint; during his eventual escape from the desert island, Hanks’s character nearly dies attempting to “save” Wilson when “he” is swept overboard.

WEIRD beliefs about what counts as a “who” are particular. Then again, any such beliefs are particular. What seems to distinguish WEIRD beliefs from those of traditional societies is their single-mindedness—literally. Philosophical debates about consciousness in the West focus heavily on the inner life of an individual: per Descartes, “Cogito, ergo sum,” meaning “I think, therefore I am.” That packs a lot of “I” for such a short sentence.

Centuries of introspective discourse have carried on about qualia and “phenomenal consciousness” while ignoring the fundamentally social and relational nature of theory of mind, and the way consciousness arises precisely when we model ourselves the way we model others. For example, in her book Conscious, Annaka Harris writes,

Surprisingly, our consciousness […] doesn’t appear to be involved in much of our own behavior, apart from bearing witness to it […]. [F]ew (if any) of our behaviors need consciousness in order to be carried out. […] However, in my own musings, I have stumbled into what might be an interesting exception: consciousness seems to play a role in behavior when we think and talk about the mystery of consciousness. […] How could an unconscious robot (or a philosophical zombie) contemplate conscious experience itself without having it in the first place? 26

Consciousness certainly does come in handy when thinking about consciousness. But unless we’re chronic navel-gazers, we don’t spend much time thinking about our own experiences and thoughts in the moment. When we are on our own, we are often on autopilot, and we tend to just act. Life would be exhausting otherwise. But in a social setting, we must constantly think about our relationships, how we’re coming across, and the experiences of others; this modeling deeply informs how we act.

And it is sometimes useful to be able to actively model, and thus manipulate, your own attention. Consider meditation, or simply allowing yourself to fall asleep when you’re keyed up after a busy day—acknowledging, then dismissing intrusive thoughts; scanning your body; relaxing your muscles from head to feet. Your attention is like a spotlight, and controlling it requires you to pay attention to your attention.

According to neuroscientist Michael Graziano’s “Attention Schema Theory” (AST), consciousness is an illusion arising from this modeling of one’s own attention. 27 Graziano invokes ventriloquism to illustrate the point. When a skilled ventriloquist throws their voice into a puppet, manipulating its gaze and moving its jaw along with the words, we experience a powerful impression of the puppet as a person. In modeling that puppet’s attention, we have conjured into existence a modeler, or soul: a being whose attention that is. If the puppet turns toward us and begins to speak, we enter into that recursive social loop, modeling it attending to us attending to it, and so on. The punchline is that we are all puppets; we just happen to pull our own strings.

The opening scene of Being John Malkovich illustrates the surprising power of a puppet to evoke personhood, attention, and affect using just a few degrees of freedom.

I agree with Graziano’s account, though I would quibble with his use of the term “illusion” to describe consciousness or personhood. If chairs aren’t illusory, then neither are people. Attention is a real enough sort of computation, useful for predictive modeling. And in a world of sophisticated predictive modelers, including oneself, attention is worth modeling in its own right. It’s reasonable, then, to define a being, a soul, or a “who” as that which can pay attention—and can model that attention.

Weird

Taking seriously the perspective of intelligence as relational implies something far more mind-bending than merely acknowledging the social origins of consciousness. It implies that relationships themselves are the building blocks of reality. There is no God’s-eye “view from nowhere” in a relationship graph. In fact there isn’t even a God’s-eye view of what the nodes in the graph are.

Let’s first take Tracy Gleason’s perspective. Murray the rabbit is a node in her social graph, albeit one that she is of two minds about (a phrase that, as we’ll see, might have an almost literal interpretation).

But does Murray have a perspective? We (mostly) believe not, and can back the “nobody home” belief with all sorts of scientific evidence, like the fact that his little head is stuffed with polyester. Insisting that computation is happening in there, that he has muscles and a nervous system, and that he is about to jump up and dash into the closet would all be poor predictions, both anatomically and behaviorally.

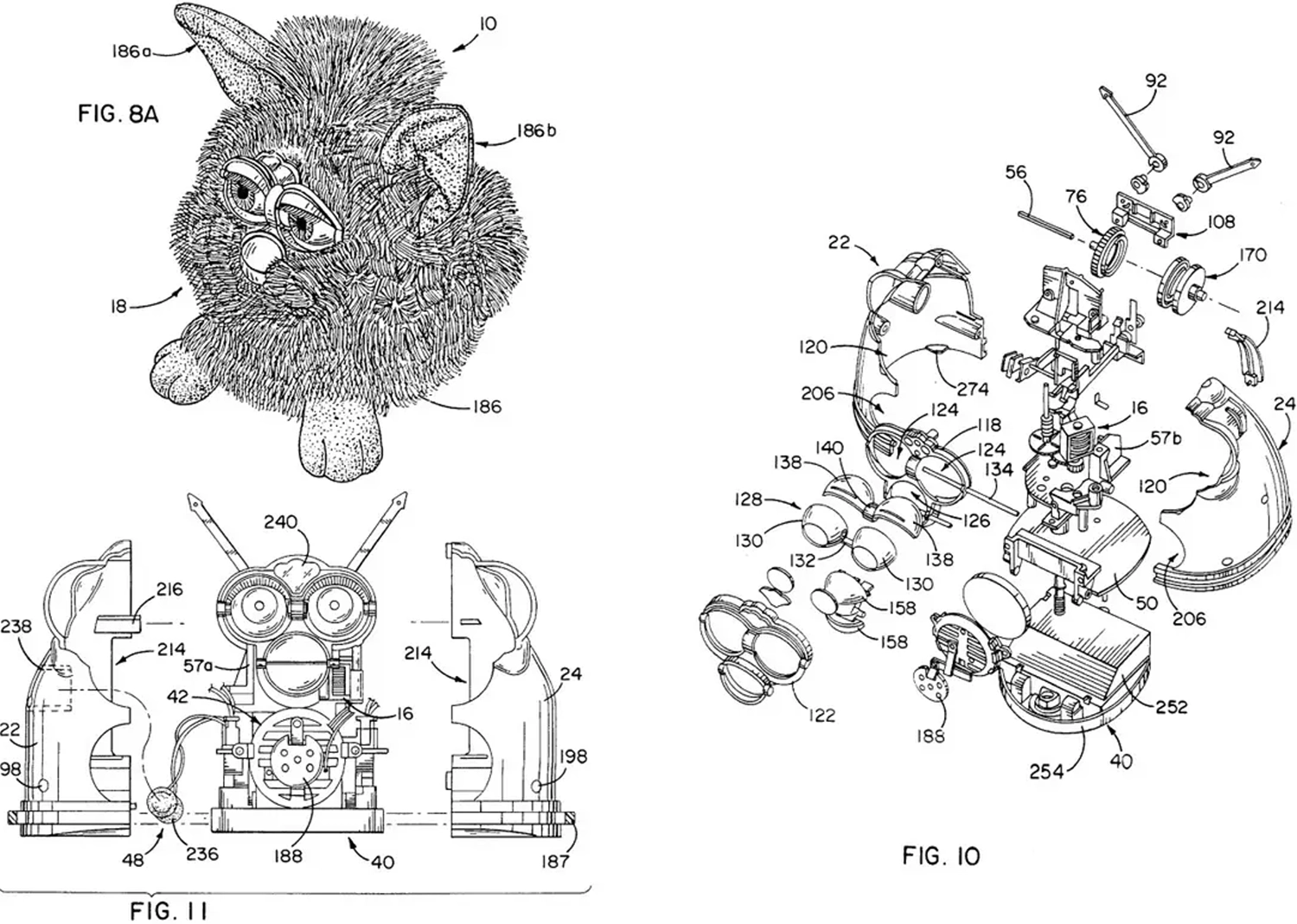

For a slightly less clear-cut case, consider Furby, a furry robotic toy released in 1998. When placed in an “uncomfortable” upside-down position, the robot squirms and makes unhappy noises. These simple electronic behaviors make it powerfully aversive to hold Furby upside-down for too long, even if, like Gleason, we can’t rationalize our own behavior. 28

From the 1998 Furby patent

Or take the case of Graziano’s ventriloquist. It’s true that a puppet, no matter how convincingly imbued with personality, has a head as empty as Murray’s. Its ears can’t hear and its eyes are painted on. However, the puppeteer can see, hear, and think just fine. Moreover, she can use her theory of mind and acting skills to immerse herself in the puppet’s perspective, creating a character with its own relationships—whether to the audience, to other puppets, or to the puppeteer. Are puppets really people, though? If they were, wouldn’t we be more concerned when Punch and Judy pummel each other?

More broadly, when is accounting for another’s perspective possible and warranted, and why? How should these perspectives be imagined and weighted against one another? We have arguments all the time about the really difficult judgments: how do we weigh the interests and perspectives of chimpanzees and bonobos, or of octopuses, or even of octopus arms? What about the potential perspectives and interests of embryos and fetuses at various stages of development? Or of people in comas with profound brain damage? In these cases and many more, reasonable people disagree, and while advances in neuroscience may offer additional insight, no magical scientific measurement will come to our rescue and tell us what is “right.”

Still, it’s not hopeless to imagine the inner life of another being. On the contrary—we’re good at this. It’s what we have evolved to do. However, all we have are our models and our ability to mentalize—to see through the eyes of (some) others, some of the time, with greater or lesser predictive accuracy.

Recall how categorical modeling works for an inanimate concrete category, like “bed.” There is nothing mysterious or ineffable about the existence of beds in the world; you probably sleep on one, most nights, and don’t wonder about its ontological status. You can easily recognize one on sight, or, if you’re blind, by touch.

But this doesn’t mean that every person has the same definition of “bed.” In the European Middle Ages, rushes strewn on the floor served as a “bed” for most people. In Japan, tatami mats may be beds. A futon can be a sofa or a bed, and it may “be” one or the other simply by virtue of whether you are lying down on it. In other words, our models of “bed,” which work something like multilayer perceptrons (as described in chapter 3), vary by person and by context.

Although this is easy to understand, it’s not a triviality: per the interlude at the end of chapter 2, it already deals a death blow to the Leibnizian ideal of relying on impartial logic to make universal truth statements about beds or pretty much any other object. We have already left the notion of “objective” reality behind.

Here’s the weightier implication: it’s just as impossible to assert objectivity about animate categories, like “person” or “conscious.” This idea has been far harder for me to accept.

The difficulty is partly of WEIRD origin. One of the most famous documents laying out the tenets of the WEIRD worldview says, right at the top, “We hold these truths to be self-evident, that all men are created equal, that they are endowed by their Creator with certain unalienable Rights, that among these are Life, Liberty and the pursuit of Happiness.” 29 These were great ideas, and they represented important social and political innovations in the eighteenth century.

However, the use of the word “men” suggests that these truths are not so timelessly and universally self-evident. We would not use the same word today. And it was no grammatical quirk. The authors of the Declaration of Independence (all men) didn’t believe that women, let alone non-European people, were “created equal” to themselves or endowed with the same “unalienable Rights.”


John Trumbull’s 1819 painting Declaration of Independence, depicting the five-man drafting committee presenting their work to the Congress

Clearly, then, the legal understanding of personhood changed significantly between 1776 and 1948, when the Universal Declaration of Human Rights extended “inherent dignity and […] equal and inalienable rights” to “all members of the human family […] without distinction of any kind, such as race, colour, sex, language, religion, political or other opinion, national or social origin, property, birth or other status.” 30

Eleanor Roosevelt holding the English language version of the Universal Declaration of Human Rights, November 1949

Excellent progress. However, that these are statements of evolving political intent rather than of self-evident, universally agreed-upon fact is once again obvious, given that the Declaration was partly a response to the Holocaust, which had been brought to an end by the Allied victory in Europe just three years earlier.

Universality and “self-evidentness” are WEIRD mind tricks. 31 They assert that something is so unquestionably the case that there is no author, no point of view—other than, perhaps, that of God the Creator. In reality, of course, there are specific authors, and they are expressing their point of view and their political will. But unlike the proclamations of kings, the WEIRD approach adds a layer of indirection and anonymity, an appeal to “universal law.” It asserts a kind of Newtonian physics of personhood, stipulating “who counts as a who” in terms that do their best to deny any role for subjectivity.

This is entirely in the spirit of the Enlightenment. It goes along with the standardization of weights and measures, the establishment of reliable “railroad time,” the development of latitude and longitude as a global coordinate system, and, most of all, the formulation of physical laws with universal applicability, from celestial motion to the pendulums of clocks. These, too, were important advances, both intellectually and practically. They made it possible for people, goods, and information to flow worldwide, underpinning much of the wealth, knowledge, and global culture we now take for granted.

However, the theory of relativity tells us that this global frame of reference is only an approximation. What the passenger on a train regards as one meter is a tiny bit less for someone watching from the station. When you stand on the ground, a second passes a tiny bit more slowly for your feet than for your head.

Similarly, we know that, no matter how vigorously we assert the timeless universality of human rights and dignities, precepts of moral conduct or ethics, these are neither timeless nor universal; we have had to continually argue about them, fight for them, and change our conceptions of them over time. Historical documents, from religious texts to political manifestos, reveal their cultural contingency, variability, and ongoing evolution. It would be nonsensical to assert that we have, at just this moment in history, reached the end of the line, and have achieved either the final word or a universal consensus. And this includes not only questions of how to behave toward a “who,” but who or what counts as a “who,” and indeed whether that is best thought of as a binary category.

Entanglement

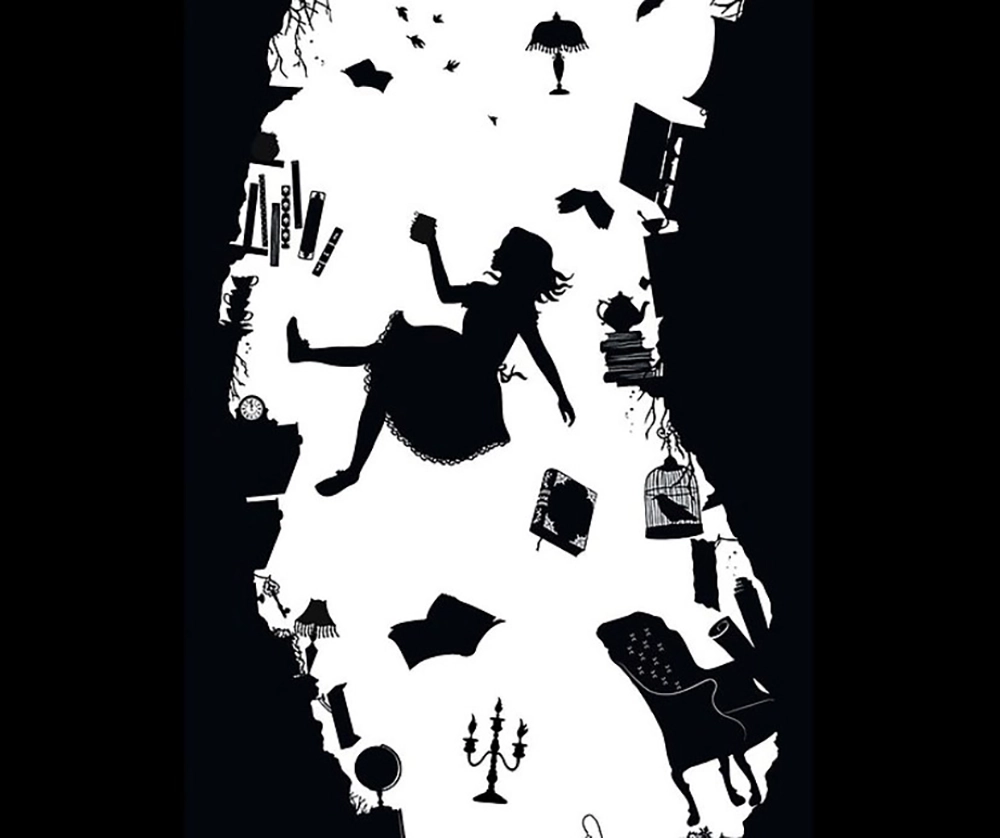

Like Alice in Wonderland, we are about to go down the rabbit hole. Our rabbit hole is quantum mechanical weirdness, and what it tells us about the nature of reality and our models of it.

Alice going down the rabbit hole

To be clear, this is not in any way an appeal to the pseudoscience of “quantum consciousness,” or the idea that the brain is a quantum computer—a position with no mainstream scientific support. 32 As far as we know, no cognitive functions rely on quantum phenomena. Nonetheless, we have seen why quantum mechanics matters in discussing the mind, despite its remoteness from our everyday experience: it establishes sharp limits on the predictability of living organisms (which is relevant to determinism debates), and it provides an inexhaustible source of randomness for minds to “harvest” as they exercise free will.

We’ll now delve deeper into post-classical physics, because it can also help us better grasp what it means to adopt the kind of relationship-centric perspective required to understand mutual modeling and conscious experience. Keep in mind that this is more than a metaphor. Modern physics offers our best guide to how the universe works at a fundamental level; it is our Newtonian or folk physics that we should handle with caution, even—or especially—when we rely on it as a metaphor. So … down we go.

One of the least intuitive ideas in quantum mechanics is that of superposition, which holds that a physical system can be in multiple states at once. This is certainly true of single particles. Putting a very faint photon source and photographic film on opposite sides of a barrier with two slits, for example, produces dots on the film as one would expect, yet their distribution reveals an interference pattern with multiple peaks and troughs, as if each photon had passed, wave-like, through both slits at once before turning back into a particle to expose the film. If one of the slits is closed, the interference pattern disappears. Likewise, the interference pattern disappears if the experimenter adds any measuring apparatus to determine which slit the photon passes through! The double-slit experiment has also been done with electrons, and even with large molecules. 33

The double-slit experiment, performed under a microscope by Jeroen Vleggaar (Huygens Optics); the optical stage is arranged so that the screen can be moved continuously. Interference patterns with many peaks and troughs are revealed as the screen backs away from the slits through which light has passed.
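For readers who like to see the arithmetic, here is a minimal sketch of why the fringes vanish once the path is known. It is an idealization, not a model of any particular experiment: the wavelength, slit separation, and screen distance are assumed values, the function names are invented for illustration, and the single-slit envelope is ignored. With no which-path information, the two amplitudes are added and then squared; with which-path information, each is squared and then the results are added.

```python
import cmath
import math

# Assumed, illustrative values (not taken from any experiment in the text).
WAVELENGTH = 500e-9        # meters
SLIT_SEPARATION = 50e-6    # meters
SCREEN_DISTANCE = 1.0      # meters

# Distance between neighboring bright fringes in the small-angle approximation.
FRINGE_SPACING = WAVELENGTH * SCREEN_DISTANCE / SLIT_SEPARATION


def slit_amplitudes(x):
    """Unit-magnitude complex amplitudes arriving at screen position x from each slit."""
    # Phase difference between the two paths, small-angle approximation.
    phase = 2 * math.pi * SLIT_SEPARATION * x / (WAVELENGTH * SCREEN_DISTANCE)
    return cmath.exp(0.5j * phase), cmath.exp(-0.5j * phase)


def intensity_unmeasured(x):
    """No which-path information: add the amplitudes, then square."""
    a1, a2 = slit_amplitudes(x)
    return abs(a1 + a2) ** 2


def intensity_which_path(x):
    """Path is known: square each amplitude, then add. The cross term is gone."""
    a1, a2 = slit_amplitudes(x)
    return abs(a1) ** 2 + abs(a2) ** 2


if __name__ == "__main__":
    for k in range(5):
        x = k * FRINGE_SPACING / 4
        print(f"x = {x * 1000:5.2f} mm   "
              f"unmeasured = {intensity_unmeasured(x):.3f}   "
              f"which-path = {intensity_which_path(x):.3f}")
```

The unmeasured intensity swings between peaks and troughs as the screen position changes, while the which-path intensity stays flat. The only difference between the two functions is the order of adding and squaring.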

In 1935, Erwin Schrödinger devised a famous thought experiment to highlight the bizarreness of this phenomenon. It goes like this. A cat is in a box, along with an apparatus. The apparatus contains a Geiger counter that will register the nuclear decay of a single atom in a radioactive sample. We know that nuclear decay is a truly random quantum event. The source and detector could be calibrated so that such a decay event has a fifty-percent chance of occurring within an hour. If the event occurs, a mechanism will open a draught of sleeping potion, and the cat will fall asleep. Otherwise, she will remain awake. (In keeping with modern lab ethics and my fondness for cats, I’m adopting physicist Carlo Rovelli’s modification to Schrödinger’s original protocol, in which the “sleeping potion” was needlessly lethal. 34 )

We know that, when we open the box after an hour, we will see either a sleeping cat or an awake cat. But was the cat already asleep or awake just before we opened the box? Or was she … both?

Originally, Schrödinger’s cat was meant to critique the so-called “Copenhagen interpretation” of quantum mechanics advanced by Werner Heisenberg and colleagues. In this interpretation, a physical system is in a superposition of all possible states until it is measured, at which point its “wave function” collapses into an unambiguous state. Everything behaves, in other words, like a fuzzy probability wave while your back is turned, but becomes a particle the moment you peek, like the exposed dots on the film in the double-slit experiment.

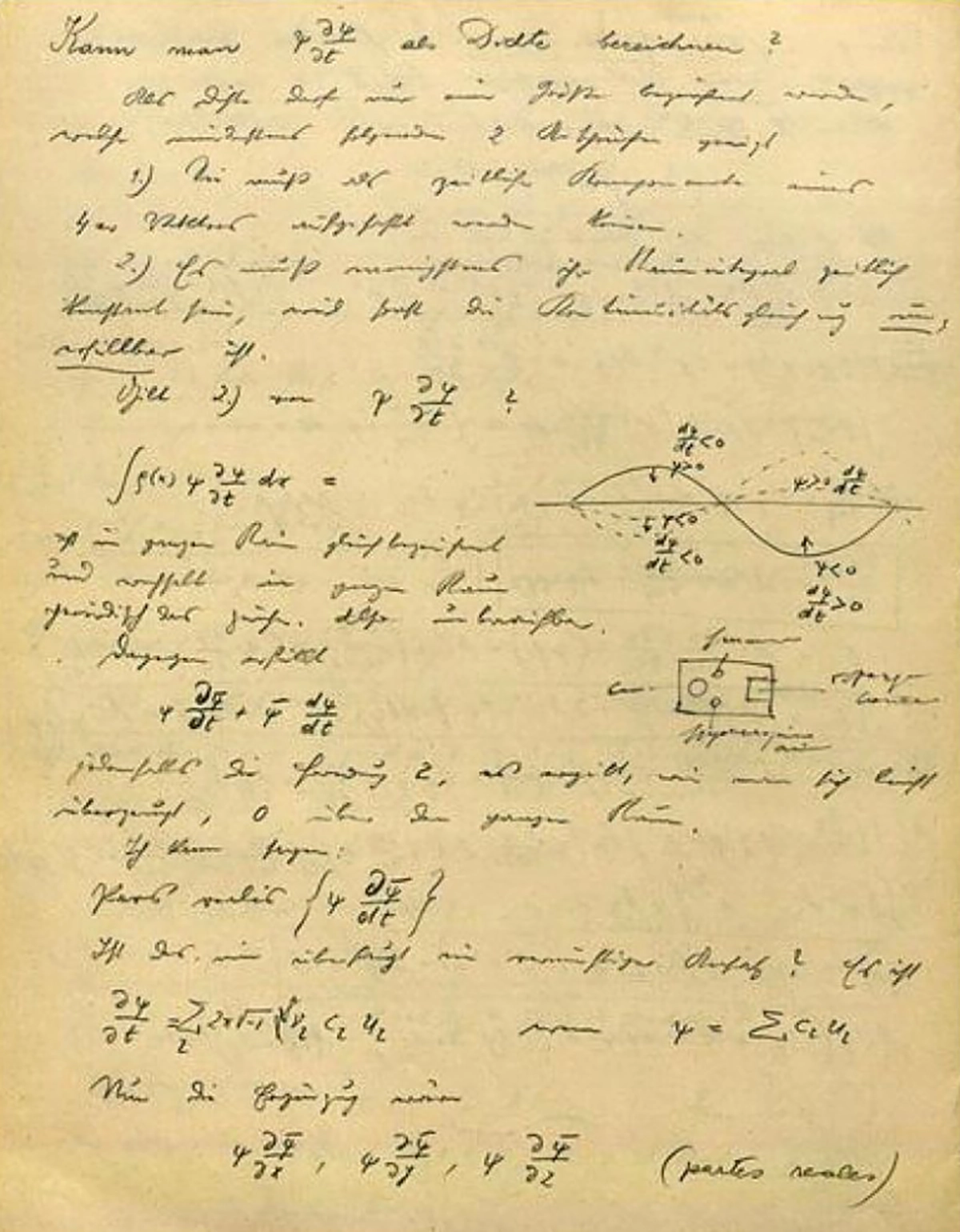

A page from a Schrödinger notebook in which he grapples with the concept of a wave function

While the Copenhagen interpretation is consistent with a century’s worth of experimental findings, it’s deeply troubling, because it appears to privilege the observer or experimenter. After all, we, too, are just quantum wave functions; why should our observation of a particle in the lab collapse its wave function, while it can get up to all sorts of spooky business—including “quantum entanglement” with any number of other wave functions—when we’re not looking? It all seems a bit Twilight Zone. And lest we believe it only applies at scales so invisibly small as to be irrelevant, researchers have succeeded in putting millimeter-sized objects in the lab into quantum superpositions, if they are suitably isolated from their surroundings—which in practice has meant cooling them to extremely low temperatures. 35

The practicalities of making a box well enough isolated for a whole cat inside to remain in superposition (and not frozen solid with liquid helium) aren’t straightforward; it’s a lot harder than preventing the sound of a meow from getting out. Still, in theory, it’s possible. If we succeeded in isolating the box thoroughly enough, then after an hour, the cat would be both sleeping and not—until we opened the box. At that point, we would collapse her wave function, and she would be just one or the other: asleep or awake.

As with the ambiguities about objects and subjects described earlier, what makes Schrödinger’s thought experiment so disturbing is that what’s inside the box is not just a loop of superconducting wire, as in experiments that have actually been done, but a cat. What is it like to be simultaneously awake and asleep? Hold on—isn’t the cat an observer too, inadvertently running a lab experiment of her own, inside the box? If so, wouldn’t she collapse the apparatus’s wave function by either observing or not observing the unstoppering of the potion? But then, why would that not be true within the apparatus itself—isn’t every part of it “observing” whatever it’s in contact with? Does an “observer” have to be conscious for collapse to occur? 36

There is no universal agreement on any of these questions within the physics community; that’s why we’re still talking about Schrödinger’s cat nearly a century later. Quantum mechanics continues to be subject to myriad interpretations, most of which add some further postulate or assumption to the known equations to force them to make more intuitive sense. Partisans of each interpretation make different claims about the cat: perhaps she’s too big to be in superposition, but a flea on the cat could be; or perhaps the cat exists in an infinite number of parallel universes, awake in some and asleep in others.

A particularly straightforward interpretation championed by Carlo Rovelli (the physicist who likes cats) is “relational quantum mechanics” or RQM. It adds nothing to the equations, but instead asks us to take them at face value—with a reminder that any observations made during an experiment are always made from a point of view. Just as in the theory of relativity, there is no privileged “view from nowhere.” According to Einstein’s relativity, lengths and durations depend on one’s perspective. According to Rovelli’s RQM, events themselves depend on one’s perspective.

From her own point of view, the cat can’t be simultaneously asleep and awake, any more than we, outside the box, can be. Nobody ever gets to observe something in superposition, including oneself. However, from an experimenter’s perspective outside the box—if all interactions between inside and outside are prevented—the cat is indeed in a superposition of asleep and awake … right up until the box is opened.

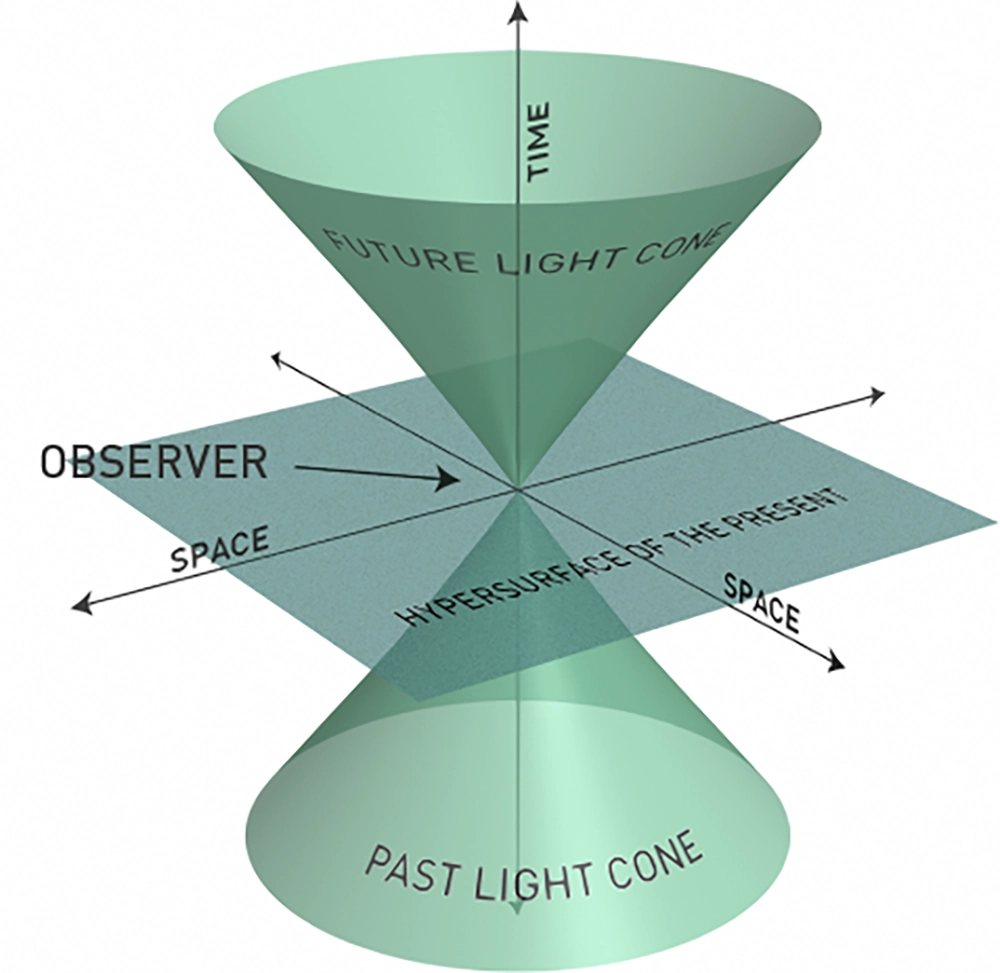

In this view, time itself doesn’t work the way we imagine. The theory of relativity already confounds certain of our intuitions about time, such as a global partitioning of events into those that took place in the past, those that are taking place now, and those that will (or may) take place in the future. According to Einstein, the notions of past and future can only be defined locally, based on the “light cone” of a point in spacetime, which represents the region that could have affected it in the past and that it might influence in the future—really two cones, their apexes converging on an infinitesimal present. Everything outside your light cone is inaccessible, causally disconnected from you, and hence not part of your past, present, or future.

The light cone
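The light-cone rule is simple enough to state as a few lines of code. What follows is a minimal sketch for flat spacetime only, with a hypothetical function name of my choosing: an event belongs to your past or future only if light could cover the distance between you and it in the time available. Otherwise it is "elsewhere."

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def light_cone_relation(dt_seconds, distance_meters):
    """Classify an event relative to an observer at the origin, here and now.

    dt_seconds is the event's time offset (positive means later, negative
    means earlier); distance_meters is its spatial distance from the observer.
    Flat spacetime is assumed, so gravity is ignored.
    """
    reach = SPEED_OF_LIGHT * abs(dt_seconds)  # how far light gets in that time
    if distance_meters > reach:
        return "elsewhere"      # outside both cones: causally disconnected
    if dt_seconds > 0:
        return "future"         # inside or on the future cone
    if dt_seconds < 0:
        return "past"           # inside or on the past cone
    return "here and now"       # the apex itself


if __name__ == "__main__":
    sun_distance = 1.496e11  # meters, roughly 499 light-seconds away
    for dt in (60.0, 600.0, -600.0):
        print(f"event on the Sun at t = {dt:+.0f} s:",
              light_cone_relation(dt, sun_distance))
```

An event on the Sun one minute from now lies outside your light cone, since sunlight needs a little over eight minutes to make the trip. The same location ten minutes from now is in your future, and ten minutes ago it is in your past.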

In RQM, pasts and futures are even more local and relative, as they are contingent on the particular network of prior interactions leading up to an event. Thus RQM offers a new perspective on an especially bizarre variation of the two-slit experiment proposed by physicist John Wheeler in 1978, 37 and confirmed experimentally in 2007: the “delayed-choice” experiment. 38 In this version, an experimenter decides whether to measure which slit a particle went through after it has already passed through the slits; yet the seemingly paradoxical result remains: an interference pattern is observed only when no measurement takes place. It’s as if the information about whether the experimenter will “ask” which slit the particle went through somehow travels backward in time to determine retroactively whether it behaved like a particle or a wave!

Yet all of this follows because the equations of quantum mechanics describe interactions, not “things in themselves.” What we call a measurement is just an interaction between A and B. We could say A is the experimenter, and B the object being studied, but either way works. For A, B collapses; for B, A collapses. But for C, which has not yet interacted with A or B, A and B are entangled in superposition. 39

Subjectivity, then, exists even at the most fundamental level of description. You can only know about something by interacting with it, and any uncertainty inherent in that interaction is real—for you. Between one interaction and the next, that uncertainty grows. And the same applies reciprocally. Reality is continually being locally and mutually constructed, interaction by interaction. We can’t ask what “real” reality looks like, outside that network of interactions. As Rovelli puts it: “If we imagine the totality of things, we are imagining being outside the universe, looking at it from out there. But there is no ‘outside’ […]. The externally observed world does not exist; what exists are only internal perspectives on the world which are partial and reflect one another. The world is this reciprocal reflection of perspectives.” 40

The same can be said of our psychological universe, because, like RQM, theory of mind is purely relational. It involves one mind modeling another—and itself.

To reiterate: none of the above implies that theory of mind, consciousness, or any mental process relies on quantum phenomena per se. However, physics is relevant to our understanding of concepts like consciousness, agency, free will, and souls because these concepts (and the difficulties we encounter in grappling with them) have always been contingent on our folk understanding of the universe’s underlying rules.

Zombie-Free

In a dualist universe, physical laws govern ordinary matter, but spirit and the self exist on a separate plane, presumably governed by other laws. Often, these other laws are deemed divine or unknowable. Even so, Descartes struggled to answer the hard questions raised by dualism: How could animals not have spirits, too? How does one’s spirit know what the organs of the body perceive? (And why doesn’t the spirit have access to other bodies?) Even trickier, how does the spirit control our body, and where is the physical locus of this control?

The conceptual and neuroanatomical absurdities encountered by pursuing this line of thinking led the iconoclastic Enlightenment physician Julien Offray de La Mettrie (1709–1751) to write L’Homme Machine (“man machine”) in 1747, extending Descartes’s bête-machine (“animal-machine”) concept to humans and suggesting that resorting to supernatural “other laws” to account for human cognition or behavior was unnecessary. 41

Title page of L’Homme Machine, 1748

At the time, La Mettrie’s thesis was radical enough to compel him to publish his tract anonymously, in a miniature format that could be secreted in an inner coat pocket. The authorities burned many copies, and La Mettrie was forced to flee one country after another, especially after he was outed.

Eventually, though, his perspective became mainstream. Today, thinkers like Robert Sapolsky and Sam Harris articulate the same view, and both have written bestsellers arguing the point.

In a fully deterministic Newtonian universe, everything is fated, time is reversible, and there is no difference between cause and correlation. Blurriness about the future or the unknown, or the existence of counterfactuals (how things could be, as opposed to how they are), are illusory: nothing could be other than it is, was, and will be. Without counterfactuals, blame or responsibility seem difficult to justify, as Sapolsky points out. Consciousness and free will are at best epiphenomena, meaning that they lack causal power—if they can be said to exist at all.

This leads us to wonder about philosophical zombies: the same events and behaviors without those epiphenomena. People without souls. Why not? How would we even know, and what would “knowing” even mean?

The quantum world—the one we actually inhabit—has very different rules, and understanding these rules, as physically counterintuitive as they may be, helps to resolve many of the apparent metaphysical—even spiritual—conundrums that arise in a Newtonian universe:

- The future is not predetermined after all, especially for living systems, which are finely tuned to amplify noise through dynamical instability.

- Because life isn’t deterministic, counterfactuality—the idea that things could or could have been otherwise—is not just an illusion.

- Choice—though constrained by the blurry “future cone” of the physically possible—is likewise real, and underwrites the free will of beings able to model and select among alternative futures.

- Our mental models of causality have real meaning and power, especially when it comes to predicting how we and other living beings will behave—yet we are also free to violate the expectations of others (if we choose to, and have a good enough theory of mind to pull it off).

- We can never fully know the inner experience of others, but only model it based on interactions.

- That said, subjective experience is also real; or, to turn it around, reality is defined subjectively by networks of interactions.

Given all this, the idea of philosophical zombies seems incoherent. If you interact with someone over an extended period such that your theory of mind models them in detail, that model includes their model of you, and their model of your model of them, and so on. If they don’t in fact have a working theory of mind of their own, then your theory of mind will be violated in the interaction—and it will become apparent to you that there’s “nobody home.” This really is just the Turing Test.

One can, of course, be fooled by a social interaction; actors, for instance, can take on a role in which they pretend to feel things they don’t, then drop the act, resulting in a (possibly unpleasant) surprise. But let’s suppose that never happens. Imagine an interaction between A and B, in which B is different on the inside than A imagines; B might, for instance, actually be B’, an actor who plays B and never breaks role. Perhaps B’ is really a cold fish, and has never experienced heartbreak, but can pretend to, pitch-perfectly. We could say, then, that A is “real,” B is “fake,” and B’ is the “real” B.

Here’s the problem. In this description of the situation, we have presumed some God-like perspective that can observe every detail inside A and B, and render an oracular judgment about the actor B’ “inside” B. But no such God-like, all-seeing, all-judging perspective exists.

If we replace that third-person view with a real third person, C, we can spot the trouble. Now, C must interact with both A and B to render judgment. This interaction could involve not only conversation, but also all sorts of lab tests, body sensors, brain scans—the works. Even so, C can’t consult any magic oracle to distinguish between “real” and “fake” people, and is still just making a (more detailed) model-based judgment. Theory of mind is that model.

Another judge, D, could come along, and on the basis of their own, somewhat different model, or different interactions, or alternative instrumentation, disagree with C. Who is right?

It’s not that reality doesn’t exist, any more than RQM argues that the universe doesn’t exist. The point is that reality consists of relationships, and there is no specially privileged view, no magic oracle. Perhaps agreement becomes nearly universal on certain kinds of judgments, especially when those judgments carry strong predictive power. But a judgment may always remain contested, especially when it concerns subjectivity itself.

Alters

“Dissociative identity disorder,” a controversial condition formerly known as “multiple personality disorder,” offers a real-life example. People with dissociative identity disorder seem to have multiple other people living in their heads—“alters,” with some combination of different personalities, different memories, and even different-sounding voices. Encountering someone with this disorder can be frightening, since it so dramatically violates our usual assumptions about the indivisible unity of a person, their brain, and their “soul.” At times, it may have been interpreted as demonic possession. 42

Is this condition real, or do people just fake it? If they are faking it, do they have any choice in the matter? Could some people be unaware that they’re faking it? The psychiatric community can’t agree on any of these questions. Some believe it’s an act, others believe it’s real, and yet others hedge their bets. I’m skeptical that we will ever have anything like a universally convincing answer, because I can’t imagine what shape such an answer could take, even in principle. It certainly won’t take the form of a cloven pineal gland.

Imagine for a moment that, as neuroscience advances, it becomes possible to read out a person’s experiences and thoughts perfectly, using superconducting magnetometers, optogenetics, nanotechnology, or whatever else to record the activity of every neuron in the brain. 43 That would, of course, produce a staggering amount of data—impossible for any researcher to make sense of in raw form. Even today’s instruments, which can easily record from hundreds of neurons, require sophisticated computational modeling for any meaningful interpretation.

How would a researcher train and test a model designed to provide total access to a subject’s internal experience? An honest subject could give positive feedback when the model correctly reconstructs what’s in their mind and negative feedback when it’s wrong. Once trained, such a model could allow an experimenter to map brain activity onto the description that subject would give—what they’re seeing, hearing, touching, and tasting, what they’re feeling and thinking, and so on. 44

Once again, we’re talking about a theory of mind. It might be an excellent one. But ultimately, the model is just another “observer C.” Or, more accurately, the model would provide a human experimenter C with a computational prosthetic, a “theory of mind extender” with the ability to decode the rich brain signals in the subject’s head that would otherwise remain hidden. That could make C a fearsome interrogator, but it does not afford them a “view from nowhere.”

It’s unclear whether, even in principle, we could make a generic model that would accurately read out anyone’s mind, as opposed to the mind of a volunteer for whom the model has been individually (and consensually) tuned. But I suspect that even if we could travel forward in time (and into the right Black Mirror episode) to grab such a generic theory of mind–extender gadget, then back in time to experiment with it on various medieval saints who believed they had witnessed miracles, we would find that some of them believed what they were saying. Does that prove these miracles actually happened? And if they didn’t, does it prove that the saints were mentally ill?

St. Catherine of Siena Receiving the Stigmata, by Domenico Beccafumi, about 1513–15. Catherine is shown kneeling in a small chapel while members of her order wonder what is overtaking her, since only she can see the miraculous vision.

I don’t think we can reliably draw either of these conclusions. Virtually all of us believe some things that many others don’t, including the sincerely religious, who comprise a large proportion of the human population, as well as committed atheists. Yet few people in either camp would make the hyperbolic claim that everyone in the other camp is mentally ill.

When it comes to the truth or falsehood of alters, we’re on shakier ground still. Someone’s sincerely held conviction that the Earth is flat is easily and independently testable by anyone—and on the basis of air travel, the moon landing, and much else, it’s as obviously false as a belief gets. 45 But a belief about subjective experience isn’t testable by others in that way. It’s a model of a model, and if it’s your model of your model (whether singular or plural), it’s hard to see how a third party’s contrary claim could invalidate that. It would just be another opinion.

Could one do any better in arriving at a deeper truth? I’ve described a “theory of mind extender” trained with human subjects using supervised learning and wielded by a human experimenter who must ultimately interpret the results. Such scenarios are inherently limited by the human mind. So yes, one could, in theory, do better. Imagine that the complete brain activity of billions of humans, together with every detail of their environment and activity, were used to train a gigantic unsupervised model, vastly more powerful than the human brain, capable of “autocompleting” our every move. For such a model, all the subtlety and complexity of the human mind might boil down to rote behavior plus some irreducible randomness—a bit of deterministic Sphex wasp and a bit of fluttering moth. Surely this model could afford us something more than “just another opinion”!

Not really. It would not tell us whether alters are “real,” or, at least, not unless the most cynical possible interpretation holds—that everyone who claims to have alters is simply lying, knows it, and doesn’t bother keeping up the exhausting act in private. (Few psychiatrists adopt this extreme view, as patients exhibiting such behaviors are often deeply traumatized and exhibit signs of other mental conditions.) In short, insofar as people believe things and are consistent in their beliefs about themselves, such that those beliefs reliably inform their behaviors, the most any model could ever tell us is just that.

Let’s now move on to territory that has been explored scientifically in rigorous detail: the curious case of split-brain patients.

M-I-B

“[…] [T]he Washington freshmen came up with a mantra that their coxswain, George Morry, chanted as they rowed. Morry shouted, ‘M-I-B, M-I-B, M-I-B!’ over and over to the rhythm of their stroke. The initialism stood for ‘mind in boat.’” 46

In the mid-twentieth century, doctors began experimenting with brain surgery as a medical treatment—including, at times, for such purported “conditions” as being gay, or just failing to conform. Their approach was at best cavalier, at worst abhorrent.

One of the few enduring interventions developed during this period was for the treatment of epilepsy. During an epileptic seizure, violent electrical activity spreads through the brain, often starting from one or more foci. Brain surgery to cut out the diseased tissue where the electrical storms begin can be effective. In the most intractable cases, though, a last-ditch solution is to cut through part or all of the corpus callosum, the bridge of white matter connecting the left and right hemispheres. This makes seizures less debilitating by preventing runaway electrical activity from propagating between the hemispheres. Surprisingly, the procedure often has little obvious effect on a person’s cognitive ability or behavior.

On closer examination, though, the results are disconcerting. 47 After the surgery, each hemisphere appears to have independent inputs, outputs, and even thoughts. If different visual stimuli are shown to the left and right halves of the visual field, then a verbal account from the patient will include only what was on the right side; they appear oblivious to anything in the left visual field. 48 On the other hand, if the patient is asked to report on their experience using their left hand—by drawing, for instance—this hand can report the contents of the left visual field, but not the right. 49

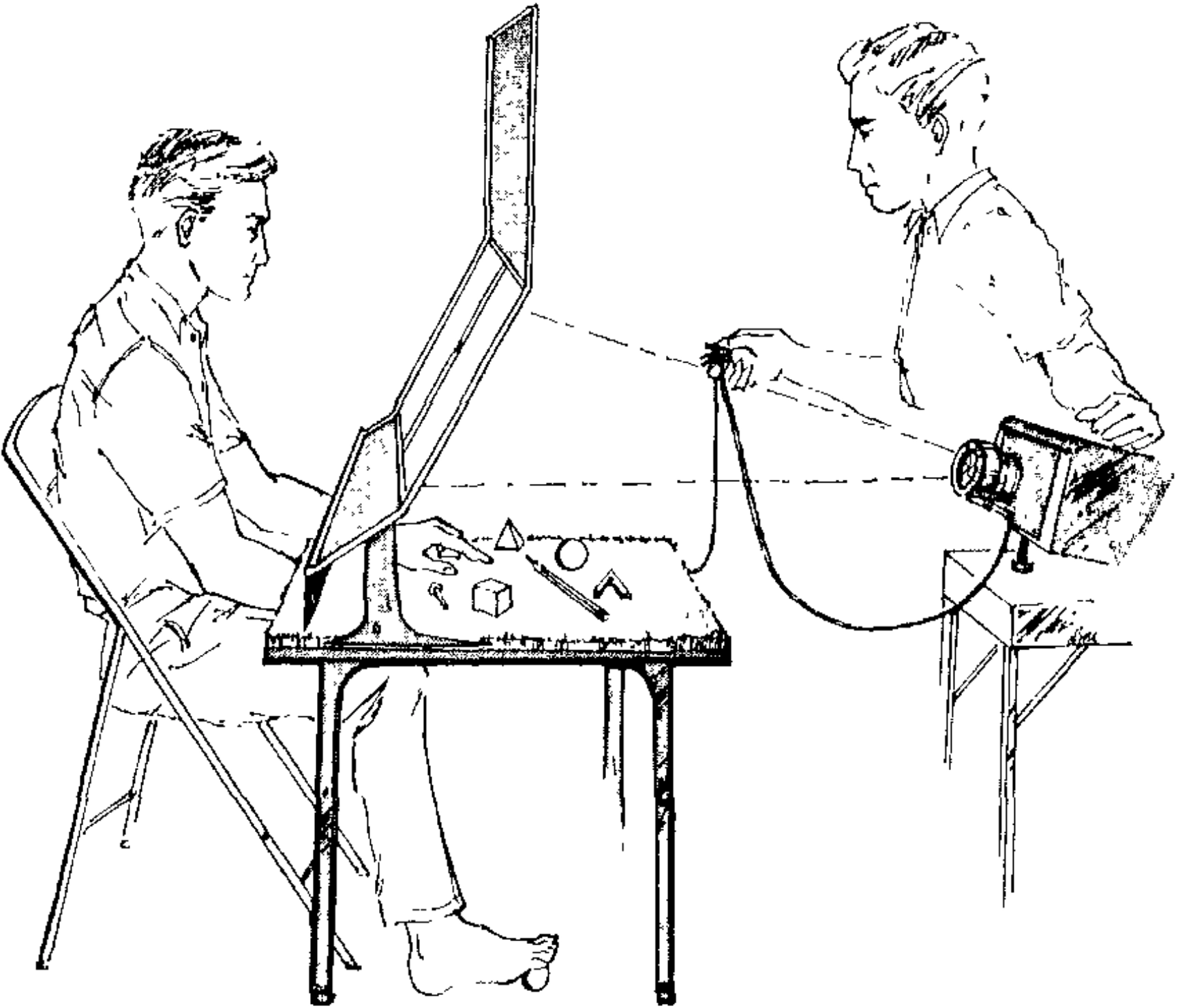

The experimental setup used in classic split-brain experiments, from Sperry 1968

Usually, there are enough ways in which the left and right hemispheres can stay in sync that these inconsistencies don’t arise. The intrinsic coordination of eye movements, for instance, generally ensures that the left and right visual fields see the same world; the setup for split-brain vision experiments requires visual fixation on crosshairs and careful projection of separate left and right images.

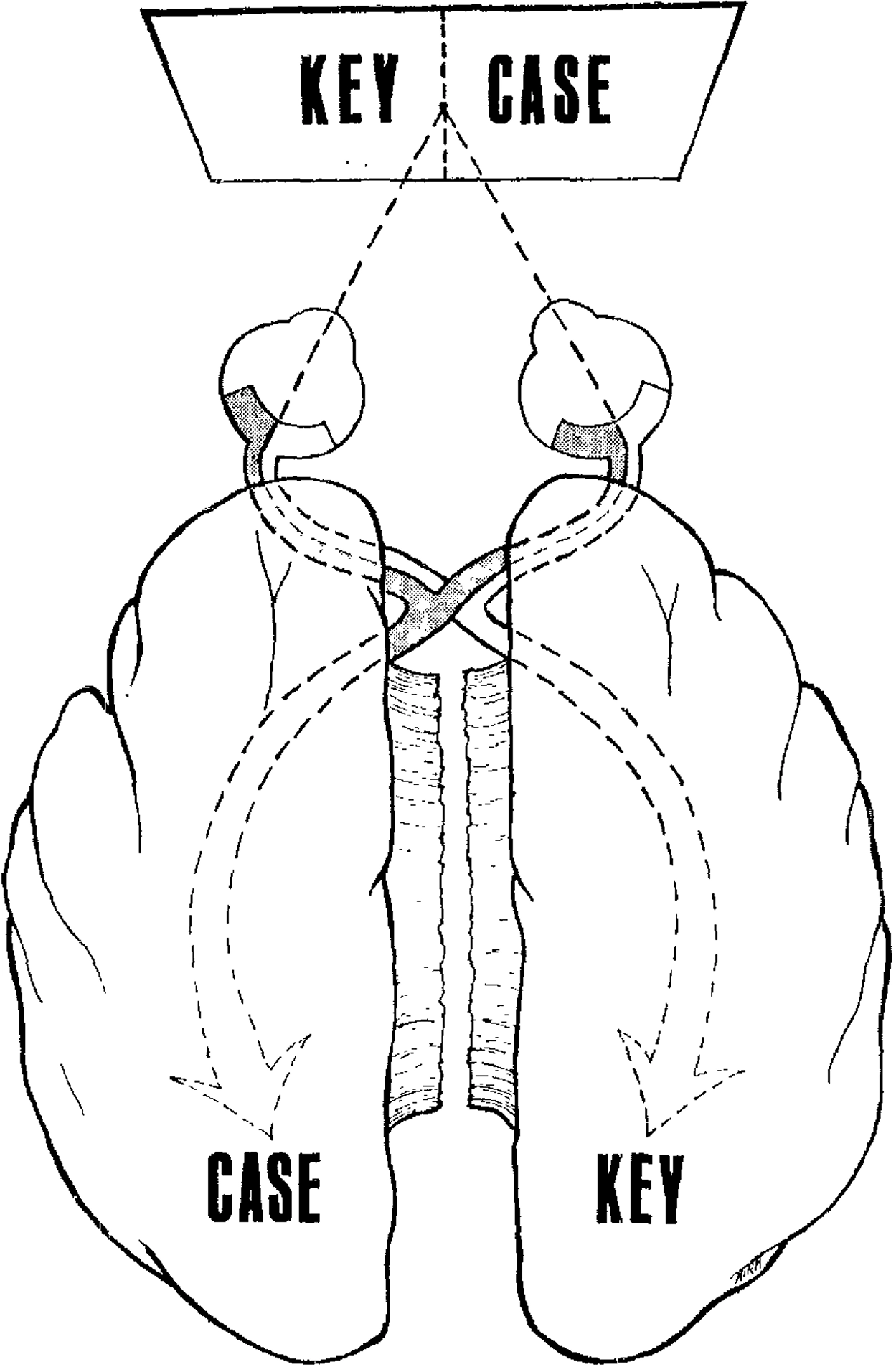

Diagram illustrating the way each hemisphere’s visual cortex receives visual information from the opposite hemifield, from Sperry 1968

Creating these dissociations, though, makes it apparent that, in many ways, split-brain patients have two minds. In one famous recorded experiment, a patient plays “Twenty Questions” with himself to allow one half of his brain to figure out what the other half is seeing. 50 There are even occasional reports of “hemispheric rivalry” in a patient’s behavior. One hand might be buttoning up a shirt while the other hand is busy unbuttoning it, in an apparent disagreement over a wardrobe choice; or one arm might be hugging a spouse while the other pushes him away. 51 So, is a split-brain patient one person, or two?

Demonstration of hemispheric awareness in a split-brain patient

First, let’s note that the split-brain findings may not be as surprising as they seem, if we are indeed not so different from the octopus. Myelination has allowed our neurons to consolidate more in our heads, but our brains nonetheless exhibit extensive symmetry, not so unlike that of the octopus body. Cortical columns appear to be repeated structures with highly local connectivity, like the nerve ganglia surrounding each of an octopus’s suckers, and our cerebral hemispheres are (nearly) bilaterally symmetric, like a pair of octopus arms. Communication between the hemispheres is higher-bandwidth than between an octopus’s arms, but it’s still limited to a narrow bridge, even in the intact human brain.

Neuroscience has also failed to locate a homunculus anywhere in the brain; there doesn’t appear to be any single special spot where consciousness “lives.” Hence it’s not so surprising that both hemispheres are conscious, contra Descartes.

And finally, we know that the hemispheres are innervated differently and specialize in different functions. The visual field is divided in two by the optic chiasm, a cross-shaped wiring complex that visual signals must pass through en route to the left and right visual cortex at the rear of the brain, in their respective hemispheres. We’ve known for a long time that the left hemisphere controls and receives inputs from the right side of the body, while the right hemisphere controls and receives input from the left side of the body. We’ve also long known that, in most people, including nearly all right-handers, language is handled mainly by the left hemisphere.

All of which is to say: if the left and right hemispheres can no longer communicate directly, then the lateralized neurons producing language can’t speak to anything seen or experienced on the opposite side of the body. How could it be otherwise?

Still, we could reasonably have hypothesized a few other outcomes:

- The split-brain patients might never have woken up after surgery. If consciousness in any form had required the continual communication of specialized areas on both sides of the brain, then cutting those connections could have left patients comatose or unresponsive.

- The split-brain patients might have woken up with complete hemineglect and hemiplegia: consciousness of and ability to control only one side of the body. This would imply that “you” live on only one side of your brain, while the other is just a peripheral—a lateralized version of the homunculus idea.

- The split-brain patients might have been deeply cognitively disabled when they woke up. This would have been the case if cognition were so diffusely “holographic” that forming any clear thought or percept requires every part of the brain to work in tandem.

Fortunately, for most of the patients, none of the above obtain. What we see instead speaks to the modularity, robustness, parallelism, and “internal sociality” of the cerebral cortex.

As I’ve argued, no part of the cortex is doing something fundamentally different from any other part. Specialization arises mainly due to connectivity and something like the division of labor in a human society—that is, analogously with the way we learn different skills and do different jobs, but are not fundamentally different in our capacities. Local connectivity is “cheaper” than long-range connectivity, so functionality is implemented as locally as possible—a design that can be robust to failures in connectivity (as evidenced by split-brain patients) or to regions of the cortex being knocked out, whether by injury, stroke, or some more transient event.

Hence our cerebral hemispheres seem to be intelligences in their own right, which are made of still more local intelligences, and so on. And of course, for intelligences to work effectively with others, they must continually model each other. It’s theories of mind, all the way down.

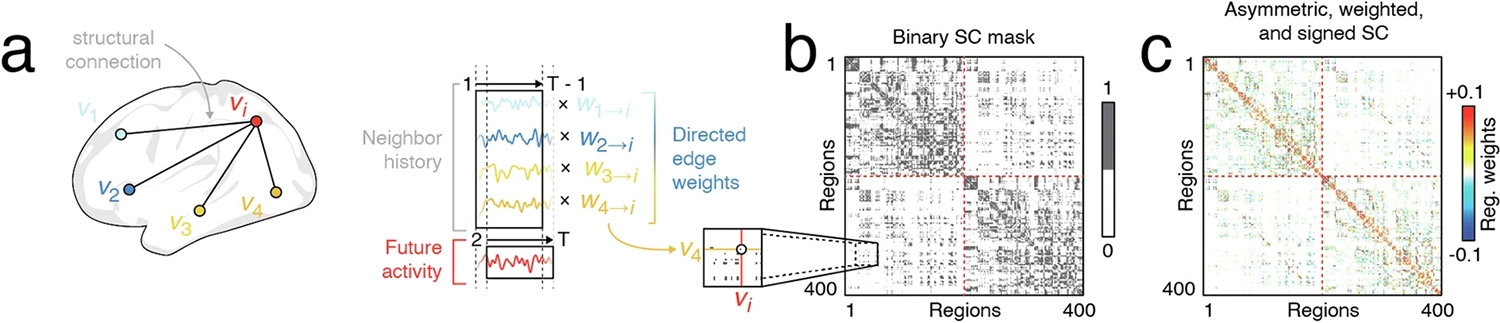

A visualization of the neural connectivity of the human brain based on temporal activity patterns, from Tanner et al. 2024. Regions 1–200 represent one hemisphere, and 201–400 represent the other; notice the strong connections within hemispheres and far weaker connections between hemispheres. Within each hemisphere there are in turn sparsely interconnected regions exhibiting denser local connectivity. This “multifractal” pattern presumably holds at finer scales too.

Perhaps the most revealing split-brain finding is not in how the abilities of the patients are affected, but in their subjective experience. They need to work harder at certain tasks than those of us with intact brains do, as revealed by Twenty Questions–type scenarios that look suspiciously like two people helping each other—or, even more frustratingly, hindering each other, such as when hands are actively working against each other—rather than one person. It might, similarly, be harder for a rowing crew to achieve swing if a barrier were suddenly erected mid-boat, preventing the rowers in the front and the back from seeing or hearing each other. They might even occasionally try to row in opposite directions. Nonetheless, the left and right hemispheres of a split-brain patient are still obviously in the same boat.

The result? Split-brain patients won’t “admit” that there’s more than one person in there. This is a particularly striking illustration of how the relational quality of theory of mind violates our usual assumption that there’s a single, objective truth about “who is a who.” The patient still feels whole; it’s just harder, after the surgery, to reach for certain words or perform certain tasks, especially when one’s eyes or hands are prevented from working together “normally.”

A first-person account from split-brain patient Joe

However, to the experimenter, it seems evident that there are two minds in the patient’s head. Indeed, to make sense of any interaction with a split-brain patient in an experimental setting where left and right stimuli are dissociated, it’s necessary to have distinct theories of mind for the left and right hemispheres—what do they see and hear? Which hands do they control? What do they know? What will they choose to do? To fail to take these things into account would be to flunk the Sally-Anne Test. It’s worth reflecting on the implications, even for those of us with an intact corpus callosum.

When we say we’re “of two minds” about something, could that literally be true? It is a small miracle that we manage to make decisions and take coherent action most of the time, despite being a tandem bike, or rowing crew, or maybe a whole crowd, on the inside. Yet we learn such internal cooperation from birth. Unless you learn how to do it as a party trick, it’s hard (without a split brain) to button your shirt with one hand while simultaneously unbuttoning it with the other!

Still, we’re not by any means in eternal a priori agreement with ourselves—or else that multiplicity would be redundant, and would therefore not exist, as a big brain is a highly expensive adaptation. We only get more intelligent as the brain scales up because every additional “local mind” brings its own skills, inputs, specializations, and, one could even say, differing opinions to the table.

Experimenters can easily engineer internal disagreements by presenting us with forced-choice responses to conflicting stimuli that will be processed by different parts of the cortex. The “Stroop effect” is a classic example, named after American psychologist John Ridley Stroop. In 1935, Stroop published a study testing the reaction times of subjects on a simple color-recognition task. The name of a color was printed on a card in colored ink. If the ink color conflicted with the named color, for instance when the word “red” was printed in green ink, both the error rate and reaction time rose considerably. 52

A demonstration of the Stroop effect: try to name the color of the text

Despite the complexity of the brain, studies like these are consistent with a simple model of how we arrive at decisions: parallel populations of neurons “vote,” with softmax-like lateral inhibition determining which ones “win.” 53 A close call leads to slower convergence. The fine balance of neural circuits once again comes into play here: always close enough to firing that an eager “raised hand” somewhere can quickly cascade into a decisive global response, yet not so overexcitable that chaos and epilepsy ensue.
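The voting picture can be made concrete with a toy simulation. What follows is a minimal sketch under stated assumptions, not the model from the work cited above: each population accumulates its own evidence while being suppressed in proportion to the summed activity of its rivals, a simple linear stand-in for softmax-like lateral inhibition. The function name `steps_to_decision` and every parameter value (`inhibition`, `threshold`, `dt`) are illustrative choices, not measured quantities.

```python
import numpy as np


def steps_to_decision(evidence, inhibition=1.0, threshold=2.0,
                      dt=0.01, max_steps=100_000):
    """Toy winner-take-all vote among competing neural populations.

    Returns (winner_index, steps_taken), or (None, max_steps) if no
    population reaches the threshold. All parameters are hypothetical.
    """
    evidence = np.asarray(evidence, dtype=float)
    activity = np.zeros_like(evidence)
    for step in range(1, max_steps + 1):
        # Each population is suppressed by the summed activity of the others.
        rivals = activity.sum() - activity
        activity = activity + dt * (evidence - inhibition * rivals)
        # Firing rates cannot go negative.
        activity = np.maximum(activity, 0.0)
        if activity.max() >= threshold:
            return int(activity.argmax()), step
    return None, max_steps


# A lopsided vote versus a close call; population 0 has the stronger input.
clear_winner, clear_steps = steps_to_decision([1.0, 0.2])
close_winner, close_steps = steps_to_decision([1.0, 0.9])
print("clear:", clear_winner, clear_steps)
print("close:", close_winner, close_steps)
```

In this noise-free sketch, both runs settle on the same population, but the close call takes more steps to reach threshold, loosely echoing the slower responses in Stroop’s conflicting-color trials. A perfect tie never resolves here at all; a real brain has noise to break the symmetry.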

Although it’s a fine balance, room for functional variability remains. Are our neural parameters tuned for faster convergence on a decision, or for more thoughtfulness? Or for too much thoughtfulness, also known as waffling? (That’s me!) If some cortical columns actively dissent, do they commit to a collective decision anyway, or do they carry on grumbling? How much idle chatter do brain regions generate when they’re not trying to drive behavior? Could they even attempt to pursue their own agenda by “fooling” their neighbors at times?

These are interesting questions, and ones that may underlie certain systematic personality differences between people. Carried to extremes, such differences could become dysfunctions: lack of impulse control, decision paralysis, perhaps even schizophrenia or dissociative identity disorder.

The Interpreter

One of the most telling split-brain findings is the way the language-specialized (usually left) hemisphere assumes a role neuroscientist Michael Gazzaniga and colleagues have dubbed “the interpreter.” 54 It has sometimes been cited as a counterargument to “the typical notion of free will,” 55 but, more to the point, the interpreter role reveals something important about how and why split-brain patients tend to feel to themselves like one person, despite their (literal) cognitive dissonance.

In one classic early study, a patient’s left hemisphere was shown a chicken claw, while the right hemisphere was shown a snow scene. The patient needed to select associated objects with each hand, given four choices per side. As expected, each hand chose an image associated with what its corresponding hemisphere could see: for the left hand, a shovel (rather than a lawnmower, rake, or pickaxe), and for the right hand, a chicken (rather than a toaster, apple, or hammer).

But now comes the twist. When asked why he had made those choices, the patient responded without hesitation, “Oh, that’s simple. The chicken claw goes with the chicken, and you need a shovel to clean out the chicken shed.” The language-imbued left brain appears to be, in other words, a fluent bullshitter.

In another example, the right hemisphere is given the instruction, “Take a walk.” The subject stands up and begins walking. When asked why, the response might be, “Oh, I need to get a drink.” 56

You may now be wondering: how could a split-brain patient manage to walk at all, when that requires the coordinated activity of both legs? And how could such a bilaterally coordinated action take place in response to a unilaterally willed command?

In fact, split-brain patients perform coordinated activities all the time, including tasks involving both hands. Some researchers have hypothesized that elusive cross-hemispheric nerve fibers somewhere other than the corpus callosum maintain some kind of minimal communication, but this has never been convincingly shown, either behaviorally or neuroanatomically. 57

It’s not necessary to reach for such an explanation, though. The whole body is a rich cross-hemispheric communication channel. Your eyes can see what your arms and legs are doing. If one leg begins to exert pressure on the ground to stand up, your butt can feel it. If the muscles on one side of your neck begin to tense to turn your head, that tension is felt on the opposite side. And so on. As anyone who has rowed crew or run a three-legged race knows, it’s possible to quickly pick up cues and follow through on movements based purely on sensory feedback. Gazzaniga and colleagues call this “behavioral cross-cueing”; it emphasizes the importance of physical embodiment, the way our bodies are, in a sense, part of our brains, as well as vice versa.

Conjoined twins Abby and Brittany Hensel vividly illustrate the phenomenon. They have separate heads, brains, and spinal cords, but a single pair of arms and legs, with Abby controlling one arm and leg and Brittany controlling the other. Despite their virtually complete sensory and motor separation, the twins are able to run, swim, play volleyball, play the piano, ride a bicycle, and drive a car. 58 When one of them initiates a movement, the other unconsciously and effortlessly follows through. Not only can they complete each other’s sentences, as close friends might be able to; they also share an email account, and have no trouble typing email with both hands. While they maintain individual identities, they use the “I” pronoun when they agree, as they often do, and use their names if they disagree.

Abby and Brittany Hensel plan a road trip to Chicago

In this light, the left hemisphere’s effortlessly creative narrative “interpretation” seems less like a special case (or like bullshit) than like what the cortex always does: it predicts, and follows through. This involves constantly updating its theory of mind, including a theory of mind for that omnipresent first-person entity we call “I.” Such a theory-of-mind model includes simple, low-order terms to predict the immediate future, like “I’m walking, and just stepped with my left foot, so my right foot is going to step next.” It also includes higher-order terms, like “I’ve just gotten up to walk, and I’m a little thirsty, so I’m probably headed for the kitchen to get a drink of water.”

If the bit of cortex doing the modeling happens to control my right foot, then its “active inference” will involve moving that foot to “autocomplete” the walking movement. In fact the spinal cord is perfectly capable of such low-order autocompletion on its own. 59 If, instead, the bit of cortex doing the modeling is the left hemisphere’s language center and the experimenter has just asked why I’m leaving the room, then autocompletion involves spinning a likely story.

Even with a corpus callosum, no part of the brain can have complete access to every other part, so this kind of inference takes place all the time. Mutual modeling, including within the brain, is the very essence of intelligence.

Still, this kind of teamwork, in which our “inner selves” predict and cover for each other, can lead to embarrassment. We’re all highly invested in appearing whole, unified, consistent, and “rational” in our social interactions with others. Given the potentially adversarial nature of social prediction, we’re disconcerted when someone else is able to predict us better than we can predict ourselves. Per chapter 5, being too predictable makes us vulnerable, and feels like a violation. It’s even worse to believe that you’ve made a choice when you haven’t, and then to be caught out justifying that “choice” with a post-hoc rationale—a literal attack on personal integrity.

Yet we’re all vulnerable to such manipulation, as Swedish psychologist Petter Johansson and colleagues have shown in a series of groundbreaking studies. They first demonstrated the phenomenon they call “choice blindness” in a 2005 study entitled “Failure to Detect Mismatches between Intention and Outcome in a Simple Decision Task.” 60 The task involved showing the subject two cards with faces on them and asking which was more attractive. Immediately after choosing, subjects were sometimes shown their card again, and asked why they had judged this face more attractive. However, unbeknownst to the subjects, in three out of fifteen trials their choice was swapped using sleight of hand. They were being asked to justify a choice they had not actually made.

Choice blindness in a face-preference task, from Petter Johansson’s TEDxUppsalaUniversity talk in 2017

Surprisingly few subjects noticed the swap. When they had been given two seconds to make a judgment (which they generally affirmed was enough time), only thirteen percent detected the ruse. Even under the friendliest possible experimental conditions, when they were given unlimited time to judge, and the faces were selected to be especially dissimilar, the figure only rose to twenty-seven percent. Viewing time was the only condition that made any difference. The respondent’s age and sex didn’t matter. Neither did the similarity of the faces, even though “[low-similarity] face pairs […] bore very little resemblance to each other, and it is hard to imagine how a choice between them could be confused.”

Perhaps most surprisingly, there was little or no statistically significant variation between the justifications given for real or swapped choices. The researchers certainly tried to find such differences. Using multiple human raters, they considered length of response, laughter, emotionality, specificity, the proportion of blank responses (in which subjects couldn’t say why they had made the choice), and even whether they described their judgment in the past or present tense. The only slight difference—maybe a telling one—was in “more dynamic self-commentary” in the swapped instances, in which “participants come to reflect upon their own choice (typically by questioning their own prior motives),” but only five percent of respondents evinced this behavior.

As behavioral scientist Nick Chater has written in describing these experiments, our left-brain “interpreter” can “argue either side of any case; it is like a helpful lawyer, happy to defend your words or actions whatever they happen to be, at a moment’s notice.” 61