Preface

AI is probably the biggest story of our lifetimes. Its rapid development, starting in the early 2020s, has generated a mixed outpouring of excitement, anxiety, and denial.

Everyone wants to weigh in. Even authors writing about history, language, biology, sociology, economics, the arts, and psychology feel the need to add a chapter about AI to the end of their books. Indeed, AI will surely affect all of these far-flung domains, and many more—though exactly how, nobody can say with any confidence.

This book is a bit different. It’s less about the future of AI than it is about what we have learned in the present. At least as of this writing, in January 2025, few mainstream authors claim that AI is “real” intelligence. 1 I do. Gemini, Claude, and ChatGPT aren’t powered by the same machinery as the human brain, of course, but intelligence is “multiply realizable,” meaning that, just as a computer algorithm can run on any kind of computer, intelligence can “run” on many physical substrates. In fact, although our brains are not like the kinds of digital computers we have today, I think the substrate for intelligence is computation, which implies that a sufficiently powerful general-purpose computer can, by definition, implement intelligence. All it takes is the right code.

The Wright brothers’ first flight, 1903

Thanks to recent conceptual breakthroughs in AI development, I believe we now know, at least at a high level, what that code does. We understand the essence of an incredibly powerful trick, although we’re still in the early days of making it work. Our implementations are neither complete, nor reliable, nor efficient—a bit like where we were with general computing when the ENIAC first powered up, in 1945, or where we were with aviation when the Wright brothers made their first powered flight, in 1903.

It’s an old cliché in AI that airplanes and birds can both fly, but do so by different means. 2 This truism has at times been used to motivate misguided approaches to AI. Still, the point stands. Bird flight is a biological marvel that in some respects remains poorly understood, even today. However, we figured out the basic physics of flight—not how animals fly, but how it’s possible for them to do so—in the eighteenth century, with the discovery of Bernoulli’s equation. Working airplanes took another century and a half to evolve.

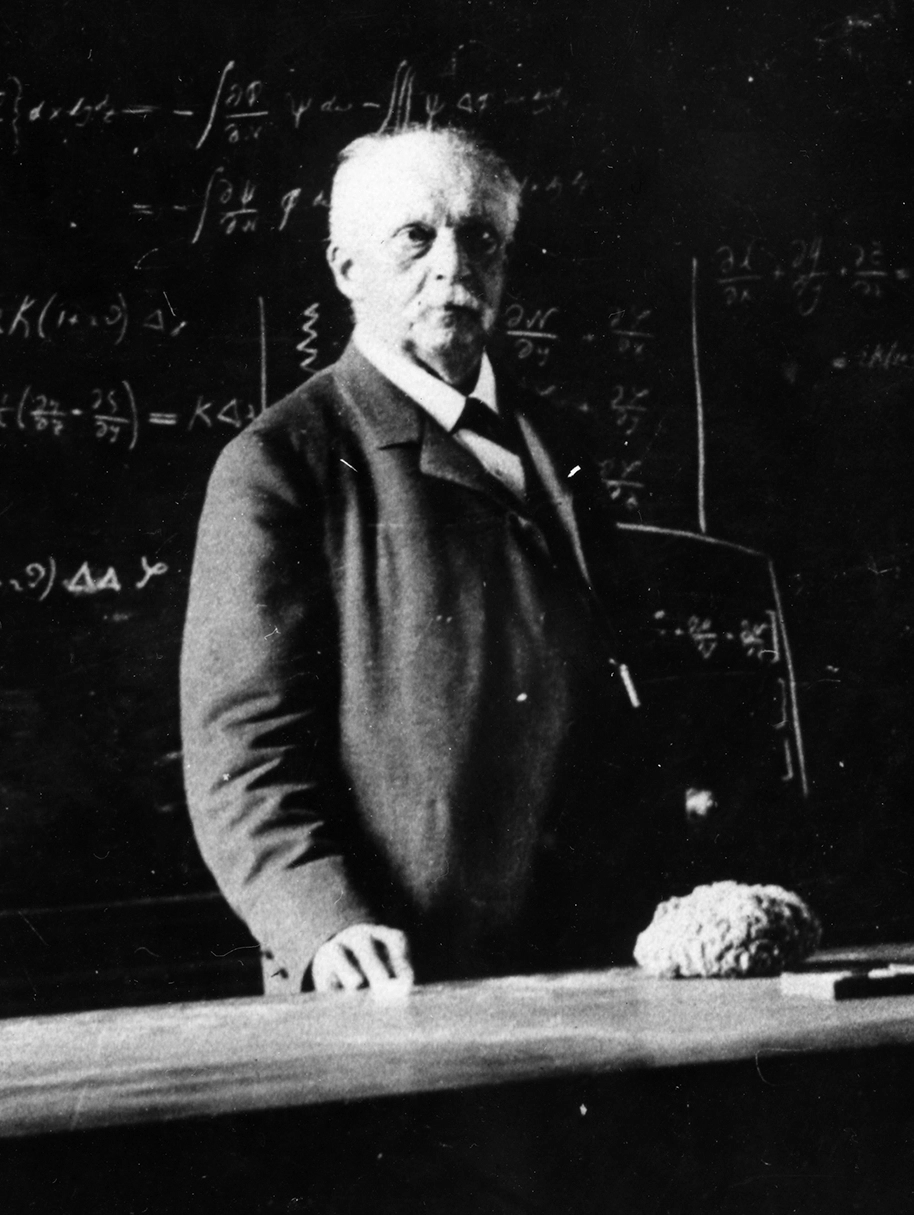

Last photograph of von Helmholtz, taken three days before his final illness

Similarly, while there is still a great deal about the brain that we don’t understand, the idea that prediction is the fundamental principle behind intelligence was first put forward by German polymath Hermann von Helmholtz in the 1860s. 3 Many neuroscientists and AI researchers have elaborated on Helmholtz’s insight since then, and built models implementing aspects of the idea, 4 but only recently has it become plausible to imagine that prediction really is the whole story. I will argue that, understood in full and interpreted broadly, the prediction principle may explain not only intelligence, but life itself. 5

My definitions of life and intelligence are different from others in the literature. On the other hand, definitions in the literature also differ dramatically from each other. The definitions I propose are not designed to litigate particular instances (like the aliveness of viruses, or the intelligence of chatbots) but rather follow from the picture I’ll present of how and why life and intelligence arise.

I define life as a self-modifying, computational state of matter that arises through evolutionary selection for the ability to persist through time by actively constructing itself, whether by growing, healing, or reproducing. Yes, everything alive is a computer! Chapter 1 will explain why self-construction requires computation, and why, even more fundamentally, the concept of function or “purpose,” which is central to life, is inherently computational.

Intelligence, in turn, is the ability to model, predict, and influence one’s future. Modeling and prediction are computational too, so they can only take place on a computational platform; thus intelligence needs life. Likewise, life needs intelligence, because the ability to persist through time depends on predicting and influencing the future—in particular, on ensuring that the entity doing the predicting will continue to exist in the future. Hence all living organisms are, to one degree or another, intelligent. Every one of them is smart enough to have persisted through time in a complex, ever-changing environment.

That environment is each other: life and intelligence are inherently social. Every living thing is made of simpler cooperating parts, many of which are themselves alive. And every intelligence evolves in relation to other intelligences, potentially cooperating to create larger, collective intelligences. Cooperation requires modeling, predicting, and influencing the behavior of the entities you’re cooperating with, so intelligence is both the glue that enables life to become complex and the outcome of that increasing complexity.

The feedback loop evident here explains the ubiquity of “intelligence explosions,” past and present. These include, among many others, the sudden diversification of complex animal life during the “Cambrian explosion,” beginning about 538.8 million years ago, the rapid growth of hominin brains starting about four million years ago, the rise of urban civilization in the past several thousand years, and the exponential curve of AI today.

That predictive modeling is intelligence explains why recent large AI models really are intelligent; it’s not delusional or “anthropomorphic” to say so. This doesn’t mean that AI models are necessarily human-like (or subhuman, or superhuman). In fact, understanding the curiously self-referential nature of prediction will let us see that intelligence is not really a “thing.” It can’t exist in isolation, either in the three pounds of neural tissue in our heads or in the racks of computer chips running large models in data centers.

Intelligence is defined by networks, and by networks of networks. We can only understand what intelligence really is by changing how we think about it—by adopting a perspective that centers dynamic, mutually beneficial relationships rather than isolated minds. The same is true of life.

Developing these ideas will require weaving together insights from many disciplines. As we go along, I’ll introduce concepts in probability, machine learning, physics, chemistry, biology, computer science, and other fields. When they’ve been most relevant to shaping our (sometimes mistaken) beliefs, I’ll also briefly review the intellectual histories of key ideas, from seventeenth-century “mechanical philosophy” to debates about the origin of life, and from cybernetics to neuroscience.

You, dear reader, may be an expert in one or more of these fields, or in none. Few people are expert in all of them (I’m certainly not), so no specialized prior knowledge is assumed. On the other hand, even if you’re an AI researcher or neuroscientist with little patience for pop science, I hope you will find new and surprising ideas in this book. A general grasp of mathematical concepts like functions and variables will be helpful (with bonus points for knowing about vectors and matrices), but there will be no equations. (Well … almost none.) A general understanding of how computer programming works will be useful in a few places, but isn’t required. If you find fundamental questions about the nature of life and intelligence interesting enough to still be reading, rest assured: you are my audience.

The Introduction describes the recent emergence of “real” AI, sketching the hypothesis that prediction is intelligence and pointing out some of the big questions that raises. The rest of the book consists of about a hundred bite-size sections that attempt to answer those questions, organized into the following ten chapters (plus two interludes):

- Origins begins with “abiogenesis,” the emergence of life on Earth some four billion years ago. Darwin struggled to understand how life could have arisen. “Artificial life” experiments let us make more progress today, and suggest that life may be inevitable in a world capable of supporting computation (like ours). Understanding the deep role computation plays in our universe, and the way it links the seemingly disparate fields of physics, chemistry, and biology, both sheds new light on evolutionary theory and sets the stage for understanding the computational nature of intelligence.

- Survival considers the predictive intelligence hypothesis from the perspective of a tiny, simple organism capable of rudimentary computation: a bacterium. Being alive in the world is inherently social (even for bacteria), but this chapter imagines life as a one-player game, and considers what it takes to keep playing—which, since life is an infinite game, is the only prize there is.

Interlude: The prehistory of computing offers a historical perspective on the origins and founding assumptions of “traditional” computer science, from its roots in Enlightenment thinking and the Industrial Revolution to the development of the first electronic computers at the close of the Second World War.

- Cybernetics traces an alternative history of computing, which has recently re-emerged as modern AI, back to its foundations in the mid-twentieth century, describing how Norbert Wiener’s biologically inspired theory of feedback lets us understand the connections between the predictive intelligence hypothesis and the development of artificial neural nets.

- Learning connects more recent advances in machine learning with our growing understanding of computational neuroscience and the evolution of nervous systems.

- Other minds explores how, as multiplayer life becomes complex, the main job of a mind becomes modeling other minds. This leads to a mutual modeling arms race, resulting in the kind of “intelligence explosion” that has produced humanity.

- Many worlds describes the way both long-term planning and short-term unpredictability (otherwise known as “free will”) emerge as consequences of social modeling, especially when applied to ourselves.

- Ourselves both explains and deconstructs consciousness in light of the above, asking: are we as coherent a “self” as we believe? Answering will involve combining conceptual insights and experimental findings from AI and neuroscience.

- Transformers chronicles the development of large language models and their multimodal successors. Do these AI models understand concepts? Are they intelligent? Can they reason?

- Generality explains both similarities and differences between Transformers and brains, shedding light on how Transformers achieve their generality and where gaps remain in their functionality. Here we’ll also grapple with the most fraught question: do AI models have subjective experiences?

Interlude: No perfect heroes or villains offers a short but important disclaimer about the AI debate.

- Evolutionary transition zooms out and looks ahead. The emergence of AI probably won’t bring either rapture or apocalypse, but it does resemble earlier major evolutionary transitions on Earth, including those that led to eukaryotes, multicellular life, and photosynthesis. As in these earlier (and momentous) transitions, mutual prediction and new interdependencies will generate unprecedented new levels of complexity and diversity. We will also need to revise our political and economic assumptions, and even to rethink human identity.

The ideas in this book came together first gradually, over the course of decades as a software engineer and occasional computational neuroscientist, and then quickly, when my colleagues and I at Google found ourselves at the crest of AI’s “great wave” in the early 2020s.

Short film of the Marine Biological Laboratory in Woods Hole, 1935

Here’s my story, in brief. I’ve been obsessed with both brains and computers since childhood. In the late 1990s, as I was finishing a BA in Physics at Princeton, I began working with physicist and computational neuroscientist Bill Bialek on simulations to explore the relationship between what neurons actually do, which we have understood at a biophysical level since the 1950s, and what they compute. 6 Bill’s computational perspective on neuroscience influenced my own thinking deeply, both then and now.

After I graduated, Bill asked me to help set up the computers for a course he had been invited to co-direct that summer in Woods Hole, a small town on the elbow of Cape Cod. It was a wonderful opportunity to audit a course I wouldn’t otherwise have gotten into, and the experience proved life-changing.

The Marine Biological Laboratory in Woods Hole, founded in 1888, is at once ramshackle and illustrious. Over sixty Nobel Prize winners have been associated with it at one time or another. The summer courses are legendary, attracting visiting lecturers from around the world. Old class photos from the course Bill had inherited, Methods in Computational Neuroscience, looked like a who’s who of the field twenty years later. They still do.

One of the postdocs in that 1998 cohort, Adrienne Fairhall, was, like Bill, a physicist who had decided to work on the brain. We married, and a decade after we met at that course, she became its director! Our kids spent many idyllic summers in Woods Hole, hanging out with the families of the other visiting scientists, rowing around in a little dinghy on Eel Pond and poking gingerly at bioluminescent comb jellies and other small creatures they scooped up in plastic buckets. At their workbenches, the older researchers did much the same. Over the years, many organisms collected from those waters have provided fundamental insights into neural computation, from squids (whose giant axons were ideal for figuring out the biophysics of the action potential or “spike”), to the distributed nerve nets of jellyfish and Hydra, to the simple visual system of the Atlantic horseshoe crab, Limulus polyphemus.

While Adrienne established herself as a computational neuroscientist in the 2000s, I founded a tech startup. The startup was acquired by Microsoft, where I worked between 2006 and 2013. One of my areas of focus at the time was computer vision. Among other responsibilities, I led teams working on problems like reconstructing 3D scenes from images, tracking camera motion, and recognizing objects and text—all using hand-engineered or “Good Old Fashioned” AI (GOFAI).

By the early 2010s, the wind was shifting. Artificial neural nets, which were much more explicitly brain-inspired than GOFAI algorithms, were taking off. The new approach was obviously the future.

So, in late 2013, I left Microsoft to join Google Research, the epicenter of this new convergence between AI and neuroscience. The following decade was a tremendously exciting time, during which I built many teams to work on a wide range of projects in AI. We did basic research in neural net–powered perception, media generation, AI bias and fairness, and privacy-preserving training algorithms. We also helped various product teams at Google build AI features and technologies, and hatched big dreams about what AI might ultimately make possible, beyond the envelope of any existing kind of product.

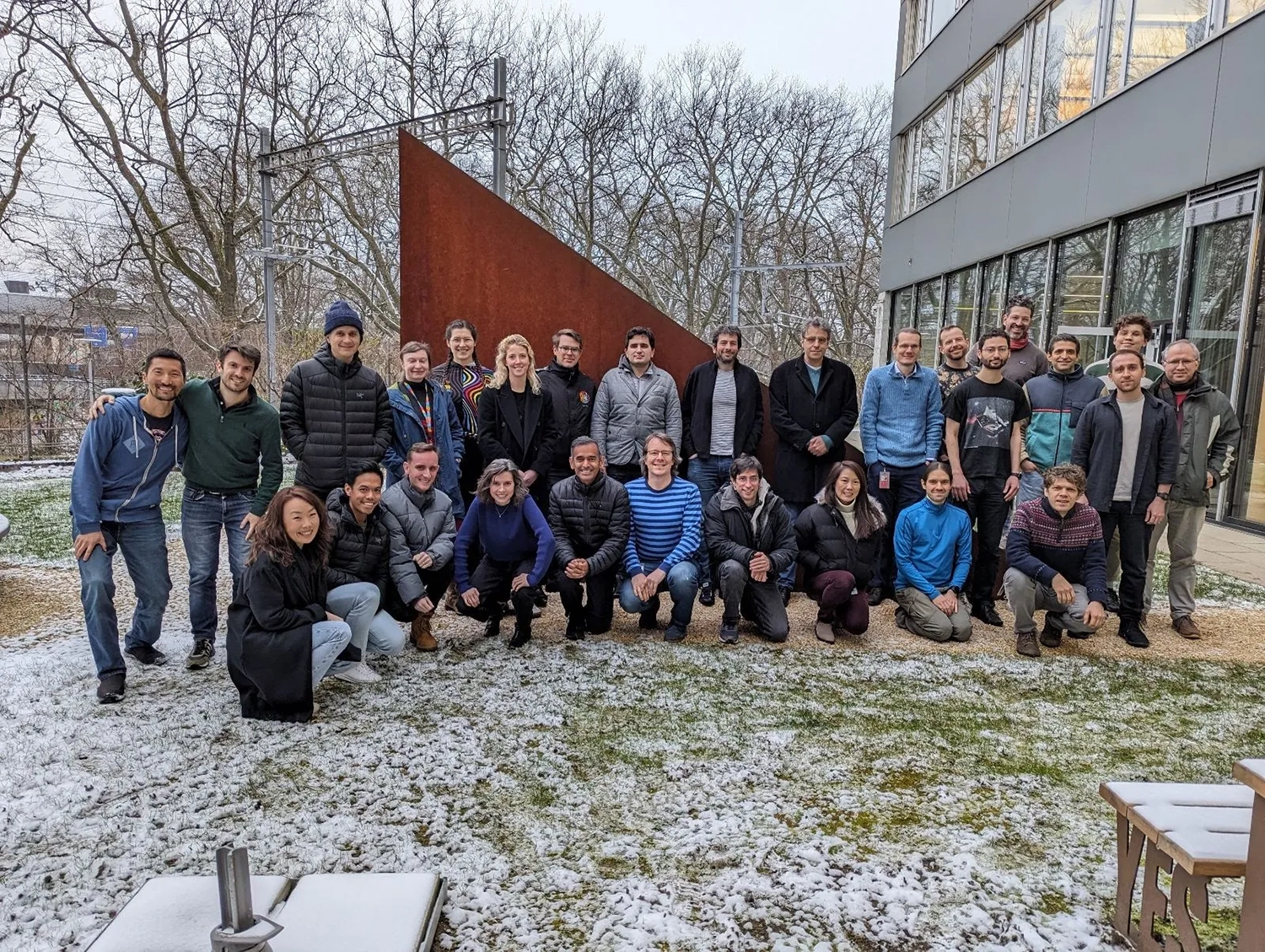

In late 2023, I founded a new research group at Google, Paradigms of Intelligence, to reimagine the foundations of AI. Our projects so far have included alternative, biologically inspired approaches to computation, new design ideas for efficient AI hardware, exploration of the predictive modeling hypothesis, research into the social scaling of AI, and even artificial life.

The Paradigms of Intelligence (Pi) team, Zürich 2024

I’ve been privileged to see and at times participate in the development of AI projects that were far enough ahead of the curve to seem like magic. Many of them caused me to rethink old assumptions, not only about AI, but also about the brain, intelligence, evolution, and the big philosophical questions that have dogged these topics for millennia. I began to write and give talks about some of the new insights, but synthesizing them into a coherent picture required more time and space than could fit into a lecture, essay, or paper.

This book is my attempt to bring it all together. It’s an extended argument drawing on a wide range of work—from my own research group, from colleagues, and from much farther afield. In swinging for the fences, I’m sure to get some things wrong. I hope to keep revising and updating the material as AI advances at breakneck speed and our understanding continues to improve. My hope and belief, though, is that the book’s core ideas trace a novel, enduring path through the complex territory of life and intelligence in all its forms.

Holloway 2023; Agrawal, Gans, and Goldfarb 2024. A few recent books I find compelling but that claim AI is not “real” include Godfrey-Smith 2020; Seth 2021; Smith-Ruiu 2022; Christiansen and Chater 2022; Lane 2022; Humphrey 2023; M. Bennett 2023; K. J. Mitchell 2023; Mollick 2024.

Feigenbaum and Feldman 1963.

See von Helmholtz 1925, though as always when it comes to intellectual priority, an argument can be made that it goes back earlier (here, for instance, to Immanuel Kant). A modern, more mathematical articulation of the prediction principle was proposed by the early cyberneticists, as will be discussed in “Behavior, Purpose, and Teleology,” chapter 3; see Rosenblueth, Wiener, and Bigelow 1943.

Anil Seth and Andy Clark have also made a vigorous case for prediction as fundamental to intelligence, though their books don’t explicitly make the connection to predictive modeling in AI; Seth 2021; A. Clark 2023.

Agüera y Arcas, Fairhall, and Bialek 2000, 2003; Hong, Agüera y Arcas, and Fairhall 2007.