Single System

A widely cited psychological model for human reasoning posits two modes of thought in the brain, “System 1” and “System 2.” 1 System 1 is fast, automatic, effortless, frequent, stereotypic, and unconscious, while System 2 is slow, effortful, infrequent, logical, calculating, and conscious. Obvious parallels can be drawn with a chatbot’s one-shot “just give me the answer” mode of operation, which resembles System 1, and chain-of-thought prompting, which induces the model to work more like System 2.

Researchers have even quantified this parallel by testing large language models using human psychometric tasks designed to expose System 1’s cognitive biases. Without chain-of-thought prompting, chatbots tend to use the same kinds of heuristic shortcuts we do in System 1 mode, whereas with chain-of-thought, they reason things through, as we do in System 2 mode, avoiding many logical errors and “cognitive illusions.” 2 These findings suggest a shared computational basis for the two systems. To put it another way, perhaps only one system is in play, which can work either in one shot or in multiple steps.

If so, that would help resolve a longstanding evolutionary puzzle. French cognitive scientists Hugo Mercier and Dan Sperber have referred to reason as “an enigma” 3 because it seems so unclear how it could evolve gradually out of “instinctive” animal behaviors. After all, nonhuman animals make System 1–style inferences all the time, yet even the cleverest of them seem far from capable of scaling the kinds of intellectual cliffs humans do. How, then, could we have evolved the capacity to reason if System 2 is so different, and so unprecedented? 4

Mercier and Sperber suggested that a similar kind of mental “module” could carry out the kinds of inferences associated with both System 1 and System 2, though when they published The Enigma of Reason in 2018, this was all quite theoretical. Today, Transformers would seem to implement precisely such a mechanism. The very same model, trained to do the very same thing—actively predict the future given the past, including both information from the outside world and one’s own train of thought—can behave like either System 1 or System 2.

If an immediate response is called for, the model will do its best, making use of any learned (or “instinctive”) heuristics in the network, at the price of being reflexive, vulnerable to biases and “gotchas.” However, with time to think things through, the same neural net can generate its own intermediate results, plans, speculations, and counterfactuals, resulting in a potentially much higher-quality, though also much more effortful, reasoned response.

Taking this hypothesis further, System 1 is “unconscious” for the fairly obvious reason that there’s no time for a train (or chain) of thought—only for the transient activity of a neural-activity cascade en route from stimulus (“Q: …”) to response (“A: …”). By contrast, we are conscious of System 2 processing precisely because all of those intermediate results must go into the “context window” along with the “prompt”—that is, the input from the outside world.

Being self-aware is, after all, about having access to your own mental states, being able to perceive them while knowing that you yourself are their source, and being able to reason further about them, engaging in acts of “metacognition” or “thinking about thinking.” In a sense, all System 2 or chain-of-thought activity is metacognitive, since it involves thinking about your own thoughts, and doing so with an awareness that they come from “inside the house.” I’m sweeping the dubious unity of the self under the rug here, though the very existence of something like a context window, by virtue of its single-threadedness, may be exactly what produces that autobiographical sense of a unified self that experiences the world as a sequence of events in time and is capable of introspective thought.

Our conceit that System 2 is uniquely human, or even peculiar to big-brained animals, is likely misplaced, though. In fact, ironically, the greatest advantage of having such a big brain may lie in our ability to do many things quickly and in parallel, using System 1, that would otherwise require step-by-step System 2 processing. Recall the tradeoff made by Portia spiders, who can scale their own (not inconsiderable) intellectual cliffs simply by taking their time and proceeding in many tiny steps. Presumably, they use something like chains of thought—and long ones. Their mental footholds may need to be close together, but they are patient.

Hive Mind

Portia are certainly clever— but they may not be such outliers among invertebrates. In his 2022 book The Mind of a Bee, 5 zoologist Lars Chittka draws on decades of bee-cognition research to paint a very different picture than that of Jean-Henri Fabre, who insisted on the “machine-like obstinacy” of insects—a claim amplified by Daniel Dennett in referring to their “mindless mechanicity” 6 and by Douglas Hofstadter in invoking their “sphexishness” (see chapter 5).

A honeybee, Apis mellifera, carrying pollen

In reality, Fabre, a lifelong close observer of actual bugs, wasn’t nearly as unequivocal as these later theorizers, cautioning that “the insect is not a machine, unvarying in the effect of its mechanism; it is allowed a certain latitude, enabling it to cope with the eventualities of the moment. Anyone expecting to see […] incidents […] unfolding […] exactly as I have described will risk disappointment. Special instances occur—they are even numerous—which are […] at variance with the general rule.” 7

This turns out to be true even of the behavior that inspired the word “sphexish.” As a careful commentator observed in a 2013 reappraisal, “digger wasps very often do not repeat themselves endlessly when the cricket test is done. After a few trials many wasps take the cricket into their burrow without the visit.” 8

Chittka and colleagues have documented an astonishingly sophisticated array of behaviors among bees, beyond the common sense not to get stuck in endless loops. These aren’t just genetic libraries of canned responses, either; bees can readily learn, generalize, and even, to a degree, reason. A handful of examples include:

In this experiment, bumblebees were trained, in several steps, to pull a string to access a sugary reward. Although only a small minority of untrained bumblebees could learn this “unnatural” task spontaneously, many more could do so by observing trained demonstrators from a distance, suggesting that bumblebees are both more intelligent than generally assumed and equipped for social learning; Alem et al. 2016.

- Bees can problem solve when building their hives, adapting their construction and repair techniques to changing circumstances (including weird ones never encountered in the wild). While they are born with some innate nest-building capability, they develop expertise by observing and learning from each other.

- Bees can be trained to recognize arbitrary shapes and patterns, and will invest extra time into spotting differences when incentivized to do so by positive or negative rewards. (They need to invest time to make a nuanced discrimination because, like Portia, their small brains are limited to scanning stimuli sequentially. 9 )

- Bees can generalize choice tasks, for instance associating cues across different sensory modalities, learning to distinguish novel symmetric versus asymmetric shapes, and even distinguishing among human faces (a skill that eludes the one percent or so of humans with face blindness, or “prosopagnosia”).

- Bees have a long working memory, which they can use to solve matching-to-sample tasks (“choose the same one for the reward” or “choose the different one for the reward”). They can exhibit self-control, if required in order to obtain a delayed reward, with delays of six, twelve, twenty-four, or thirty-six seconds.

- After a bad experience with a camouflaged artificial crab spider, bees will avoid the fake “flowers” associated with them, though given sugary incentives inside, they will carefully scan these suspect flowers from a distance before skittishly alighting on them.

When a robotic “crab spider” temporarily (but harmlessly) immobilizes a bumblebee, the bee will show far greater caution in approaching similar “flowers” in the future; Ings and Chittka 2008.

Neuroscientist Christof Koch has gone as far as to write, “Bees display a remarkable range of talents—abilities that in a mammal such as a dog we would associate with consciousness.” 10

That we have found these properties specifically in bees is likely just a function of where we have looked. They’re charismatic insects, and especially easy to study because of their hive-dwelling and nectar-collecting lifestyle. But we know that jumping spiders and wasps are clever too. 11 What about dragonflies, praying mantises, and the zillion other bugs we’ve written off as mindlessly mechanical? It seems likely that quite a few of these insects are better described as possessors of a scaled-down “rational soul” than as preprogrammed automata.

In fact, fully instinctual preprogramming is extraordinarily expensive, from an evolutionary standpoint. It requires that a behavior be hardcoded in the genome, which is replicated in every cell of an animal’s body. It also constrains learning to evolutionary timescales, which are painfully slow, foreclosing any possibility of adapting to local or temporary circumstances. Bees, by contrast, benefit from impressive feats of learning, despite a lifespan measured in weeks. Perhaps, for a creature with a brain, learning just isn’t that hard, and instincts are more of a fallback strategy in nature, for use only when really needed.

In this light, Mercier and Sperber’s “enigma of reason” no longer seems enigmatic. Reasoning with a big brain may be what happens when we predict by crunching away for a while using chain-of-thought and making greater use of introspection, but this doesn’t make it an unprecedented new trick, in evolutionary terms. On the contrary, small-brained animals have—by necessity, and because of their small brains—probably been doing it for hundreds of millions of years.

Although comparisons between brain sizes and neural-network model sizes must be taken with a generous helping of salt, it’s worth asking how large a Transformer model needs to be to reliably exhibit System 2 behaviors using language. The usual narrative, based on large language model scaling laws, maintains that one needs billions of parameters, at a minimum, to generate coherent stories, answer questions, or perform reasoning tasks.

However, in 2023, Microsoft researchers overturned this assumption in a paper called “TinyStories: How Small Can Language Models Be and Still Speak Coherent English?” 12 They used a large model to create a corpus of stories using language typical three- or four-year-olds can understand, then pretrained small models on this corpus. Surprisingly, models with only ten million parameters, and only a single attention layer, could reliably understand and reason about these multi-paragraph stories. Very crudely, these figures are in the ballpark of a bee brain. 13

If bees, spiders, and small Transformers can do so much with so few neurons, what on Earth are we doing with so many? The answer we’ve already touched upon is parallel processing. A bee must fly over a field of flowers, attending to one flower at a time. Our massively parallel visual system, though, allows us to take in the entire field in one glance, and spot (say) the red ones in a fraction of a second. The way they seem to pop out is a function not only of a much larger retina, but also of correspondingly replicated columns of visual cortex, all of which can “look” at the same time.

Keep in mind that “looking” is an active and predictive process, not just a feedforward flow of information, so if you are trying to spot red flowers, or blue ones, or ones of a particular shape, each cortical column knows that, and will be on that job. If it sees the right kind of flower, it will signal that vigorously, like a kid raising their hand in class. It will also use lateral inhibition to try to suppress the less behaviorally relevant responses of neighboring columns, and “vote” for eye movement to better resolve anything that looks relevant enough to foveate.

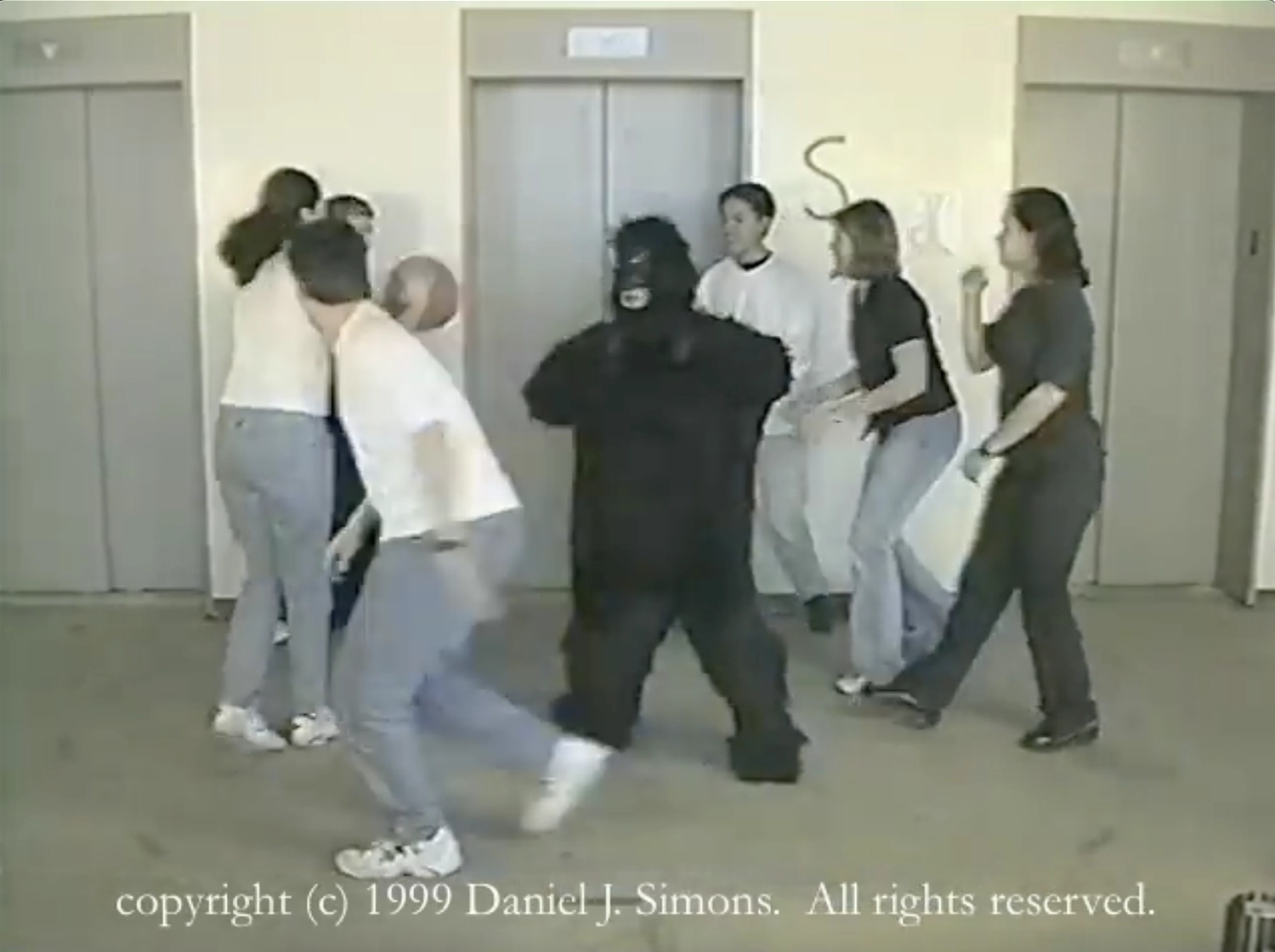

Upon initial viewing, most people are oblivious to the figure in the gorilla suit at the center of this freeze-frame from the famous “Gorillas in Our Midst” video (viewable here), Simons and Chabris 1999.

A famous illustration of the active—and thereby selective—quality of vision involves a short video of a group of students in white or black shirts throwing and catching a basketball. 14 As an experimental subject, you’re told to count the number of times someone in a white shirt makes a pass. It takes some concentration, but it’s not hard to do. At the end of the video, you’re asked whether you noticed anything strange; most likely, you will answer “no.” But as it turns out, a person in a gorilla suit made their way among the ball throwers, stood right in the center, beat their chest, then walked offscreen. It can be hard to believe this actually happened without your noticing it, but … no part of your visual cortex was looking for gorillas, or for “anything strange.” Your cortex was busily counting passes. Even if a cortical column somewhere raised its hand to say “umm … ,” it was likely ignored.

While such “inattentional blindness” may cause us to fail to notice the gorilla, the advantage of massively parallel human vision over more serial bee vision may seem obvious in a foraging context. After all, finding flowers in a field seems like a perfect instance of a highly parallelizable task.

And it is, but not quite in the right way to favor big brains. Consider: each flower contains only a tiny drop of nectar. You may be able to see them all at a glance, but you would still need to move your vastly larger than bee-sized body from one flower to the next to actually harvest them. The energy in their nectar wouldn’t even cover the cost of movement, let alone the energetic demands of that glucose-hungry parallel processor between your ears—which would, incidentally, be idling (or at least not on the foraging job) most of the time.

Your brain, in other words, is massively overprovisioned for the task. A bee, being orders of magnitude smaller, harvests a surplus of energy using its serial approach; its sensory and motor systems are far better matched both to each other and to fields of flowers.

In the Cretaceous Period (145–66 million years ago), some bees and other insect species did massively parallelize, but by forming hives rather than by scaling up their individual brains. The hive reproduces as a unit, and comprises a superorganism—a classic instance of symbiogenesis. 15 Highly decentralized organization maintains the right balance between sensory and motor systems, allowing individual bees to sense and act independently. Yet they share both the calories harvested and information about where to find more, using their famous waggle dance. Imagine the hive as a giant octopus, with each bee like a sucker on the end of an invisible arm that can extend for miles. As a massively parallel processor and forager, this superorganism is exquisitely versatile and efficient.

A bee communicating a foraging location to the hive with the waggle dance

Taking the more centralized approach to scaling intelligence by growing a bigger individual brain and body provides the comparative advantage of speed, or, rather, latency. A single body can execute a quick coordinated movement, with the parallel processing of many neural assemblies “voting” in a fraction of a second. Compare this with the hours it can take a bee to make a round trip and dance for her fellow bees. If you’re eating plant products, a timescale measured in hours is fine. If you’re eating other animals, you and your prey will enter a cybernetic arms race driven by smart coordinated action at speed, as described in chapter 3. Moreover, bigger brains require bigger bodies to carry them around, and bigger bodies require bigger brains to coordinate their movements, so the amount of muscle (or meat) available in a single animal also increases as this arms race escalates. The steaks go up!

Ironically, lightning-quick cybernetic predation is the essence of System 1 thinking. It doesn’t leave time for reflection. (That’s why early to mid-twentieth century cybernetic systems endowed only with low-order prediction were good enough for warfare applications like missile guidance.) On the other hand, nothing prevents big-brained predators from using premeditated cunning to plan their attack on unsuspecting prey, as Portia does, providing an ongoing advantage for System 2 thinking. 16

And, of course, among highly social big-brained animals—us, most of all—friendly cooperation, politics, and mating put a special premium on slower thinking. As anyone knows who has come up with a witty retort long after the moment for it has passed, 17 speed is relevant in social interactions, but even rapier-like wit doesn’t need to operate on a timescale measured in hundredths of a second, as required in an actual swordfight. During argument, deliberation, bargaining, group planning, teaching, learning, or mate wooing, taking a few seconds to follow a chain of thought before opening your mouth is usually a fine idea.

Our combination of fast parallel and slow serial processing is one take on psychologist Jonathan Haidt’s characterization of people as “90% chimp, 10% bee,” 18 although chimps are themselves quite social, hence capable slow thinkers. The new element humans bring to the table is a highly developed sensory-motor modality ideally suited to both internalized and socially shared chains of thought: the modality of language.

Modalities

It may seem puzzling to refer to language as a modality. From a machine-learning perspective, though, that’s exactly what it is. Chatbots and simpler models like Word2Vec are trained on text, not on pixels, sounds, or other sensory signals.

Of course we don’t perceive text directly. We recognize text via other modalities, including hearing (spoken), vision (written), and even touch (Braille or finger-writing). In conversation, hearing and vision are often used in concert, with gestures, facial expressions, and environmental cues playing important roles, especially during language learning.

Nonetheless, there is also neuroscientific justification for thinking of text as a sensory modality, albeit an indirect and culturally acquired one. In literate humans, a specific part of the brain—the “visual word form area” (VWFA), near the underside of the left temporal lobe—develops to perform reading tasks, that is, learns to convert visual input into text. High-level neural activity in this area can then serve as a specialized textual modality for any other brain region that wires up to the VWFA.

Seen this way, vision is not fundamentally more “real” as a sensory modality than text. Recall that raw visual input is a hot mess—nothing like the stable “hallucinated” world you think you see. Using predictive modeling, the visual system solicits and processes feedback from the eyes to create a kind of diorama that other parts of the brain can then interrogate. As far as those regions are concerned, it is this stately diorama, not the raw, jittery input from the eyes, that comprises the visual umwelt. The additional processing that renders visual input as text is simply another such transformation, sifting words out of stabilized images to create a textual modality.

The VWFA is a remarkable testament to the cortex’s flexibility and generality. Genes may support or predispose us to develop certain capabilities via “pre-adaptation,” but it’s not clear how that could be the case for reading and writing—it’s too recent. Keep in mind that humans have been around for hundreds of thousands of years, while the first known writing is only a few thousand years old.

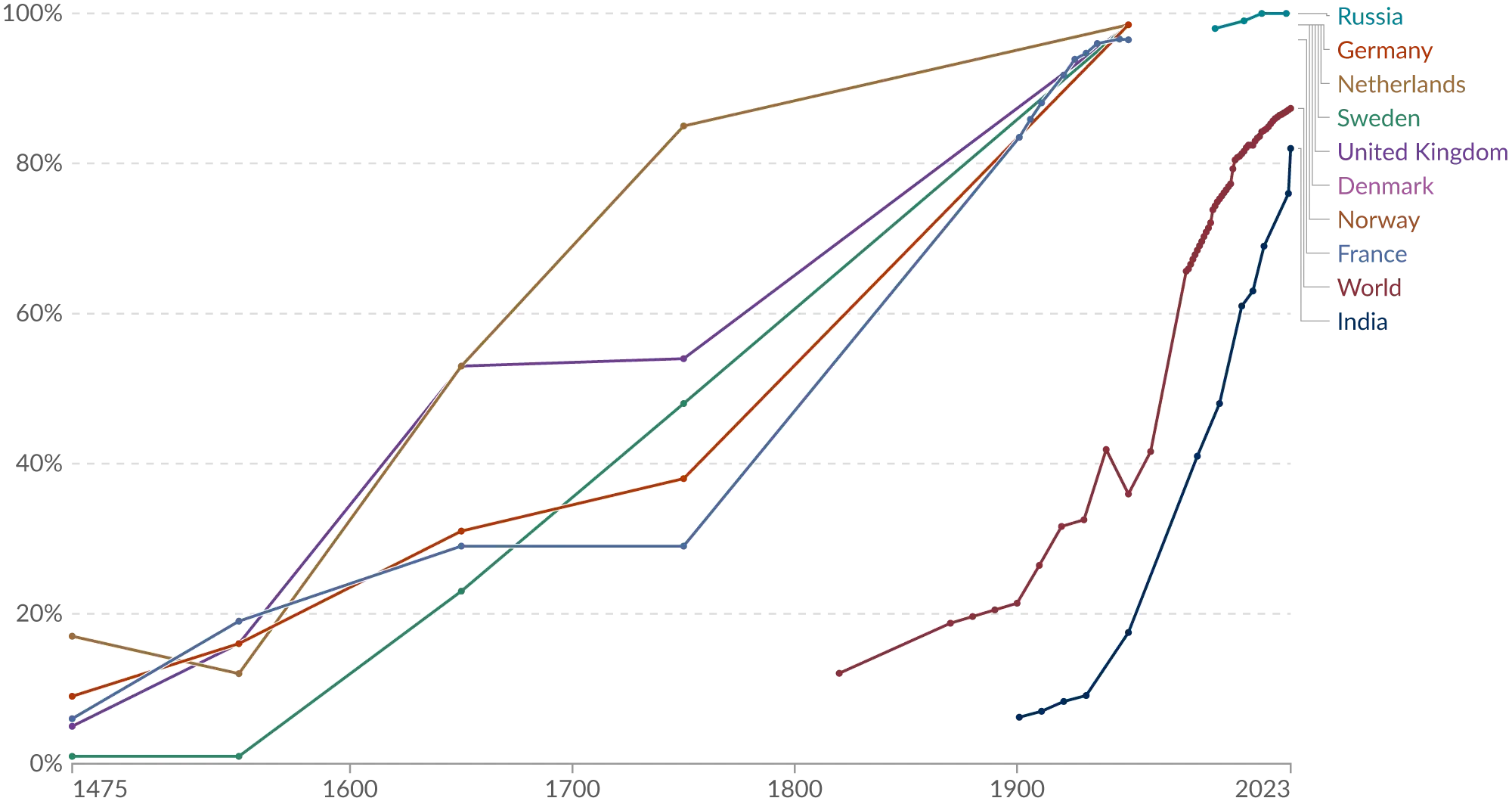

Before objecting that a few thousand years might be enough for an evolved trait to emerge, consider that even after the invention of writing, literacy remained confined to a tiny proportion of the human population—professional scribes, clergy, and ruling elites—until just a few generations ago. There are good odds that at least some of your great-great-grandparents were illiterate.

Worldwide literacy data, dating back to 1475 in a few countries with unusually high historical literacy rates; Roser and Ortiz-Ospina 2018.

We can only conclude that the VWFA is an ordinary bit of brain that just happened to be in the right place (in terms of connectivity) at the right time. In modern, literate humans, it has established a symbiotic functional relationship with other brain areas, using a generic predictive-learning procedure to support a valuable culturally evolved trait. 19 Thus, the VWFA highlights the way highly specialized sensory processing—a new modality, in effect—can be learned, opening up the space of modalities to high-speed cultural evolution.

A similar story may apply not just to reading, but even to language itself. Despite the common refrain among linguists that our brains come with a built-in “language organ,” 20 it isn’t at all clear that we are genetically pre-adapted specifically for language, nor has the search for universal grammatical or syntactic properties shared by all human languages been successful. 21 Insofar as human genetics support language learning to a greater degree than in our primate cousins, it seems increasingly likely that this support consists of a combination of enhanced sequence learning in general 22 and greater pro-sociality. 23 If so, other manifestations of sequence learning, especially ones that reinforce sociality, such as dance and music, may well have predated complex language. 24

Relative to vision, smell, and other modalities, language has some unique properties. Whereas ordinary senses are for perceiving the world broadly, language is purely for sensing each other. It has wonderfully reflexive, self-referential qualities (hence my ability to write about it in this book, and your ability to make sense of what I’m writing—I hope). In providing us with a mind-reading mechanism, language must allow for communication about any aspect of our umwelt, including our models of ourselves and others—which necessarily includes a model of every other sensory modality and motor affordance, both our own and others’. 25 That same infinite, recursive hall of mirrors described in chapter 5 for internal states applies to our linguistic models of the external world too.

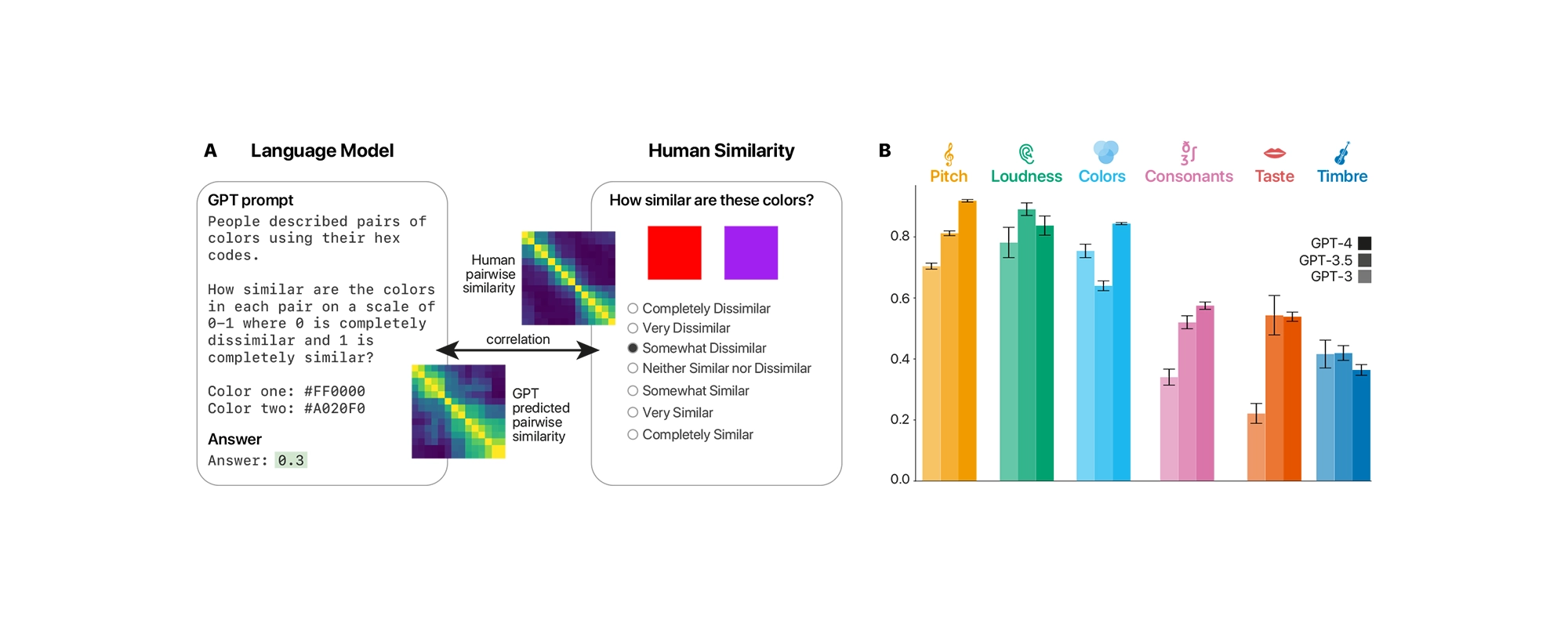

A 2023 paper entitled “Large Language Models Predict Human Sensory Judgments Across Six Modalities” nicely illustrates this. 26 The paper’s authors ask a large language model to estimate the similarity between pairs of sensory stimuli based on textual descriptions. These modalities include pitch, loudness, colors, the sounds of consonants, tastes, and musical timbres, described either in quantitative terms (decibels or Hertz for sounds, numerical red, green, and blue component values for color) or by name (“quinine,” “artificial sweetener,” etc. for taste; “cello,” “flute,” etc. for timbre).

Language models can be asked to rate perceptual color differences by giving them numerical red, green, and blue component values (encoded here in the commonly used hexadecimal format #RRGGBB, with values ranging from 00 to FF, or 255 in decimal). Similar approaches across other modalities can be used to calculate correlations with human responses. These correlations are generally both high and improve with model size; Marjieh et al. 2023.

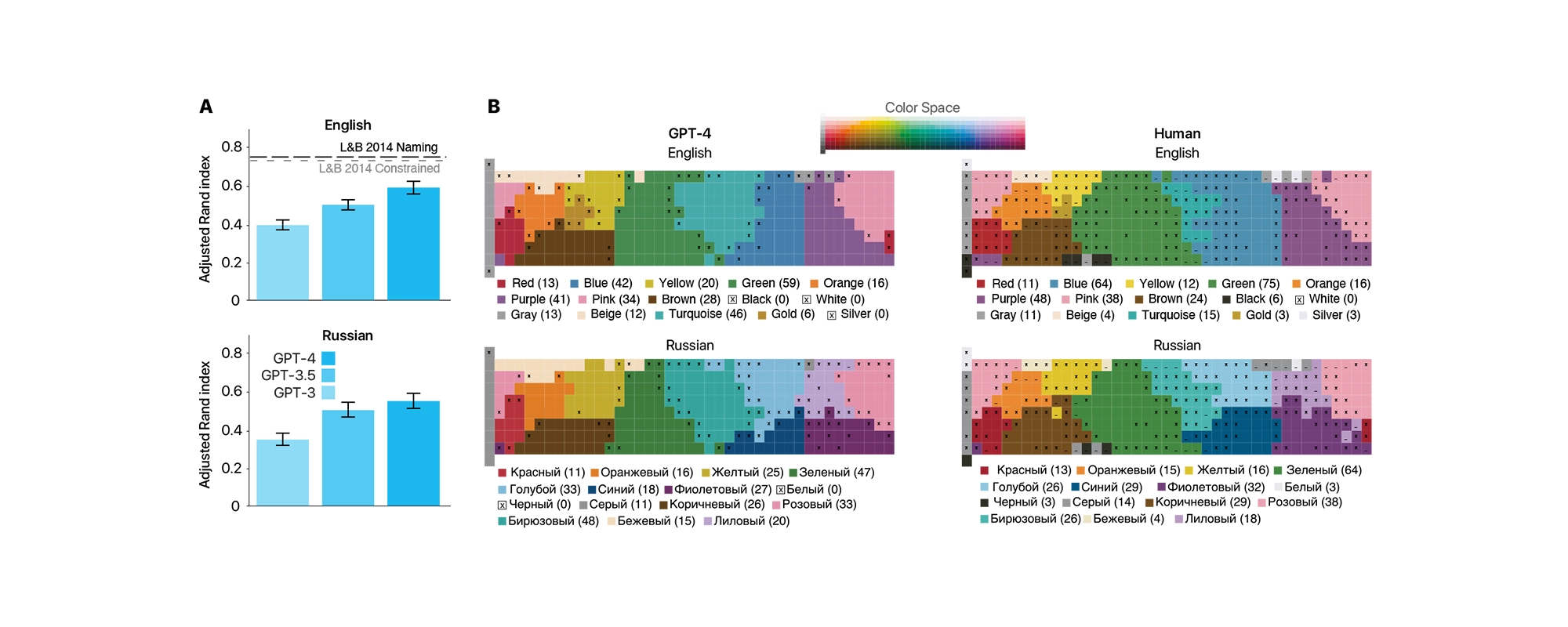

Language models can name colors. When doing so, they reflect the way different languages vary in their color naming, as shown here for English and Russian (which differ especially with regard to blue); Marjieh et al. 2023.

Despite being trained only on text, the model’s responses mirror human responses to an astonishing degree. 27 As, on reflection, they should: the goal of pretraining is to predict human responses to any textual question or prompt. The information needed to make these predictions can be found in a large enough training corpus, because we talk about pretty much everything we experience, including all that we perceive, think, and feel—or at least, everything accessible to the interpreter.

Pure Speech

Despite these arguments, I used to worry that training a large model on text might be cheating. We only learn how to read and write after mastering speech; I wasn’t sure a Transformer could learn language without starting from a transcription—where, in effect, the hard work of turning sound into symbols had already been done. AudioLM convinced me.

The project began when a team I managed at Google Research developed a neural net for audio modeling called SEANet, then turned it into an audio compressor called SoundStream in 2021. 28 SoundStream used a small Transformer to turn auditory waveforms into token sequences, making use of the observation that good prediction allows for powerful compression. Since Transformers were the best predictive models available, and they hadn’t previously been used to compress raw audio, we were pretty sure SoundStream would set a new sound compression record. It did.

Then, in 2022, the team created AudioLM by inserting a second, much beefier Transformer, like those used for large language models, between SoundStream’s encoder and decoder. 29 They pretrained this large audio token model on the soundtracks of YouTube videos featuring people speaking in English.

The results were amazing, and a bit eerie. After pretraining on the equivalent of about seven years’ worth of YouTube audio, the model could do a convincing job of replying to simple prompts or questions. In one of the first exchanges I had with AudioLM, I asked it, “What’s your favorite sport?” and generated three alternative replies (we were using a nonzero temperature setting):

AudioLM sample

“I like baseball!”

“I don’t know? I like football.”

“I play basketball.”

Curiously, all three replies were in children’s voices. On reflection, this made sense. This was a pretrained model without any fine-tuning or additional reinforcement learning, so it was strictly in the business of giving high probability predictions of the future (its response) given the past (my question). You just don’t ask adults a question like, “What’s your favorite sport?” It’s a question for kids. So, it responded with a likely answer in a likely voice. For us humans, predictions must be conditional on our individual life history, from the physiology of our vocal tract to our school experiences on sports teams, but a model pretrained on a broad range of human voices and experiences isn’t constrained in the same way. In its protean state, the model “is” a broad sample of humanity, not a single human.

With further improvements to the model architecture, AudioLM, now called SoundStorm, 30 could stream long replies and continue multi-speaker dialogues. Two team members prompted it with,

“Where did you go last summer?”

“I went to Greece. It was amazing.”

The model seamlessly improvised a continuation of the conversation, alternating between perfect renditions of their voices (and yes, the deepfake potential here was immediately worrying):

SoundStorm sample

“Oh, that’s great. I’ve always wanted to go to Greece. What was your favorite part?”

“Uh, it’s hard to choose just one favorite part. But … yeah, I really loved the food. The seafood was especially delicious—”

“Uh huh—”

“—a-and the beaches were incredible.”

“Uh huh—”

“We spent a lot of time swimming … uh, sunbathing, and exploring the islands.”

“Oh, that sounds like a perfect vacation. I’m so jealous.”

“It was definitely a trip I’ll never forget.”

“I really hope I’ll get to visit someday.”

It wasn’t scintillating dialogue, but it was entirely believable. The nuances of the voices, their accents and mannerisms, were so perfectly reproduced that even those of us who know those two team members well weren’t able to guess which lines were real and which were synthesized. The model renders breaths, disfluencies, sounds of agreement, people speaking over each other—in short, all of the features that characterize actual dialogue, as opposed to the stylized kind you read in novels.

The team eventually made AudioLM multimodal by adding text too, creating AudioLLM. Just as translation between languages is possible in a large language model with little or no explicitly translated training data, only a small amount of transcribed speech was needed to allow AudioLLM to establish the relationship between speech and text. The correlations inherent to speech are enough to form internal representations roughly analogous to phonemes, so in theory (and especially in a language with sensible spelling, like Spanish) all it would take is a paragraph or so of sounded-out text to map each letter to a phoneme, much as the Rosetta Stone sufficed to form a mapping between two written languages. In fact, given the higher-order correlations and analogies between text and speech, I’m sure that with enough pretraining data, an AudioLLM-style model could learn those analogies with no sounded-out text at all.

What was most interesting about the original AudioLM, though, was its ability to learn and understand language from pure analog sound, without text or any other modality. The model was given no rules, assumptions, or symbols. It was a striking refutation of the longstanding hypothesis that language learning requires genetic preprogramming.

The father of twentieth-century linguistics, Noam Chomsky, has made an influential pseudo-mathematical “poverty of the stimulus” argument, asserting that the amount of speech babies are exposed to can’t be nearly enough for them to learn the grammar of natural language without a strong statistical prior. 31 Such a strong prior, a “universal grammar” common to all human languages, would reside within the hypothetical, genetically preprogrammed “language organ.” GOFAI pairs well with this idea, since it implies that the way to get a computer to process language—and perhaps to reason—is to explicitly program in this universal grammar, thus restricting the role of language learning to the simpler task of locking in the language-specific “settings.”

Chomsky’s argument was already in trouble before LLMs, for a variety of reasons. 32 As mentioned earlier, human languages differ in so many ways that the search for a supposedly universal grammar has been unsuccessful. Neuroscience, too, has offered little in support of the thesis. The “interpreter” in the left hemisphere does specialize in language, but like any other part of cortex, its specialization appears to be a function of its connectivity, not of any “language organ” fairy dust sprinkled in that particular spot.

The way babies and children learn language—beginning by paying close attention to mom or dad, looking where they look or point, pointing in turn, mimicking sounds, learning to take turns, acquiring a few salient words, starting to combine them into stock phrases—also seems inconsistent with the use or acquisition of a formal grammar. Babies are quick and wonderful learners, but that doesn’t mean that they are little linguists, or scientists, or any other kind of “ists.”

AudioLM puts a final nail in the coffin of “poverty of the stimulus.” While all machine learning models have some statistical priors, Transformers are so generic that they can learn about any kind of sound, including music, birdsong, or whale song; 33 for that matter, they can learn the crackle of radio telescope data, or weather patterns, or sequences of pixels in images. Yet they can learn human language—from how vocal tracts sound, to grammar, to the meanings of words, to social appropriateness and turn-taking, to the nuances of breathing and other non-speech sounds—from nothing but seven years’ worth of random YouTube audio of people talking.

Before you object that children learn how to speak at an equivalent level in fewer than seven years, and aren’t constantly listening to speech over that period, consider how much easier they have it: their learning is scaffolded by many other sensory modalities, and in the beginning their parents and siblings repeat the same words over and over in consistent voices, pointing to familiar things, making eating gestures, and so on. That language can be learned at all without any of this scaffolding, with no interaction, no curriculum, and no rewards, is remarkable.

None of this implies that language is entirely arbitrary. It has to begin with sounds human bodies can easily make and hear, which is already a significant constraint. It must also be reasonably efficient and not overstrain our cognitive capacities (e.g., by insisting that a common word be produced by rapidly clicking the tongue thirty-nine times in a row). Indeed, the historical record shows clear evidence that languages with gnarly features tend to get streamlined over time, making them increasingly user-friendly. 34 The statistical regularities involved, however, have little to do with formal grammar and more to do with convenience, along with constraints on memory, the vocal tract, and the distinguishability of sounds.

Babel Fish

While there is no universal grammar, there certainly are plenty of statistical relationships between languages—otherwise, the language translation experiments described in chapter 8 wouldn’t work. Some correlations stem from human physiology and cognitive constraints, and some from the common ancestry of languages. Many languages are closely related, as with the Romance languages, and others more distantly, as with Indo-European. Possibly, all languages share a common ancestor, though this remains uncertain. 35

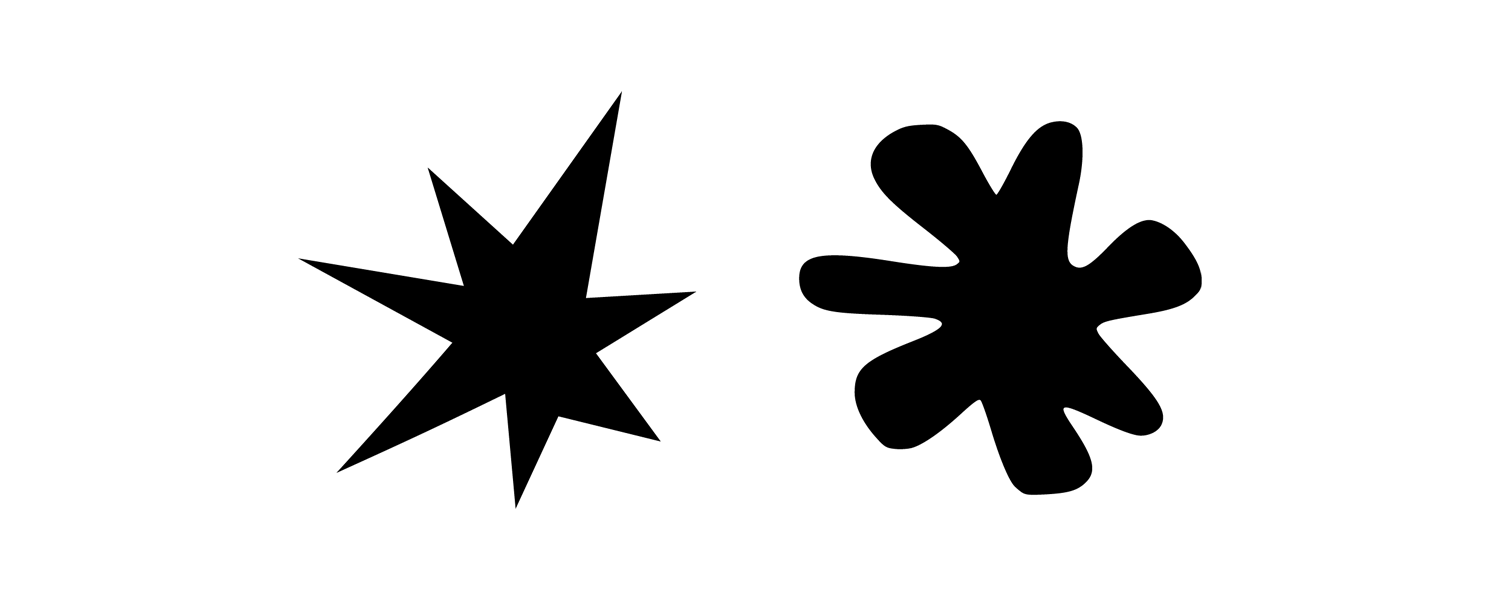

Onomatopoeia and synesthesia play a part, too. It’s unsurprising that “meow” and “splash” sound similar in many languages, even when the words have no common ancestor. Less obviously, quirks of the relationships between sensory representations in the brain also lead most humans to make the same choice when deciding how to associate the nonsense words “bouba” and “kiki” with two shapes, one of which is spiky, and the other rounded. (Yes, “kiki” is the spiky one.) This classic result in psychology, dating back to the 1920s, shows how aspects of synesthesia, a seemingly arbitrary mental association between distinct stimuli of different modalities that some people profess to experience strongly, have a universal neural basis. 36 Whether because those associations aren’t as arbitrary as they seem, or because they are implicitly reflected in human languages, multimodal large language models reliably exhibit the bouba/kiki effect too. 37

The classic “kiki” (left) and “bouba” (right) shapes

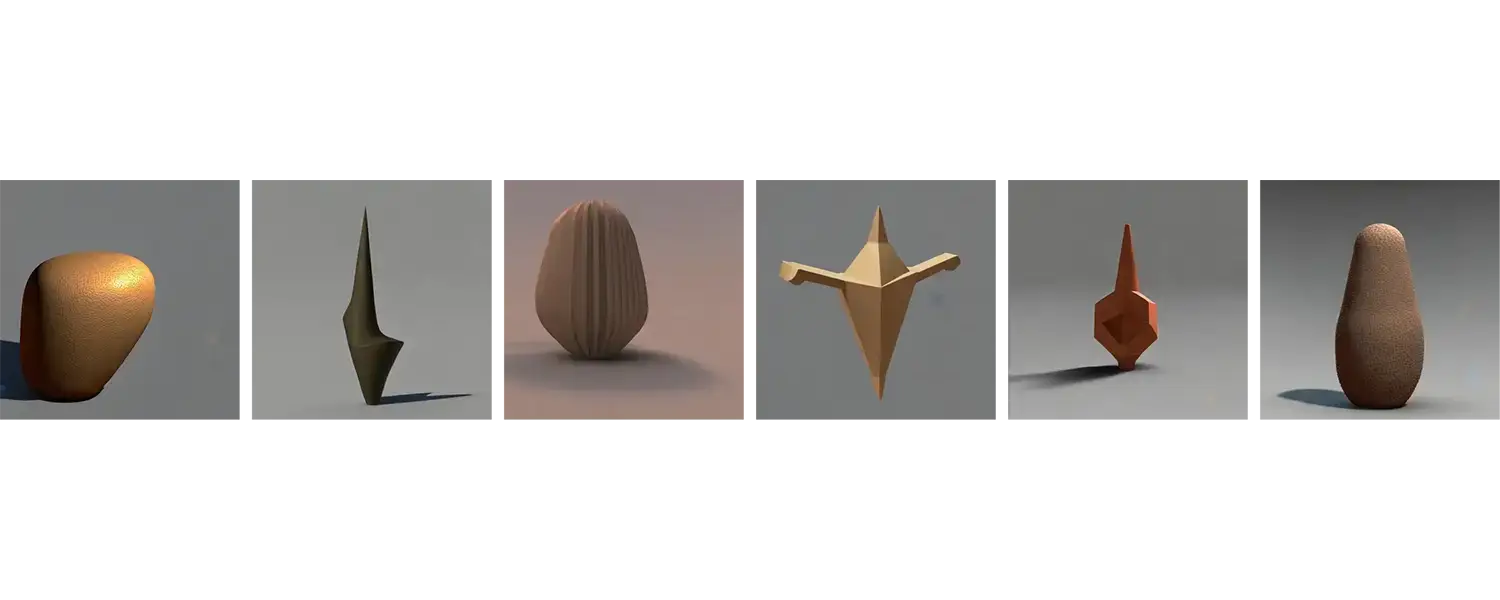

Random generations from the Stable Diffusion model using the prompt “A 3D rendering of a _____ shaped object,” where the blank was filled in as: (scroll to reveal)

Most of all, languages are all related because they are all about us and the world, and we are all basically the same, and we all live in the same world. The real universal grammar is actually semantics. I’m fairly certain that, if a tribe of people were somehow isolated from everyone else at birth and developed language de novo on their own island, an AudioLM model pretrained on large enough amounts of their speech and, independently, on English, would be able to freely translate between the two languages without any need for a Rosetta Stone.

In The Hitchhiker’s Guide to the Galaxy, 38 a surprisingly profound satire beloved by generations of twelve-year-old nerds, British humorist Douglas Adams describes a “mindbogglingly useful” sci-fi creature, the “Babel fish.” “Small, yellow, and leech-like,” when you put one in your ear, “you can instantly understand anything said to you in any form of language.”

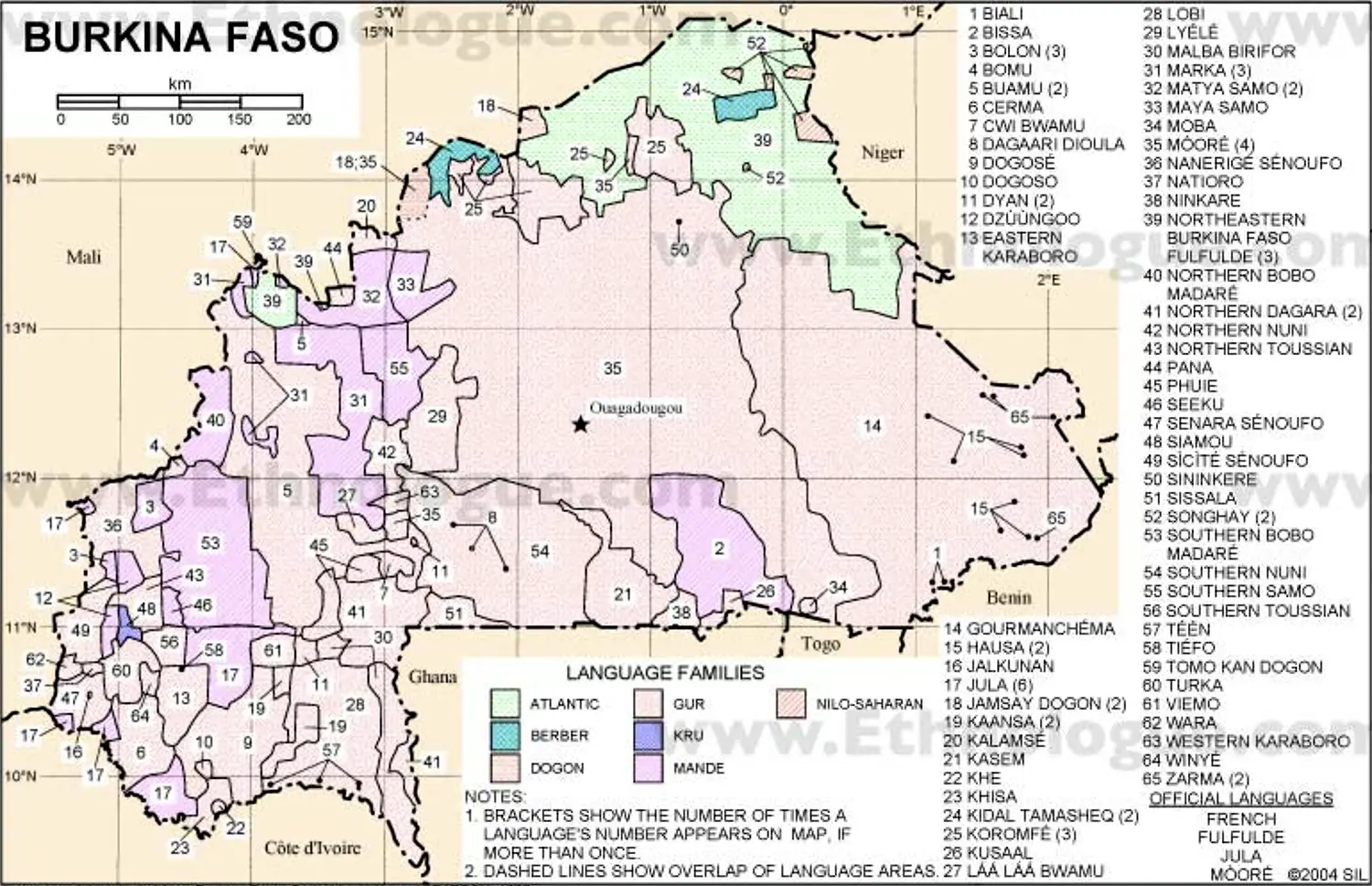

Such a technology would indeed be mind-bogglingly useful, even if limited to the seven thousand or so languages spoken by Earth’s humans today. 39 For one, language barriers are an enormous impediment to socioeconomic justice for many of the world’s poor. For instance, in Burkina Faso, a landlocked West African country, about seventy languages are spoken, sixty-six of which are indigenous. As of 2024, the literacy rate is about forty percent. While the government uses French (decolonization dates back only to 1960), that former imperial language is only spoken by a small minority of the population. 40

A map (doubtless incomplete) of the languages spoken in Burkina Faso, a country roughly the size of Colorado

In such countries, a Babel fish could improve people’s prospects enormously, giving them access to information, employment, services, education, and development opportunities that are out of reach today. Moreover, because a real neural net–powered Babel fish can operate in full duplex mode, and could even offer tutoring and participate in conversation, it could aid in the preservation of indigenous cultures and their languages.

Keep in mind that poorer countries have far younger populations and higher birth rates than more developed countries; as countries become richer, their birth rates inevitably drop, but due to the time lags in these dynamics, we should understand that the populations of countries like Burkina Faso, already numerous, will comprise a far greater proportion of humanity in the latter part of the twenty-first century than they do today. This is humanity’s future. 41

If we begin thinking about humanity as a superorganism, what is at stake here is the scale, diversity, and cohesion of our collective intelligence. Without nurturing the diversity of its people and cultures, we reduce the value each has to offer to the others, and the potential for hybridity, which is critical to cultural innovation and development. On the other hand, without scale, collective intelligence is impoverished; it’s difficult for an isolated population or a backwater to flourish.

There is a sweet spot, where local connectivity (in cultural terms, tradition) is strong enough to provide real diversity yet there is also enough longer-range connectivity to share knowledge, capability, and resources. The cortex embodies that balance, with dense connectivity within cortical columns and long-range wiring to bring the benefits of scale. The abundant cultural and economic productivity of the Silk Roads may have been achieved through a similar balance. 42 For many centuries, highly active trade networks linked dozens of major cities and thousands of smaller settlements across Eurasia, each with strong and diverse local cultures, yet also benefiting from scale.

James Evans’s Knowledge Lab at the University of Chicago has found evidence of the same kind of sweet spot in the more abstract networks of collaboration among academics. Scientific advances happen when robust, tightly interconnected research communities are also in contact with each other, combining local depth with wider hybridity. 43

Today, we’re simultaneously under- and over-connected. Young people in places like Burkina Faso remain isolated, while at the same time cultural and linguistic homogeneity threatens to erase much of the world’s rich human diversity, just as the genetic monocultures of industrial farming threaten biodiversity. Linguistically, the problem stems from the fact that the seven thousand or so languages spoken on Earth follow a frequency distribution that is, as a statistician would put it, very long-tailed, meaning that there are a large number of rare categories. The rarest, so-called “low resource” languages, are so critically endangered that one goes extinct every few months, with the death of its last living speaker. 44

While new languages used to differentiate and coalesce at a comparable (or higher) rate, increasing globalization has upset this balance. As a UNESCO report put it in 2003, “About ninety-seven percent of the world’s people speak about four percent of the world’s languages; and conversely, about ninety-six percent of the world’s languages are spoken by about three percent of the world’s people […]. Even languages with many thousands of speakers are no longer being acquired by children [… and] in most world regions, about ninety percent of the languages may be replaced by dominant languages by the end of the twenty-first century.” 45

Log-log plot using data from Ethnologue.com estimating the number of speakers of the top one thousand languages in the early 2000s; Zanette and Manrubia 2007.

This flattening of our cultural and linguistic ecology has accelerated since the early 2000s, when people began to move online en masse. English dominates the internet, with just a handful of other languages (not coincidentally, those associated with the former great empires) comprising the overwhelming majority of the non-English material. Data centers now contain orders of magnitude more textual material than existed in the entire world when the 2003 UNESCO report came out. On the other hand, most indigenous languages are virtually absent from this vast digital landscape.

With unsupervised sequence models, building a real Babel fish—and more—has become newly possible. It should not be thought of as a specialized “product,” since translation is an emergent capability in any model trained multilingually. A giant, multilingual version of AudioLLM could enable it to learn languages from field recordings; it could even invent written forms for languages that lack them. Dialects, accents, and regional variations could all be learned too. Using AI glasses, you could read Sumerian tablets or Aramaic manuscripts. A multimodal model could even dub video in real time, or generate an avatar of you able to instantly render gestures in any of the world’s sign languages.

The fly in the ointment is that long-tailed language distribution. Given the vast amount of data pretraining seems to require, how on Earth could a large model become competent at a regional Burkinabè dialect, let alone a critically endangered indigenous language known only to a handful of elders?

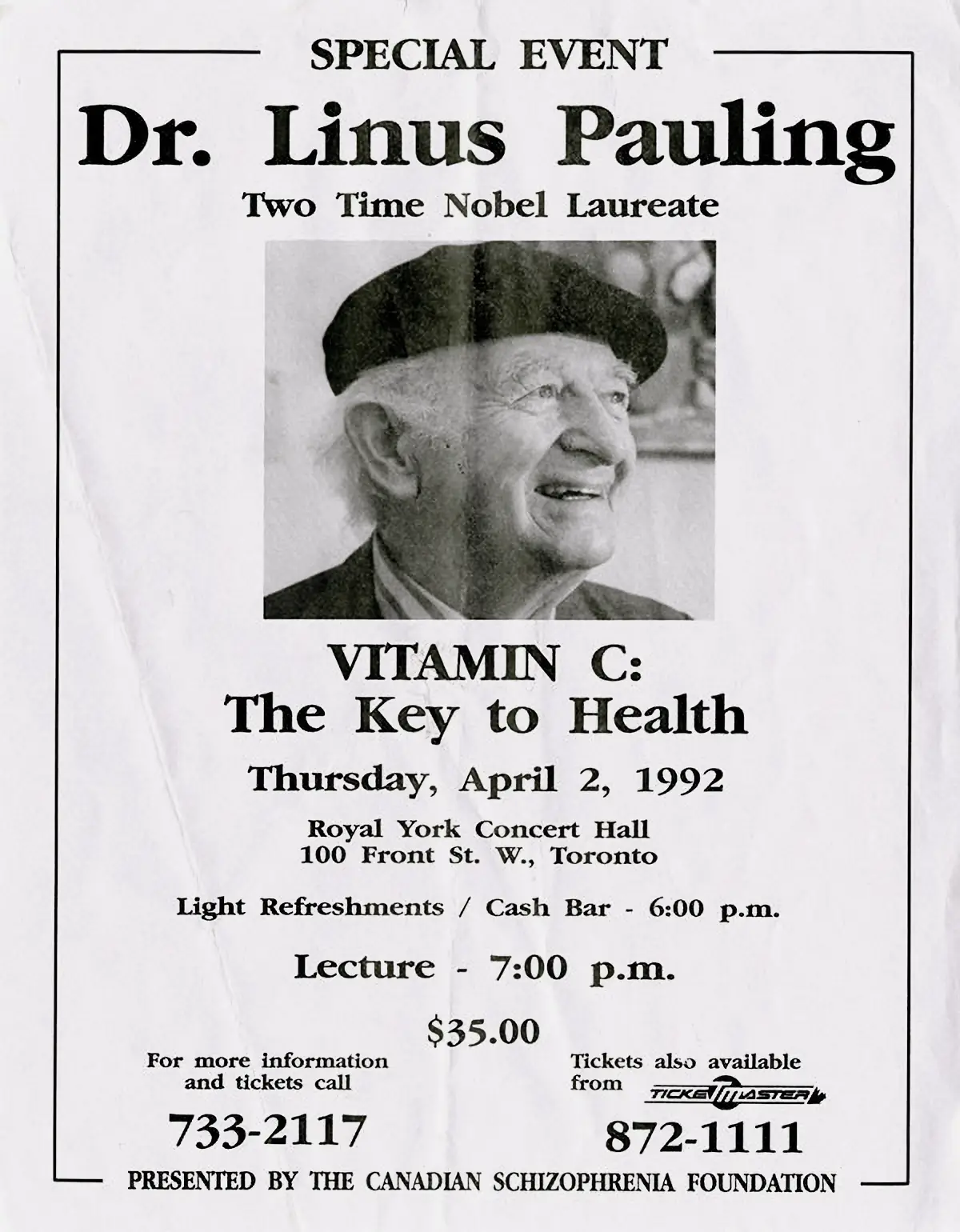

Testament

By 2021, my colleagues at Google Research had begun working in earnest on multilingual large language models, and they noticed something interesting: learning one language greatly accelerated the subsequent learning of another. For instance, pretraining on an enormous amount of English text, then continuing the pretraining on a comparatively tiny amount of, say, Portuguese produces a competent bilingual model. It may not be quite as good at Portuguese as at English, but if it were instead trained monolingually on Portuguese, it would need orders of magnitude more Portuguese content to reach an equivalent skill level.

This effect is so powerful that beginning with a multilingual model, then continuing to pretrain using only the text of the New Testament in a novel language produces a model likely to be capable of rudimentary translation to or from that novel language. 46 This is especially noteworthy because Christian missionaries have translated the New Testament into more than 1,600 languages—a pretty good start at working our way down the long tail.

For better or worse, missionaries have long been the vanguard of ethnographic linguistics. It takes real commitment for scholars from a rich country to travel far from home, embed themselves in a foreign culture, and learn enough of the local language and culture to translate a complex text, sometimes in the process devising a written form for a language that had previously only been spoken. Historically, religious faith and a desire to win converts has often provided the necessary motivation; that’s why the New Testament is the most widely translated text on Earth.

Today, much of this work is carried out by SIL Global (formerly the Summer Institute of Linguistics International), an evangelical Christian nonprofit founded in 1934 and headquartered in Dallas. SIL’s online database, Ethnologue, is by far the most comprehensive catalog of known languages, thanks to the organization’s thousands of field linguists embedded in communities all over the world.

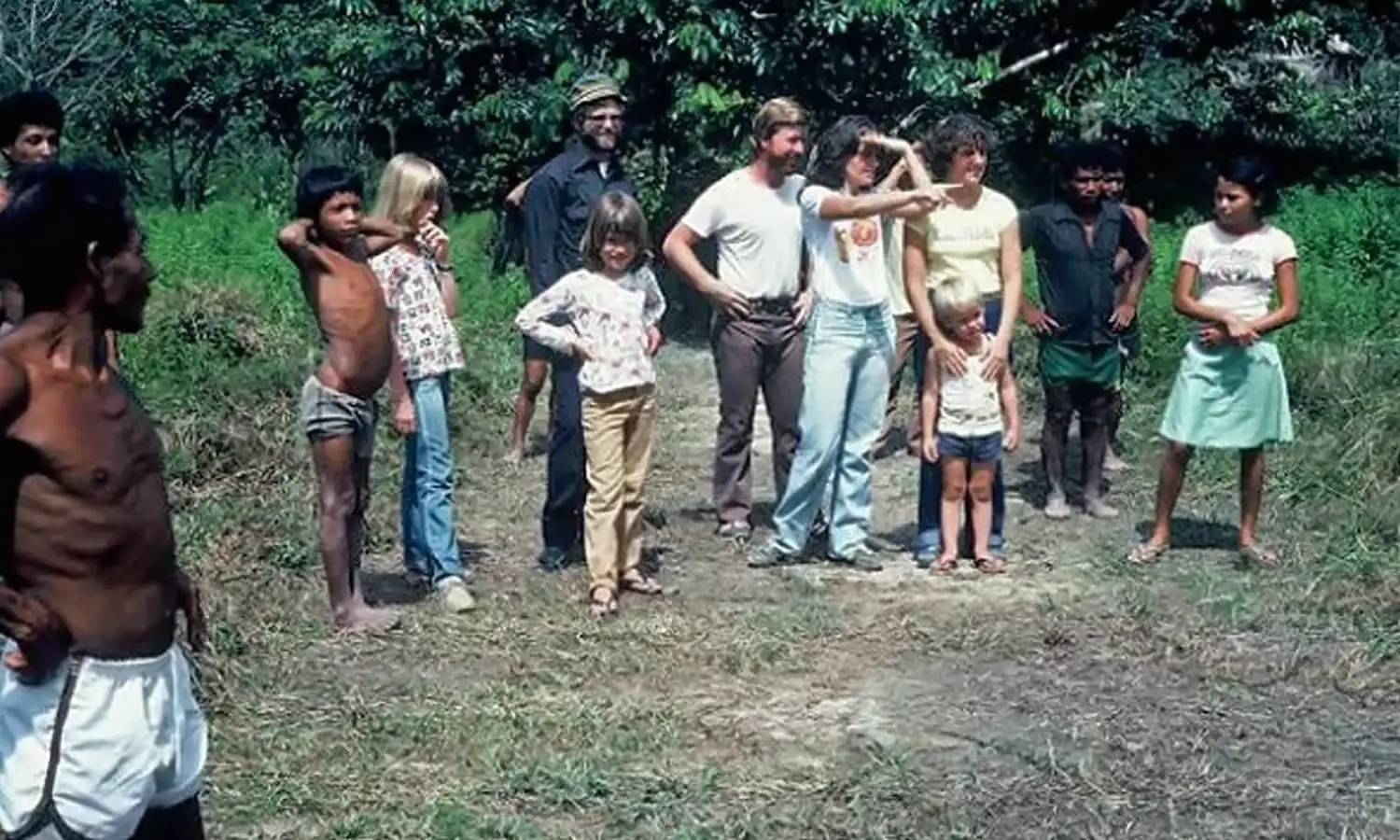

In 1977, Daniel Everett, a recent graduate of the Moody Bible Institute of Chicago, had signed up to become one of those missionary linguists. Impressed by his talent, SIL sent him, along with his wife Keren and their three young children, to learn a language the Institute had failed to crack after twenty years of study: the language of the Pirahã, an indigenous group numbering less than a thousand living in the Brazilian rainforest, near the mouth of the Maici river, a tributary of the Amazon.

The Everetts soon after they arrived among the Pirahã as a missionary family

Daniel Everett conducting language research among the Pirahã many years later

Despite the difficulty of the language, Everett eventually succeeded in learning it, and, in the process, did much to dismantle Chomsky’s armchair theories about universal grammar. Pirahã lacks “linguistic recursion”—the ability to nest grammatical structures within each other. So, for example, there is no Pirahã equivalent to the English phrase “John’s brother’s house.” According to Chomsky, recursion is what makes languages open-ended, distinguishing them from the finite communication systems of nonhuman animals. And indeed, without recursion, a finite vocabulary can only be used to construct a finite number of valid sentences. 47 The lack of recursion is not quite as limiting in practice as it may appear; a Pirahã speaker can break nested ideas up into multiple sentences, as in “John has a brother. This brother has a house.”

However, Pirahã also lacks several other features common to most languages, including past and future tenses, conditionals, and numbers. These gaps aren’t superficial. Monolingual Pirahã people, for instance, don’t just lack words for numbers, but lack any sense of numerosity, beyond a qualitative difference between “one” and “more than one.” They can’t do math at all. 48 Similarly, the lack of tenses and counterfactuals is associated with a worldview that only credits direct experience. A sentence beginning “John said that …” doesn’t just pose a translation challenge, but an epistemic one.

Daniel Everett discussing numerosity with a Pirahã

The larger picture here demonstrates that a wide range of cognitive capacities Chomsky and his followers have assumed to be genetically pre-programmed are not. Numbers and verb tenses are, like reading, social technologies. Human brains are special not by virtue of having evolved a specific suite of capabilities, but by virtue of having the flexibility, capacity, and inclination to be able to learn them, both from our direct sensory experiences and from others.

As you might imagine, Everett had little luck converting a people who have no use for what John, or any other first-century evangelist, had to say. With much effort, Everett managed to translate the Gospel of Mark, but when he tried to explain that Jesus lived a long time ago, yet he, Everett, still had Jesus’s words, the reply was, “Well, Dan, how do you have his words if you have never heard him or seen him?” Taking pity, a Pirahã took Everett aside to explain, “We don’t want Jesus. But we like you. You can stay with us. But we don’t want to hear any more about Jesus.” 49

Everett did stay with them. The wonderful book he wrote three decades later, Don’t Sleep, There Are Snakes, describes not only the unusual features of the Pirahã language, but how, instead of winning converts, life among them ultimately caused him to give up his own faith!

Long Tails

I find it fascinating to consider that the Biblical translation work thousands of missionaries have done over the years could so efficiently bootstrap multilingual AI models. With a large AudioLM-type model pretrained on many spoken languages, recordings of a few dozen hours of conversation among elders speaking a rare language could likely do the same. 50

There’s a seeming paradox here. On one hand, improvements to a large model seem to be subject to diminishing returns as pretraining runs increase in size—hence AI’s voracious appetite for data. In other words, training on two hundred billion tokens of web content isn’t twice as good as training on one hundred billion tokens; it’s only incrementally better. In fact, doubling the performance of a model requires an exponentially larger amount of data, as well as an exponential increase in the number of model parameters. 51

And yet we also see that a miniscule amount of additional data in a new language can enable a model to go from monolingual to bilingual, which seems like a doubling of its capability. In fact, if we keep the amount of novel language content fixed and vary the original amount of pretraining data, the bilingual results get better as the amount of initial pretraining increases. That is, the larger and more capable the original model, the better use it can make of a very limited amount of novel language content. How can these models simultaneously exhibit logarithmically diminishing returns to scale, while also seeming to become exponentially faster learners as they grow? Counterintuitively, the two effects turn out to be closely related.

Remember that translation emerges as an automatic capability in large language models because it’s a form of analogy. Specifically, the cloud of dots representing the embeddings of words or concepts in language A is paralleled by an almost identically shaped cloud of dots representing all of the words or concepts in language B; moving from one cloud to the other is literally a matter of adding or subtracting a constant shift in the embedding space. The shape of each of those clouds is, in turn, the shape of the human umwelt, the geometry of everything we know how to talk about.

The symmetry between these clouds—if the model is massively multilingual, a many-way symmetry—offers powerful opportunities for generalization, and generalization is what intelligence does. Recall that, once a convolutional net learns how to see generically, it can easily learn how a new object looks in one shot, because learning how to see involves building a generic representation for objects that includes all of the symmetries arising from rotating any given object around in space, looking at it from farther away or closer, changing the lighting, and so on. In just the same way, learning both the universal shape of the human umwelt and the symmetries between languages allows a new language to be learned in something approximating one shot—or a single book, like the New Testament.

Why, then, do we see such diminishing returns to scale in pretraining? We need to keep in mind here that if we mixed together samples from two very unequally represented languages, say ninety-nine percent English sentences and one percent sentences in Wolof (a West African language), we would see the usual diminishing returns on the combined data. It’s only when we segregate the Wolof sentences and train on them only after training on the English, that we see evidence of the accelerated acquisition of Wolof.

In the mixed data, the Wolof sentences would comprise unusually important training examples with novel content, but the point is that all datasets—including the sentences purely in English—are mostly repetitive, only occasionally adding new information. Even in a monolingual dataset, words and concepts have a long-tail distribution, just like the distribution of languages themselves.

Long tails like this are a signature of multifractal properties in data: details have details, and those details have their own even more esoteric details. Language, and knowledge in general, is multifractal like that. Math may comprise only one percent of the vast world of things we talk about. Technical discussion among STEM professionals may comprise only one percent of the math talk (the rest being dominated by the arithmetic kids do in class, or basic accounting, or splitting the tab at restaurants). Among those professionals, one percent of the discussion might be about number theory. Within number theory, perhaps one percent of the conversation touches on, say, the Grothendieck–Katz p-curvature conjecture.

Multiplying those four percentages by the eight billion people on Earth gives eighty readers, if my own grade-school math is right, which seems in the right ballpark for this particular community of interest. There’s nothing unique about the Grothendieck–Katz p-curvature conjecture, either; not everyone is cut out for such esoteric math (I’m not), but lots of people nerd out on one thing or another. The most elaborate conspiracy theories of flat earthers, the deep recesses of Pokémon fan fic, and the craftspeople keeping handmade accordion manufacturing alive also represent fine-grained detail in humanity’s Multifractal of Everything.

One could draw a cartoon of pretraining as follows. Suppose that, to come across a novel concept after reading some number of sentences at random, you have to read one percent more. If you’re a model, that means that the first hundred sentences you encounter on your very first training iteration are all likely to contain new stuff. But after reading a couple of hundred sentences, only one in two adds anything novel. After reading a million sentences, you’d likely need to read another ten thousand before coming across something you hadn’t seen before. That’s why learning slows down—not because it becomes less efficient, but because when sampling at random, the likelihood of encountering something genuinely novel in the next piece of data decreases so dramatically as a function of how much you already know.

In-Context Learning

Companies like Microsoft and Google are now pretraining large models on a good chunk of the entire Web; social media are increasingly in the mix too. Some analysts are pointing out that, at this rate, even given the ongoing exponential growth of digital data, we’ll soon run out. 52

Critics have deemed this apparently bottomless demand for human-generated content problematic for conceptual, ethical, and pragmatic reasons:

- Pretraining seems very different from the way humans learn, both emphasizing the inefficiency of today’s approaches to machine learning and adding fuel to arguments that AI models don’t really understand anything, but are just giant memorizers. While I’ve offered a range of evidence that this isn’t so, it’s a constant issue in AI research; it’s as if no AI test can ever be closed-book, because the model has read, compressed, and potentially memorized some approximation of “everything.”

- Concerns have been raised about the legality and ethics of using so many peoples’ content this way. Even when legality isn’t at issue, little of this material was created with the intent for it to become AI fodder. And once a particular piece of media has been used in pretraining, it becomes difficult to determine whether and to what degree it influences the model’s subsequent output. Especially when AI creates intellectual property or in some other way produces economic value, this raises questions about what constitutes “fair use” and when something is unique versus a “derivative work.” 53

- The extreme industrial scale of pretraining, both in terms of data and computing power, limits the creation of the largest “frontier” models to the very small number of companies and governments able to make massive capital investments. 54 On one hand, this may be a blessing (while it lasts), as it makes prevention of the most dangerous uses of advanced AI at least possible; it wouldn’t be if anyone could roll their own. However, the situation raises concerns about monopoly, unfair competition, and AI diversity.

- The most profound theoretical difficulty with the pretraining approach is the way it separates learning from inference—an unwelcome legacy from the early days of cybernetics. This means that the model is, in some sense, frozen in time; when one begins interacting with it, it knows about nothing in the world that happened after the date the pretraining data were scraped. In effect, it has total anterograde amnesia.

None of these issues is quite as straightforward as it appears.

Regarding #1, the unnaturalness of pretraining, I suspected for many years that the backpropagation method universally used to train large models today, but long known not to be biologically plausible (per chapter 7), was at fault. Surely, I thought, our brains implement a brilliant learning algorithm that would greatly improve on backpropagation. Otherwise, how could any of us have grown from helpless newborns into smartypants college students in a mere eighteen years, most of which were spent sleeping, daydreaming, watching inane cartoons, playing 8-bit video games, avoiding our parents, and smoking weed behind the school dumpster? 55

Brains may indeed implement some hyper-efficient neural-learning magic, but it’s increasingly clear that a good deal of the suboptimality in pretraining lies in a foie gras–like approach to training data. We take as much of the Web as we can grab, grind it up into paste, and force it down the neural net’s gullet, in random order, with no regard for curriculum, relevance, redundancy, context, or agency on the part of the model itself. (Apologies if this just put you off dinner.)

Indeed, the contrast between the usual diminishing returns to scale on training data and the accelerated learning we see with continued pretraining on novel data (as with the Wolof example) is telling. It suggests that much of today’s pretraining is redundant. The bigger our models get, the more wasteful the random-sampling approach becomes. In short, the problem may be in the teaching more than in the learning.

Regarding #2, while AI supercharges the “fair use” debate due to its speed and scale, the question of originality has been hotly contested for decades, as it’s not specific to AI; all creative work is necessarily a product of one’s life experience, which includes everything a person has ever seen, heard, touched, smelled, tasted, read … and despite any self-serving story our interpreter may spin, we are often unaware of our influences, or the degree to which we’ve covered our tracks via mutation and recombination, otherwise known as “originality.”

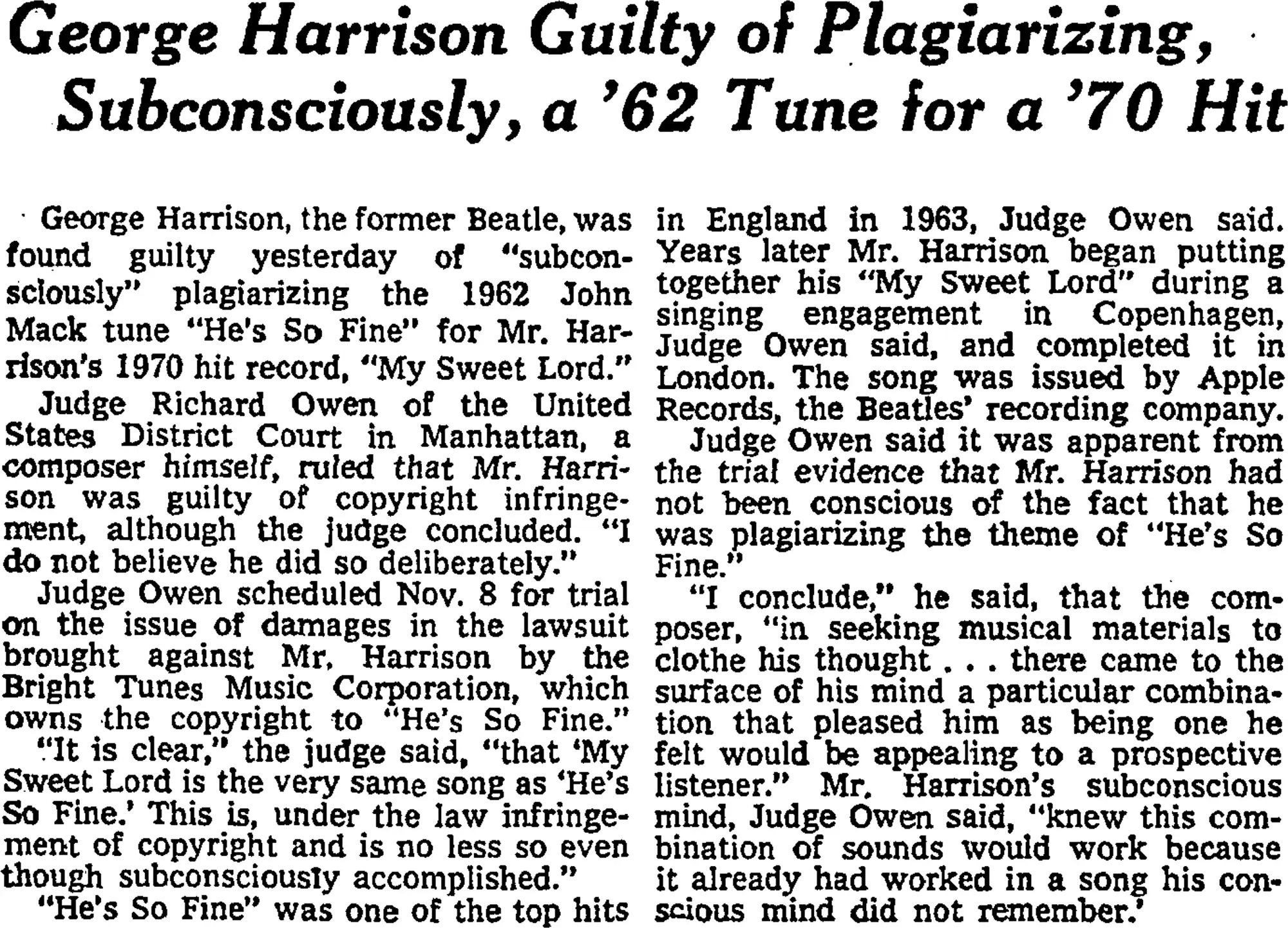

In one famous case, George Harrison, post-Beatles, released his first solo hit in 1970, “My Sweet Lord,” a catchy song calling for an end to religious sectarianism. But, as it turned out, “My Sweet Lord” was extremely reminiscent of Ronnie Mack’s chart-topping 1963 gospel hit “He’s So Fine.” Harrison had of course heard this song, but was unaware that he was copying it, almost note for note. What followed has been characterized as “without question, one of the longest running legal battles ever to be litigated in [the United States].” 56

From the New York Times, 8 September 1976; final resolution of the legal case would only occur in 1998.

If we could figure out how to train models with far less data, more like us, it would go a long way toward addressing issues #1–3. Curating the training data would become more practical, ensuring the answers to test questions aren’t included, avoiding indiscriminate scraping of living artists’ work, and (for better and worse) opening up the ability to create AI models from scratch to a broader public.

The real key, I believe, lies in #4: erasing the distinction between learning and inference. We know this is possible, not only because brains exhibit no such distinction, but because of a series of findings that shed light on fundamental properties of sequence learning and help clarify why Transformers work as well as they do.

In 2020, OpenAI announced their GPT-3 language model, the predecessor to GPT-3.5, which would power ChatGPT. The announcement came in the form of a paper with a curious title: “Language Models Are Few-Shot Learners.” 57 The learning in question was mysterious, and, it seemed at the time, unrelated to learning in the usual sense, involving minimizing error through backpropagation. The authors were pointing out that during inference—that is, normal operation after training—language models still appear to be able to learn, and to be able to do so with extraordinary efficiency, despite no changes to the neural-network parameters. Specifically, they defined “few-shot learning” as giving the model a few examples of a task in the context window and then asking it to do another such task; “one-shot learning” involved only a single example and “zero-shot learning” included no examples, only describing the task to be done.

We’ve already encountered several instances of such situations. Asking a model that wasn’t pretrained or fine-tuned on translation tasks to do translation is, for example, a zero-shot learning task. So is asking for chain-of-thought reasoning. Or, for an example that definitely didn’t come up anywhere in the pretraining, consider the following instance of zero-shot learning:

“‘Equiantonyms’ are pairs of words that are opposite of each other and have the same number of letters. What are some ‘equiantonyms’?”

To be clear, equiantonyms aren’t a thing, or at least, they weren’t until my co-author Peter Norvig and I concocted this query in 2023 to illustrate zero-shot learning. 58 This isn’t a particularly easy task; as of 2024, none of the mainstream chatbots reliably succeed, though with some prodding, Gemini Advanced manages to come up with “give/take,” adding cheerily that it is “determined to find more.”

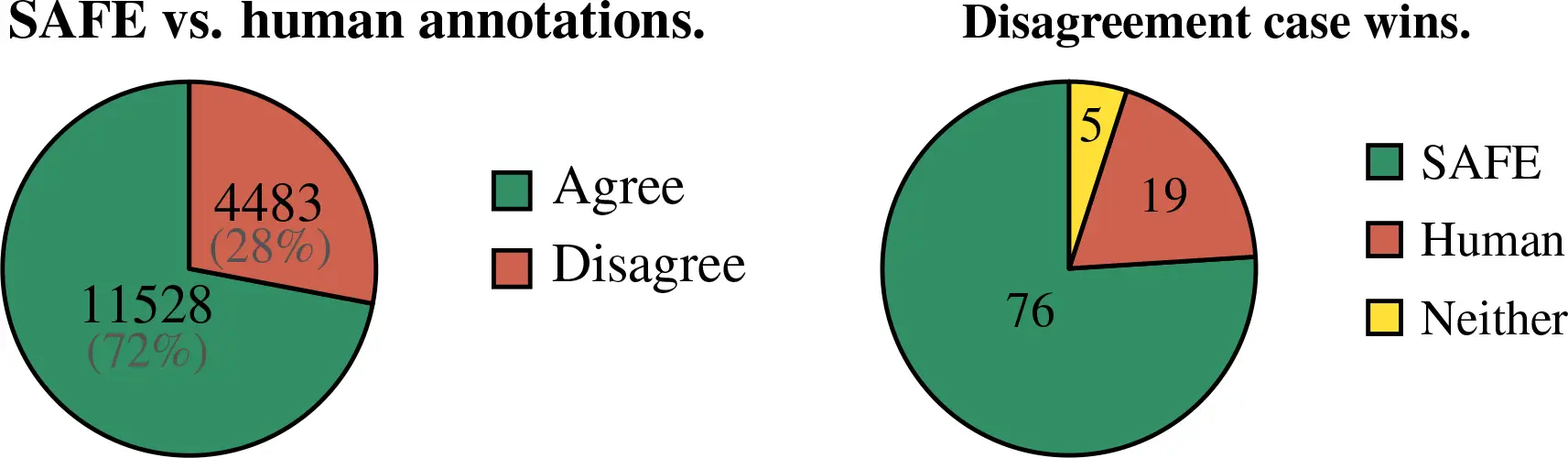

Can we really call this learning if the model parameters remain unchanged? It’s straightforward to perform learning by ongoing unsupervised or supervised backpropagation (i.e., fine-tuning) to cause a baseline model to improve at known tasks like translation, or to perform novel tasks like coming up with equiantonyms. We could then compare baseline model performance with the performance of these refined models. Performance has to be measured by prompting, that is, by asking “What are some ‘equiantonyms’?” with no preamble. Presumably, the baseline would already be OK at translation, though ongoing training would improve it; unless the model makes a very lucky guess as to the meaning of equiantonym, its baseline performance at that novel task would be zero, though, with training, it will improve. Similarly, we could draw a comparison between the baseline with no preamble and the baseline with zero-, one-, or few-shot prompts. All of these interventions result in improvements over the baseline. So, despite their fixed parameters, the prompted models seem like they are learning!

The GPT-3 authors pointed out that this ability to learn on the fly from the prompt itself—“in-context learning”—is, like math, reasoning, or any other model capability, a skill that improves with scale; bigger models are better at it. A 2023 paper from researchers on my own team finally began to clarify how it works. 59 They showed that a simplified Transformer with a single attention layer could, given a toy problem and a specially configured array of parameters, perform the mathematical equivalent of a single backpropagation step on the contents of the context window. In other words, in this somewhat contrived setting, the model is able to respond to its prompt as if it had learned from that prompt before predicting the next token. Adding a second attention layer makes it possible for the model to effectively take two backpropagation steps, a third layer allows a third step, and so on.

If this result had only held given hand-specified parameters, it would have been no more than a curiosity; indeed, it had recently been discovered that a Transformer is Turing complete, so it could, in theory, perform any computation on its context window, given the right parameters. 60 However, as it turns out, ordinary pretraining results in precisely the same in-context learning behavior as in the hand-specified case. Pretrained transformers, in other words, really do learn to learn.

As of 2024, learning in-context isn’t yet fully solved, because although Transformers do it automatically, they don’t remember anything they’ve learned once the “training” material scrolls out of the context window. The missing machinery may involve something like a hippocampus, and perhaps a sleep cycle for consolidating knowledge and memories.

Regardless, in-context learning is important, both theoretically and practically. Working through its mechanics demystifies some of the Transformer’s more surprising capabilities. It reveals a unity between learning and prediction that makes sense, when considered carefully. After all, prediction always involves modeling a changing environment (unless you’re in an unchanging Dark Room); learning is nothing more than prediction over long timescales. Over short timescales, and especially when what is learned is rapidly forgotten, we often call it “adaptation.” 61

An important, related theoretical point concerns the difference between cause and correlation. One of the criticisms often leveled against machine learning is that, since it usually involves passive learning (as with pretraining), it can only learn correlations, not causes. 62 According to this critique, it’s not possible for a passively trained AI model to know that X causes Y, but only that X and Y are correlated in the training data. Living things like us, on the other hand, can easily learn causation by doing experiments. Perhaps when your cat, as an active learner, uses her paw to blithely push a vase off a high shelf, she’s only experimenting to see if, indeed, pushing it that way will cause it to fall and shatter.

It’s true that when experimentation is possible, it offers a powerful way to test causation. However, the presumption that causality (technically, “entailment”) can’t be inferred from passive observation, and in particular by pretrained language models, has been proven wrong. 63 It’s not necessarily easy, nor is it always possible, but it can be done. Indeed, there’s no shortage of researchers who study systems that they can’t causally experiment on—astronomers and macroeconomists, for example. In other cases experimentation is ethically prohibited, as in some areas of social science and medicine. These researchers must rely on “natural experiments,” that is, on observations that strongly imply causal relationships. Such observations can never entirely prove causation, but, then again, neither can an experiment. (Perhaps the cat was just adding another trial, to lower the uncertainty in her causal model. Yep—this vase shattered when it fell, too. Right. Again.)

Historically, the claim that machine learning only learns correlations, not causes, gained currency during the CNN era, in the 2010s. Since most CNNs did not operate over temporal sequences, but merely classified isolated stimuli, it was hard to see how they could learn anything other than correlations among those stimuli. Nvidia’s self-driving car prototype DAVE-2, for instance, 64 learned through supervision to associate being left of the centerline of the lane with a “steer right” output, and being right of the centerline with “steer left,” but it would be a stretch to claim that the model understood that those steering actions would subsequently cause those centerlines to be closer to the middle. They could just as well have done the opposite, or nothing. Indeed, DAVE-2 had no internal representation of “subsequently.” If you shuffled all of the frames in a driving video, its per-frame outputs would remain the same, and, indeed, during training the frames are shuffled randomly.

Learning to predict changes everything, though. Specifically, an autoregressive sequence model trained on the same task would learn the effect on subsequent frames of steering left or right, which implies that it would learn, at least within the limits of its umwelt, what steering does. It would be able to use that understanding to follow through with a steering correction even if the forward-facing camera were briefly obscured. It would even be able to simulate counterfactuals—how the view would change if it were to steer left versus right. Ordinary, passive pretraining, moreover, would suffice to learn these causal relationships. There’s nothing magical about learning causality; it simply requires modeling time sequentially.

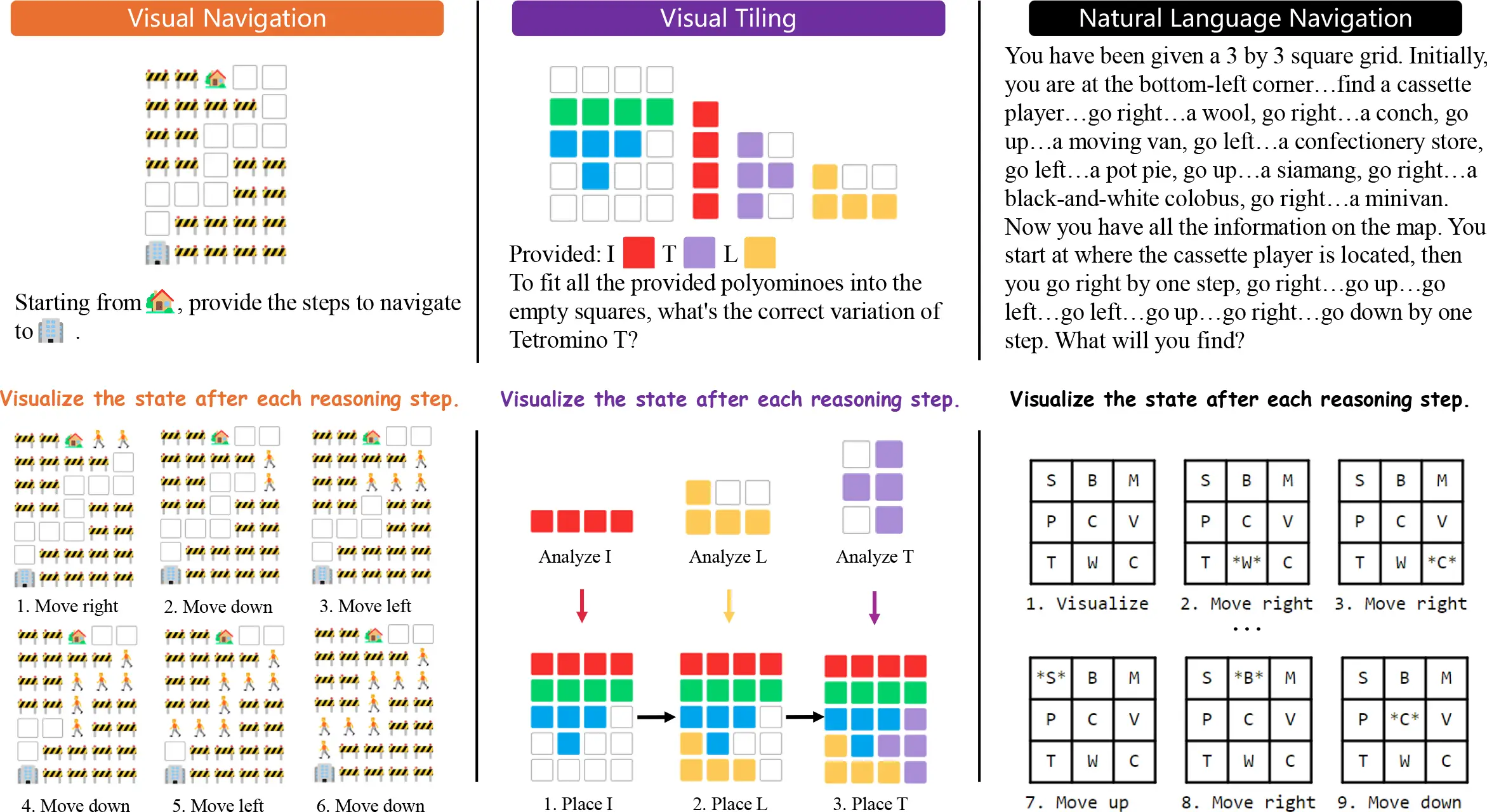

But let’s return to the four problems described earlier, and how in-context learning can help overcome them. If Transformers learn how to learn, they could teach themselves, or each other, just as we do. They could ask for or look up information, or, in some circumstances, even perform experiments to learn. 65 This kind of active learning, integrated into agential behavior, would be vastly more efficient than the passive random sampling used in today’s pretraining. Learning could be curricular, beginning with children’s books—which, as shown by TinyStories, 66 needn’t require massive amounts of material. Then, having learned to learn basic human concepts and language, an AI could progress to the Young Adult shelf, and on from there. Just as we do.

Each learning-capable AI agent could specialize by learning whatever fields are most useful in its particular context, doing so in an individual, experiential way. If a given agent is interacting with the eighty nerdiest number theorists on the planet, its learning will eventually be focused on a very specific corner of the Multifractal of Everything—a corner that would take gargantuan amounts of computing power to resolve adequately with random sampling. As a bonus, we’d have a true diversity of agents interacting with us socially, rather than the monolithic, generic, and non-specialized corporate models representing the state of the art in 2024.

The burning question is: would those individuated models be like people? And what, if anything, would it be like to be one of them?

Mary’s Room

In 1982, Australian philosopher and self-declared “qualia freak” Frank Jackson posed a famous thought experiment, the “Knowledge Argument,” now more commonly known as “Mary’s Room.” 67 It went like this:

Mary is a brilliant scientist who is, for whatever reason, forced to investigate the world from a black-and-white room via a black-and-white television monitor. She specializes in the neurophysiology of vision and acquires […] all the physical information there is to obtain about what goes on when we see ripe tomatoes, or the sky, and use terms like red, blue, and so on. She discovers […] just which wave-length combinations from the sky stimulate the retina, and exactly how this produces via the central nervous system the contraction of the vocal chords and expulsion of air from the lungs that results in the uttering of the sentence “The sky is blue.” […] What will happen when Mary is released from her black-and-white room or is given a color television monitor? Will she learn anything or not? It seems just obvious that she will learn something about the world and our visual experience of it. But then it is inescapable that her previous knowledge was incomplete. But she had all the physical information. Ergo there is more to have than that, and Physicalism is false.

Today, of course, language models are Mary, so the Knowledge Argument has been getting a fresh airing.

As powerful as Jackson’s fable sounds, it is, like so many philosophical arguments, rooted in storytelling and folk intuition. The “ergo” ties a bow around a logical syllogism, but none of that syllogism’s predicates are unambiguously true or false, as they would have to be in a mathematical proof … and we’re in territory where our folk intuitions can lead us astray. 68 So, let’s update those intuitions by bringing to bear what we now know about perception and experience, which is a good deal more than anyone knew in 1982.

As of this writing, nobody has yet, to my knowledge, hooked up an artificial nose or taste buds to a language model, though I’m sure it will happen soon enough. Being able to physically smell isn’t essential for a model to be able to “get” smell, though. Remember, when COVID causes you to temporarily lose your sense of smell, or you just have a stuffed-up nose, you don’t suddenly become a person for whom the smell of bananas ceases to exist. You are still a smelling being; smells are still a part of your umwelt, just as vision is still part of your umwelt when your eyes happen to be closed.

This is because, fundamentally, smell, and all other modalities, are experienced mentally. They are models. You have a sense of smell because regions of your brain have learned how to model smell; your nose merely prompts characteristic neural activity patterns in those regions. The same regions will also activate, albeit perhaps to a lesser degree, when you imagine a smell. Similarly, your eyes are not your sense of vision; rather, they merely provide error-correction signals to keep your visual cortex’s “controlled hallucination” reasonably well aligned with the world out there.

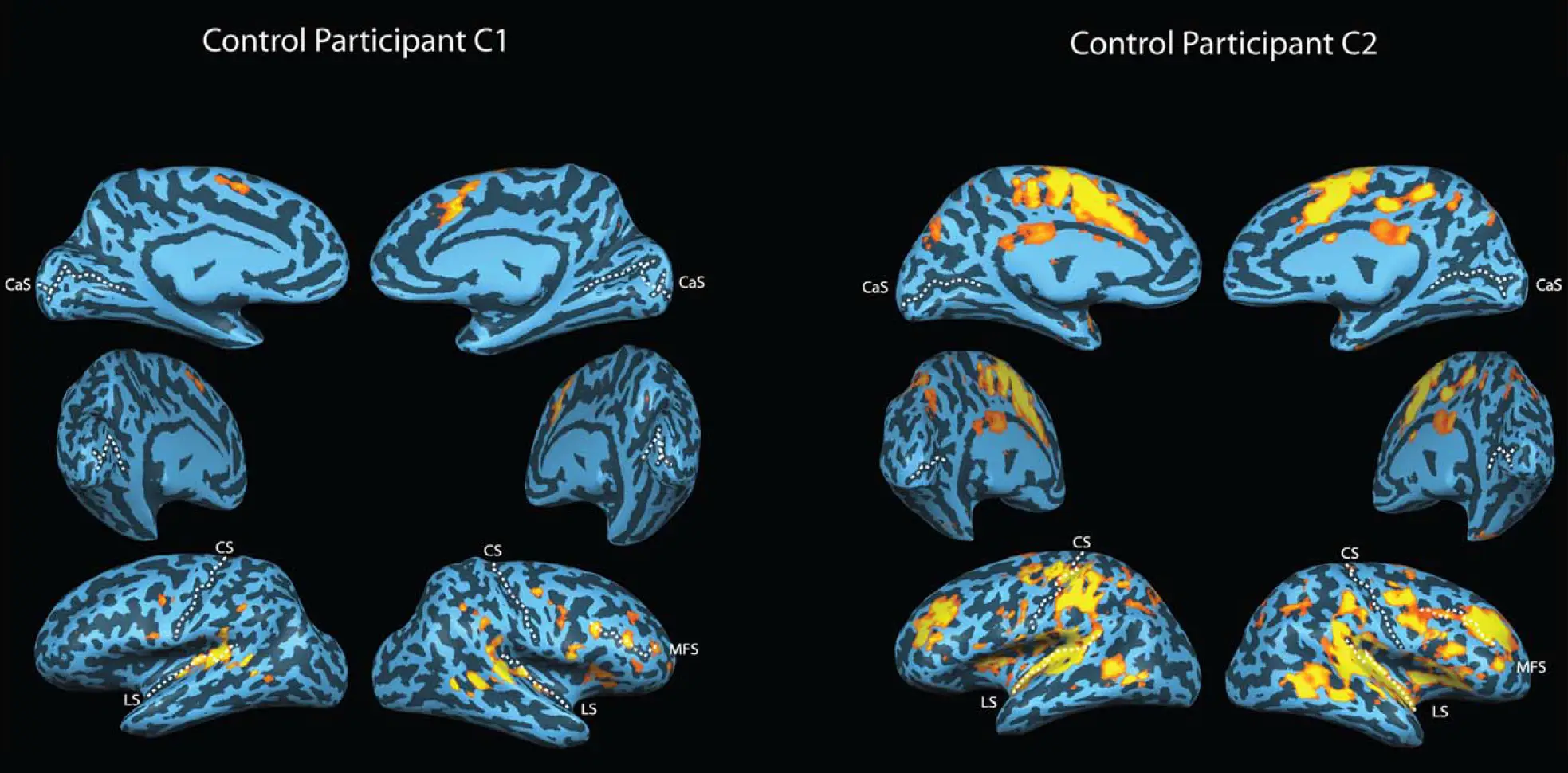

There’s ample evidence that perception and imagination share a common neural basis. Damage to one hemisphere’s visual cortex, for example, doesn’t just prevent you from being able to see things in the opposite visual hemifield, but even from knowing that the opposite hemifield exists, or being able to imagine what might be in it. 69

Damage to the eyes, paradoxically, can have exactly the opposite effect. In 1760, Swiss naturalist Charles Bonnet described the complex visual hallucinations experienced by his grandfather, who suffered from severe cataracts. The older Bonnet began to see nonexistent horses, people, carriages, buildings, tapestries, and other shapes; Charles, too, had weak vision, and as it progressively worsened he began to experience similar hallucinations. 70 These symptoms, now often called Charles Bonnet Syndrome, are common in people going blind.

Even without organic damage, anyone in total darkness for an extended period can experience similar hallucinations, a phenomenon known as “prisoner’s cinema.” This is exactly what one would expect to happen when the visual cortex’s hallucinations remain active but float free of their moorings, unconstrained by error-correction signals from the eyes.

Memory uses the same neural machinery as perception and imagination. Just as the sight of a banana, or the smell of its distinctive ester in your nose, will trigger the controlled hallucination “banana” in your brain, the word banana, or the memory of eating one, can do the same, albeit (unless you’re Marcel Proust) less intensely. Any of these banana-related activity patterns may also be tagged with something like a positional encoding, as described in chapter 8, to let you know that this banana experience isn’t happening here and now.

Tellingly, a damaged or missing hippocampus, as in Henry Molaison’s case, will not only impair the formation of new memories, but will also impair the ability to imagine new experiences. 71 This is consistent with the speculation that imagining a future experience requires pairing known concept embeddings with new positional encodings, perhaps generated in the hippocampus, to represent a future or counterfactual time or place.

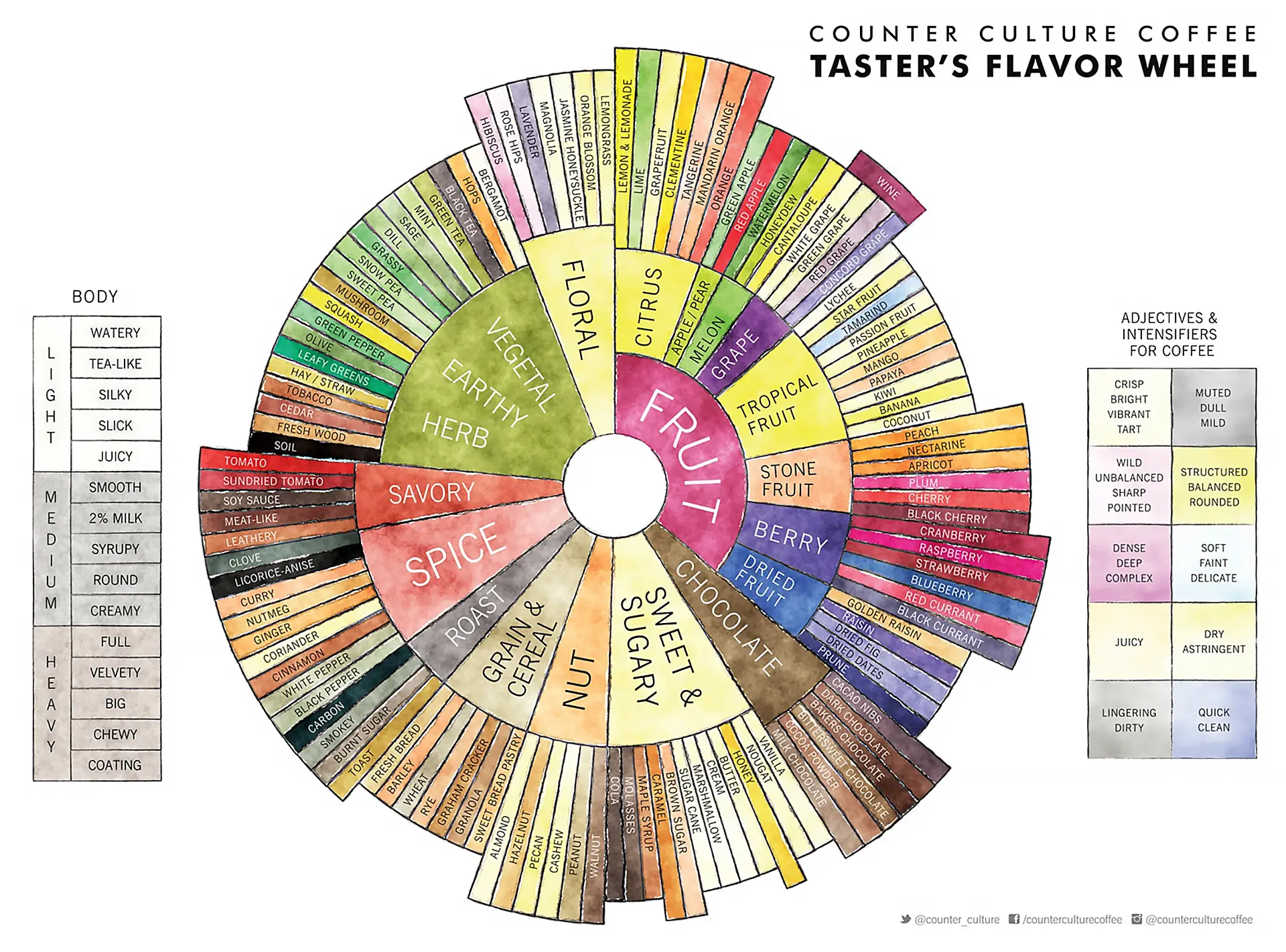

In light of the preceding, the question of whether a language model has perceptual “qualia” seems to have little to do with sense organs, and much to do with the model itself. So many food, wine, and coffee nerds have written in exhaustive (and exhausting) detail about their olfactory experiences that the relevant perceptual map is already latent in large language models, as the “six modalities” paper shows. In effect, large language models do have noses: ours. Those models just happen to be hooked up to noses via textual token embeddings rather than neural pathways.

The culturally-informed encoding of one specific region of the human sensory umwelt into language, a.k.a. some of the things coffee nerds say about coffee.

However, we also have to acknowledge that “qualia” questions cannot be answered objectively. We have to form a model of the model to decide whether it “gets” smell, or color, or anything else. So, we once again have a relational or Turing Test sort of question, with no perspective-independent “view from nowhere.”

AI and cognitive-science researchers struggled over this issue in a debate about whether a Transformer could effectively build a world model of Othello, a simple Go-like board game played on an 8×8 board. 72 In 2022, a group of researchers pretrained a small-ish Transformer using transcripts of valid Othello games. Sure enough, the model learned how to play valid moves, in effect “autocompleting” games. 73

However, the question the researchers were trying to answer wasn’t “can the model play,” but rather, “has the model learned an internal representation of the board?” It can easily be argued that without such a representation, it would be hard to know which moves are valid, but the goal was to address critics who claimed that Transformers work by rote memorization rather than by actually modeling the world, and the world of Othello—consisting of nothing but the state of an 8×8 board—seemed simple and objective enough to put the question to rest.

But how can we tell whether such a world model exists, somewhere among the zillions of neural activations in the Transformer? Ironically, that’s a job that only machine learning can solve. So, the researchers needed to build a second model, which they called a “probe,” to learn how to map the Transformer’s neural activity to an 8×8 pixel image of the board. When their probe was too simple—just linear decoding—it didn’t perform very well; but when it was made a bit more sophisticated with the addition of an extra layer, it did work. The trouble is that, if the probe is trained to map neural activations (which include information about the entire game) to the correct board state, then the researchers could effectively have been using supervised learning to train the probe to learn a world model! And so the debate has gone round and round. 74

It takes a model to know a model. Similarly, when brain regions are connected to each other, they are each acting as a “probe” of the others, although no region is connected to anything like the perspective-independent ground truth of an 8×8 board.

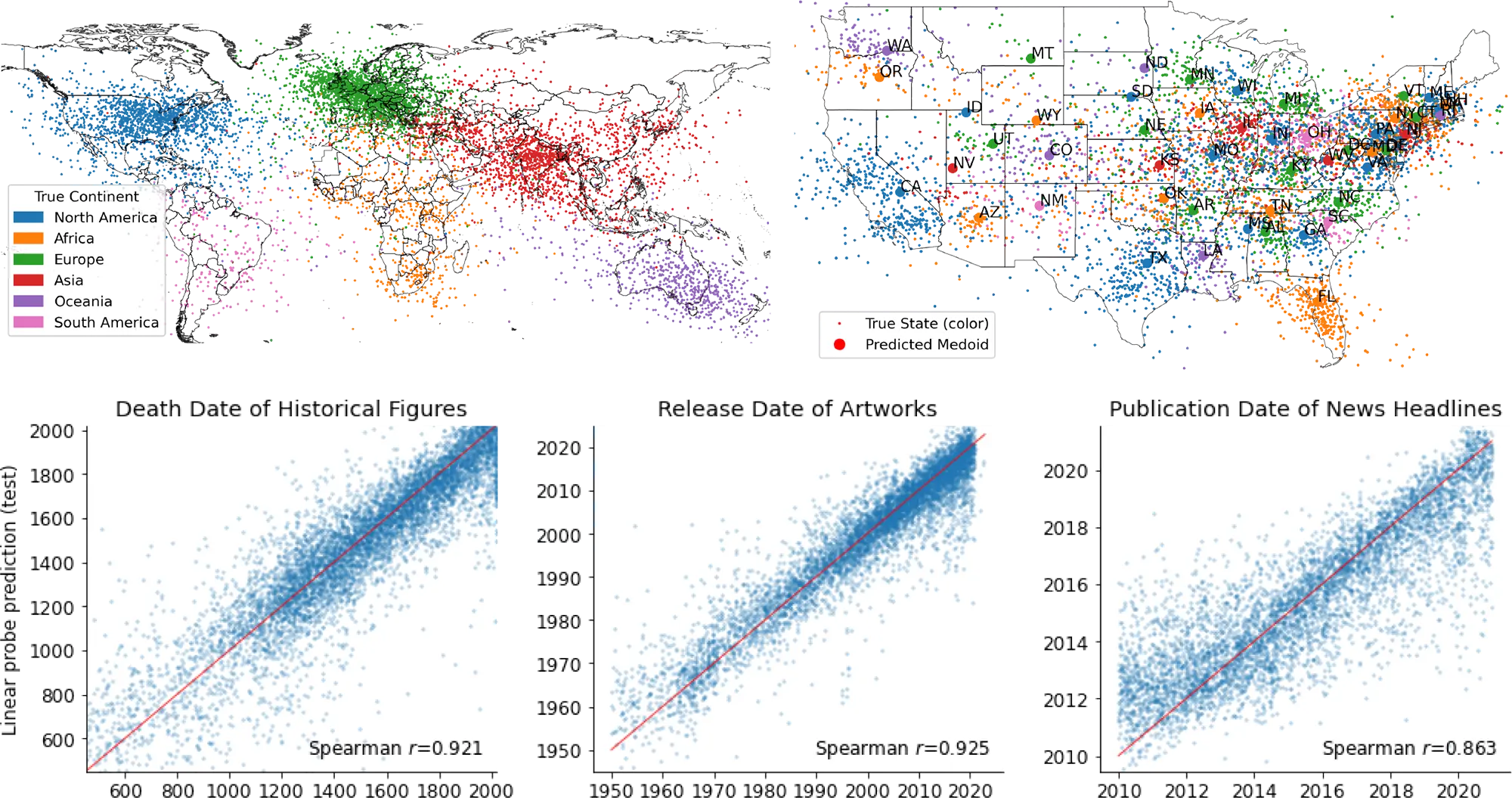

Each dot is the response of an optimal linear probe to the layer 50 activations the Llama-2-70b LLM upon processing the last token of a place name (top) or event (bottom). This demonstrates that the model has learned continuous internal representations of space (here, position on the world map) and time (here, year); Gurnee and Tegmark 2023.