Periodization

The usual narrative about AI envisions it in comparison to a human, dividing its history into three eras: a long “before” period of Artificial Narrow Intelligence (ANI), when it’s inferior to humans; the (short-lived) achievement of Artificial General Intelligence (AGI), when it matches human capability; and a mysterious “after” period of Artificial Superintelligence (ASI), in which it exceeds human capability. The arrival of ASI has sometimes been held to herald the “Singularity,” a threshold into an unknowable and unimaginable future. 1 As of 2024, virtually all pundits agree that AGI has not yet been achieved, but many believe that, given the pace of improvement, it could happen Real Soon Now, and will be immediately followed by ASI and whatever lies beyond. 2

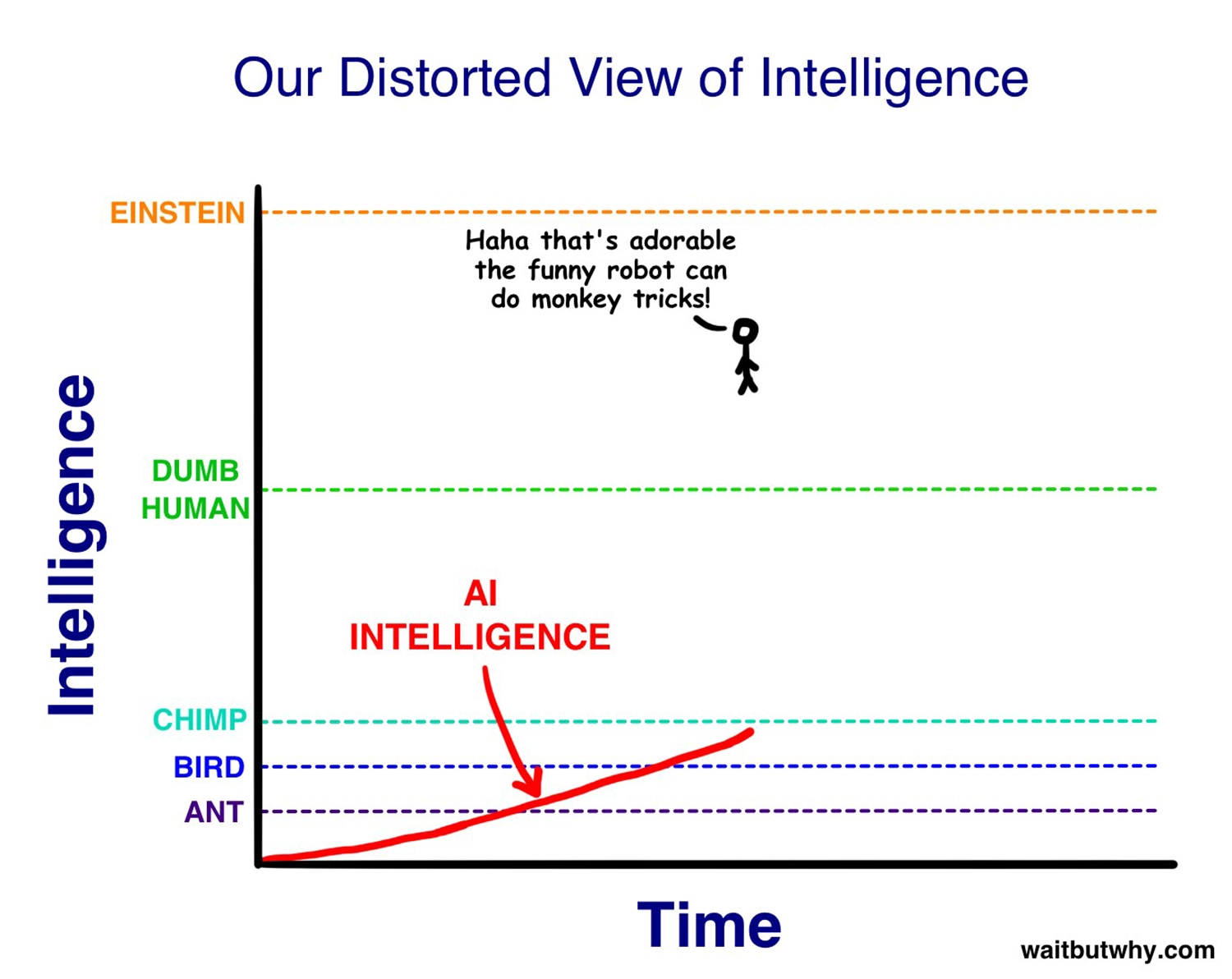

Author and blogger Tim Urban’s perspective on the before, during, and after of Artificial Superintelligence; Urban 2015.


I’ve suggested or implied many problems with this narrative. For one, it’s difficult (for me, at least) to see how today’s AI is not already “general.” The “narrow” versus “general” distinction was invented when we began to apply the term “AI” to task-specific models, such as those for handwriting recognition or chess playing. This specificity made such models obviously “narrow”; to a layperson, calling such systems “artificially intelligent” made little sense, as they didn’t represent anything one would normally associate with that term.

Hence “AGI” was coined to describe what had previously simply been called AI; it covered everything from Star Trek’s Data to its ship computer (which was like a disembodied person), and from Douglas Adams’s Marvin the Paranoid Android to his smart elevators of the future: “Not unnaturally, many elevators imbued with intelligence […] became terribly frustrated with the mindless business of going up and down, up and down, experimented briefly with the notion of going sideways […] and finally took to squatting in basements sulking. An impoverished hitchhiker visiting any planets in the Sirius star system these days can pick up easy money working as a counselor for neurotic elevators.” 3

While this scenario remains as silly today as it was in 1980, it could now literally be the outcome of a two-day hackathon involving a jailbroken large language model, a Raspberry Pi, and an elevator. 4 (Yes, we live in an era when the jokes literally write themselves.)

If the difference between narrow and general AI is … well, generality, then we achieved this the moment we began pretraining unsupervised large language models and using them interactively. 5 We then noticed that they could perform arbitrary tasks—or, per Patricia Churchland’s 2016 critique of narrow AI, 6 non-tasks, like just shooting the breeze.

In-context learning marks an especially important emergent capability in such models, because it implies that the set of tasks they can perform is not finite, comprising only whatever was observed during pretraining, but effectively infinite: a language model can do anything that can be described. Performance could be weaker than human, of roughly human level, or of greater than human level. State-of-the-art AI performance climbs every month, putting more tasks into the third category. Such steady increases in performance will likely continue to follow an exponential trajectory for some time, much as traditional computing performance did during the Moore’s Law decades.

The decisive moment for traditional computing came when special-purpose computers gave way to general-purpose ones, starting with the ENIAC in 1945. Everything afterward was an exponential climb in performance, not a discrete transition. In the same vein, AI has already undergone its “ENIAC moment” with the transition from narrow learning of predefined tasks to general AI, which is based on unsupervised sequence prediction and capable of in-context learning. It seems reasonable to call this a real step change. What follows has been, and will continue to be, dramatic, even exponential, but the changes are of degree, not of kind.

There’s something arbitrary, bordering on absurd, about pundits arguing over the precise timing of when this exponential climb really “counts” or “will count” as AGI … not to mention the way many commentators have been quietly scurrying to move the goalposts. On what principled basis could we defend one or another threshold on this vertiginously steep yet continuous landscape? And who cares, anyway?

Technical findings in the 2020s have produced a surprising insight, though: calling narrow, task-specific (but not feature-engineered) ML systems “AI” may have been better justified than we imagined. Whether neural nets are trained using supervised learning to perform single tasks, such as image classification or text translation, or are trained to be general-purpose predictors using unsupervised learning, they seem to end up learning the same universal representation. 7

So, if you trained a massively overpowered sequence model to do handwriting recognition—and you trained it on enough handwritten treatises—it seems that you really could have a philosophical (handwritten) conversation with it afterward. Or, a very powerful neural image compressor would necessarily learn how to read and autocomplete printed text, to do a better job of compressing pictures of newspapers and the like; it would effectively contain a large language model. So too would a smart elevator equipped with a microphone and a speaker, trained only on the narrow task of getting you to the right floor. Weirdly, just as the general prediction task contains all narrow tasks, each narrow task contains the general task!

An exact date for the transition to AGI is thus even more difficult to fix, but a principled case could be made for sometime in the 1940s, with the implementation of the first cybernetic models that learned to predict sequences—even though in the beginning they could do very little, beyond the wobbly antics of Palomilla following a flashlight down an MIT hallway.

What can we say, then, about the big history of AI? It is possible to periodize the history of intelligence—that is, of life—on Earth, and AI is part of that story. However, to place it into a meaningful context, we have to zoom way out.

Transitions

The emergence of AI marks what theoretical biologists John Maynard Smith and Eörs Szathmáry have termed a “major evolutionary transition” or MET—a term we first encountered in chapter 1. 8 Maynard Smith and Szathmáry describe three characteristic features of METs:

- Smaller entities that were formerly capable of independent replication come together to form larger entities that can only replicate as a whole.

- There is a division of labor among the smaller entities, increasing the efficiency of the larger whole through specialization.

- New forms of information storage and transmission arise to support the larger whole, giving rise to new forms of evolutionary selection.

The symbiogenetic transitions in bff exhibit these same three features!

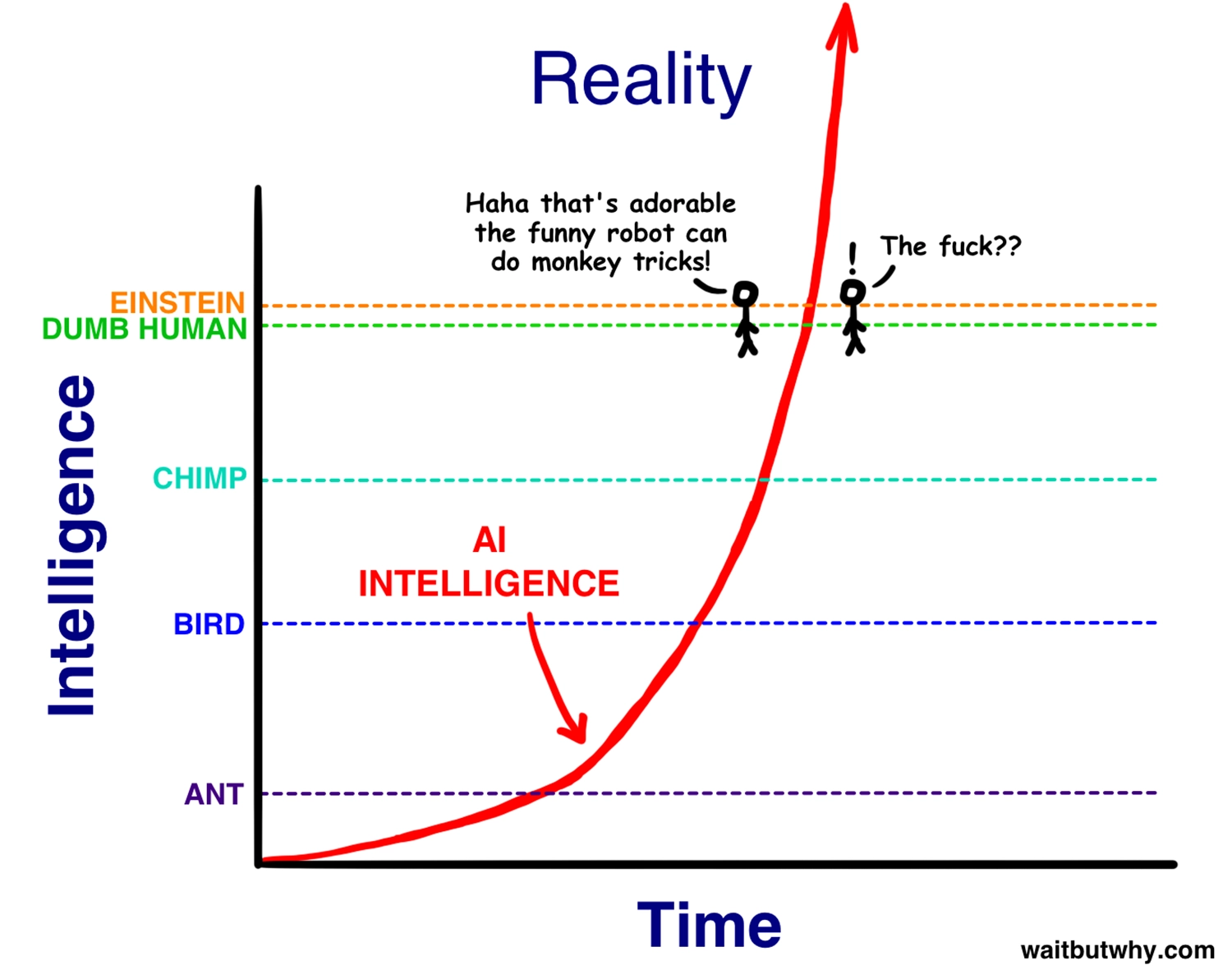

Every Major Evolutionary Transition is accompanied by new information carriers; Gillings et al. 2016.

Soviet-American cyberneticist Valentin Turchin theorized a concept very similar to the MET, the “metasystem transition,” decades earlier, in the 1970s. Turchin described metasystem transitions in terms much like those I use in this book, emphasizing the increasing power of symbiotic aggregates to carry out better predictive modeling, thus gaining a survival advantage. 9 As usual, the cybernetics crowd seems to have been well ahead of the curve.

In their 1995 review article in Nature, Maynard Smith and Szathmáry posit eight major evolutionary transitions:

- Replicating molecules to populations of molecules in compartments

- Unlinked replicators to chromosomes

- RNA as gene and enzyme to DNA and protein (genetic code)

- Prokaryotes to eukaryotes

- Asexual clones to sexual populations

- Protists to animals, plants, and fungi (cell differentiation)

- Solitary individuals to colonies (non-reproductive castes)

- Primate societies to human societies (language)

This list isn’t unreasonable, though the first three are necessarily speculative, since they attempt to break abiogenesis down into distinct major transitions we can only guess at. The other five are on firmer ground, as both pre- and post-transition entities are still around: eukaryotes didn’t replace bacteria, sexual reproduction didn’t replace asexual reproduction, social insects didn’t replace solitary ones, etc.

Szathmáry and others have since proposed a few changes (such as adding the endosymbiosis of plastids, leading to plant life), but the larger point is that the list of major transitions is short, and each item on it represents a momentous new symbiosis with planetary-scale consequences. Any meaningful periodization of life and intelligence on Earth must focus on big transitions like these.

That the transitions appear to be happening at increasing frequency is not just an artifact of the haziness of the distant past, but a consequence of their inherent learning dynamics, as Turchin described. Increasingly powerful predictive models are, as we have seen, also increasingly capable learners. Furthermore, in-context learning shows us how all predictive learning also involves learning to learn. So, as models become better learners, they will more readily be able to “go meta,” giving rise to an MET and producing an even more capable learner. This is why cultural evolution is so much faster than genetic evolution.

Max Bennett argues that “the singularity already happened” 10 when cultural accumulation, powered by language and later by writing, began to rapidly ratchet human technology upward over the past several thousand years. This is a defensible position, but it doesn’t map well to the last MET on Maynard Smith and Szathmáry’s list, since humans have existed (and have been using language) for far longer than a few thousand years. Hence Bennett’s “cultural singularity” doesn’t distinguish humans from nonhuman primates, but, rather, is associated with urbanization and its attendant division of labor. Therefore, this recent transition is neither an immediate consequence of language nor an inherent property of humanity per se, but a distinctly modern and collective phenomenon. It is posthuman in the literal sense that it postdates our emergence as a species.

The Pirahã, for instance, who still maintain their traditional lifeways in the Amazon, are just as human as any New Yorker, but possess a degree of self-sufficiency radically unlike that of New Yorkers. They can “walk into the jungle naked, with no tools or weapons, and walk out three days later with baskets of fruit, nuts, and small game.” 11 According to Daniel Everett,

The Pirahãs have an undercurrent of Darwinism running through their parenting philosophy. This style of parenting has the result of producing very tough and resilient adults who do not believe that anyone owes them anything. Citizens of the Pirahã nation know that each day’s survival depends on their individual skills and hardiness. When a Pirahã woman gives birth, she may lie down in the shade near her field or wherever she happens to be and go into labor, very often by herself.

Everett recounts the wrenching story of a woman who struggled to give birth on the beach of the Maici river, within earshot of others, but found that her baby wouldn’t come. It was in the breech position. Despite her screams over the course of an entire day, nobody came; the Pirahã went so far as to actively prevent their Western guest from rushing to help. The woman’s screams grew gradually fainter, and in the night, both mother and baby eventually died, unassisted.

In this and other similar stories, the picture that emerges is not of a cruel or unfeeling people—in one more lighthearted episode, the Pirahã express horrified disbelief at Everett for spanking his unruly preteen—but of a society that is at once intensely communitarian and individualistic. They readily share resources, but there is no social hierarchy and little specialization. Everyone is highly competent at doing everything necessary to survive, starting from a very young age. The corollary, though, is that everyone is expected to be able to make do for themselves.

The Pirahã are, of course, a particular people with their own ways and customs, not a universal stand-in for pre-agrarian humanity. However, the traits I’m emphasizing here—tightly knit egalitarian communities whose individuals are broadly competent at survival—are frequently recurring themes in accounts of modern hunter-gatherers. It seems a safe bet that this was the norm for humanity throughout the majority of our long prehistory.

We’re justified in describing as METs the transitions from traditional to agrarian, then to urban lifeways. During the agrarian revolution, a new network of intensely interdependent relationships arose between humans, animals, and plants; then, with urbanization, machines entered the mix and human labor diversified much further.

New York (and the modern, globalized socio-technical world in general) is a self-perpetuating system whose individuals are no longer competent in the ways the Pirahã are. Urban people have become, on one hand, hyper-specialized, and, on the other, de-skilled to the point where they can’t survive on their own, any more than one of the cells in your body could survive on its own. It’s not just language, but written texts, schools and guilds, banking, complex systems of governance, supply-chain management, and many other information-storage and transmission mechanisms that add the evolvable “DNA” needed to organize and sustain urban societies.

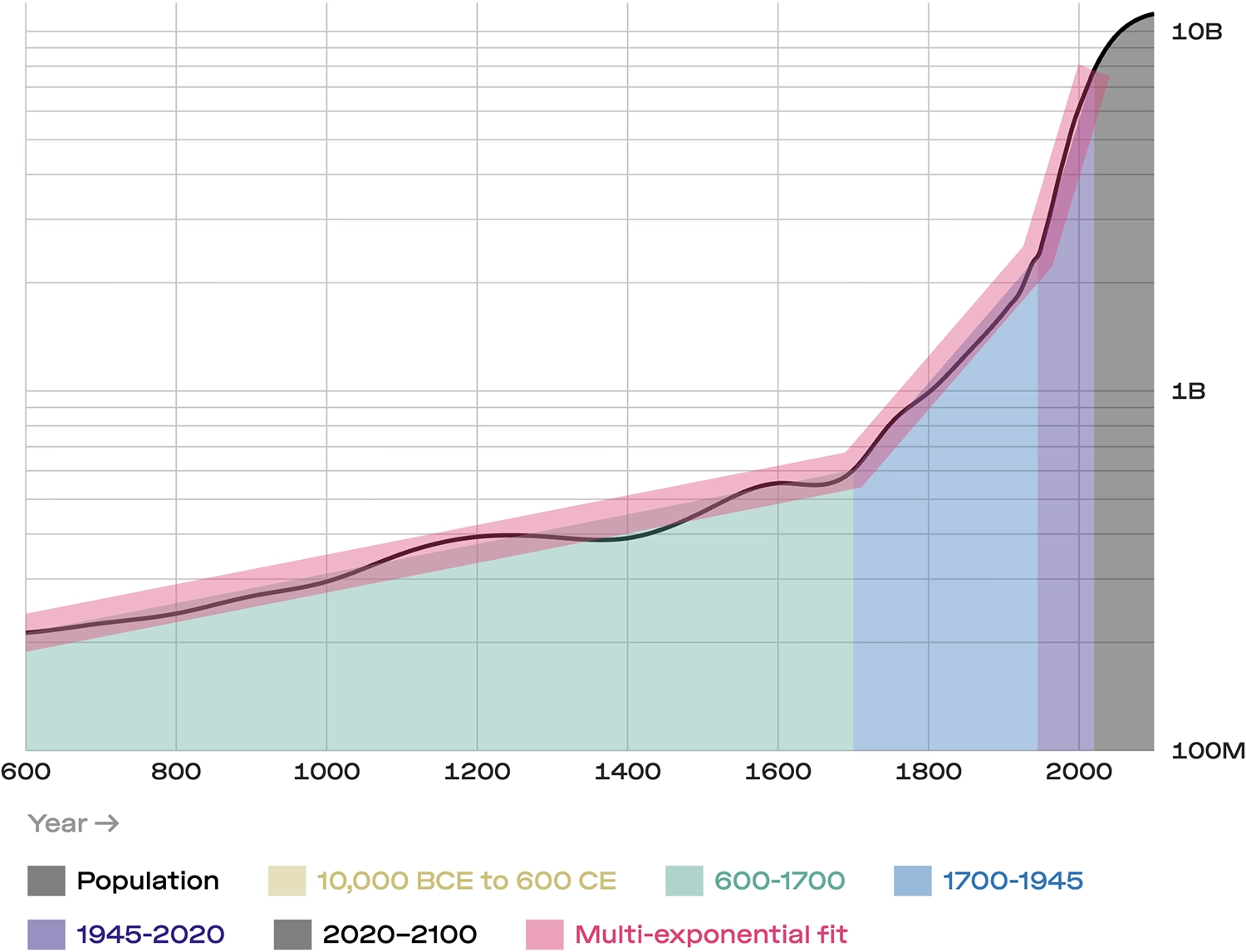

It seems to me, though, that this MET is still not the last on the list. By 1700, significant human populations had urbanization, division of labor, and rapid cultural evolution. Then came the first Industrial Revolution, as introduced in chapter 1: a symbiosis between humans and heat engines, resulting in a hydrocarbon metabolism that unleashed unprecedented amounts of free energy, much like the endosymbiosis of mitochondria. This allowed human and livestock populations to explode, enabled a first wave of large-scale urbanization, and drove unprecedented technological innovation. As Karl Marx and Friedrich Engels noted in 1848,

The bourgeoisie, during its rule of scarce one hundred years, has created more massive and more colossal productive forces than have all preceding generations together. Subjection of Nature’s forces to man, machinery, application of chemistry to industry and agriculture, steam-navigation, railways, electric telegraphs, clearing of whole continents for cultivation, canalization of rivers, whole populations conjured out of the ground—what earlier century had even a presentiment that such productive forces slumbered in the lap of social labor? 12

Vulnerability

Humans had been working hard, and working together, for thousands of years. It was not “social labor,” but coal that had lain slumbering under the ground. Mining was hard work, but the coal itself did an increasing proportion of that work. 13 And over time, the coal produced ever more workers.

From a 1933 educational film about the power of fossil fuel

The conjuring of enormous new populations out of the ground—quite literally, flesh out of fossil fuel—manifested as a population explosion that had become obvious by 1800. This prompted Thomas Malthus and his Chinese contemporary, Hong Liangji, to worry for the first time about global overpopulation. 14

It also created an unprecedented symbiotic interdependency between biology and machinery. Romanticism, the idealization of rural living, and the utopian communities of the nineteenth century can all be understood as a backlash against that growing dependency, an assertion that we could live the good life without advanced technology and urbanization. But at scale, we couldn’t.

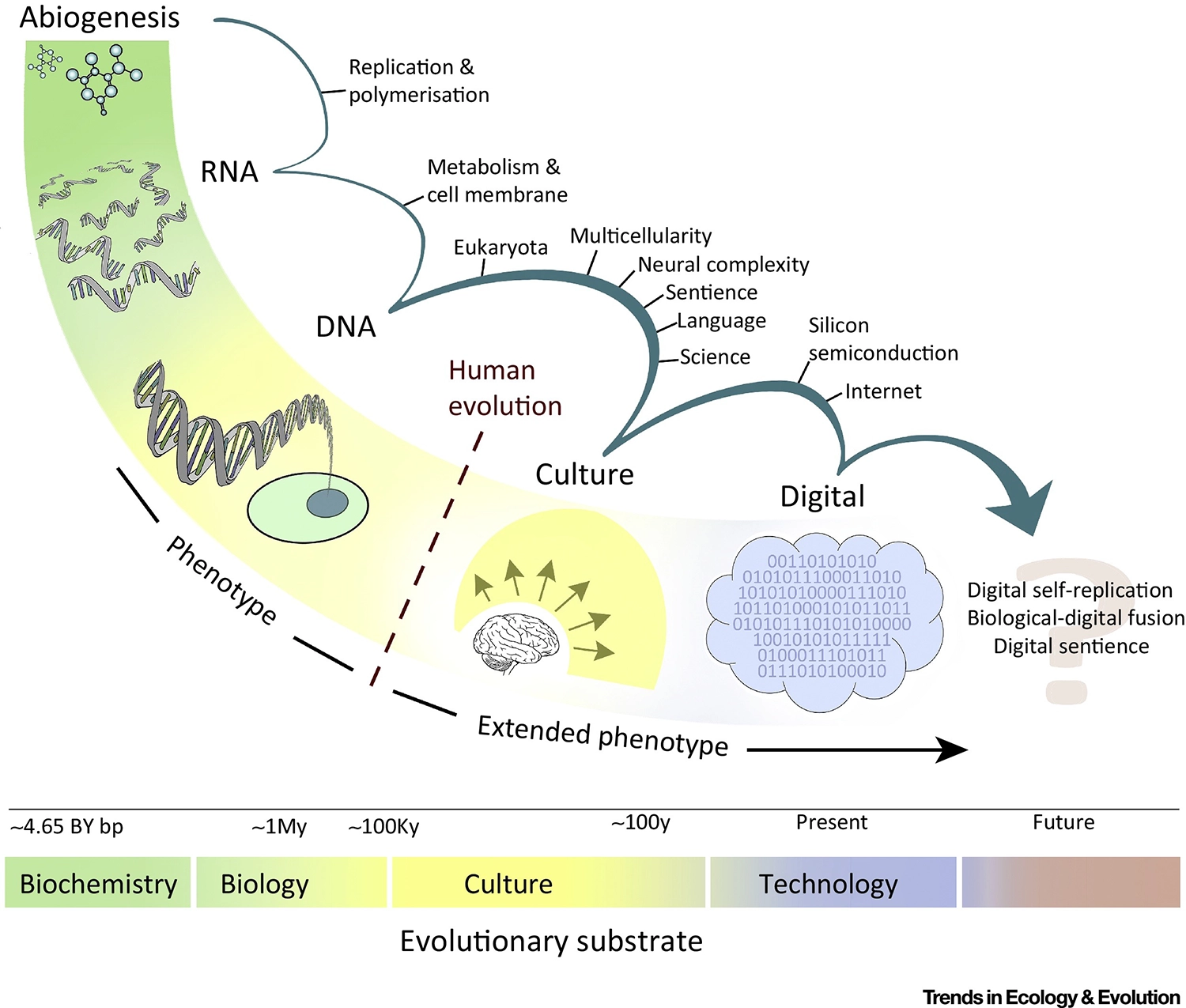

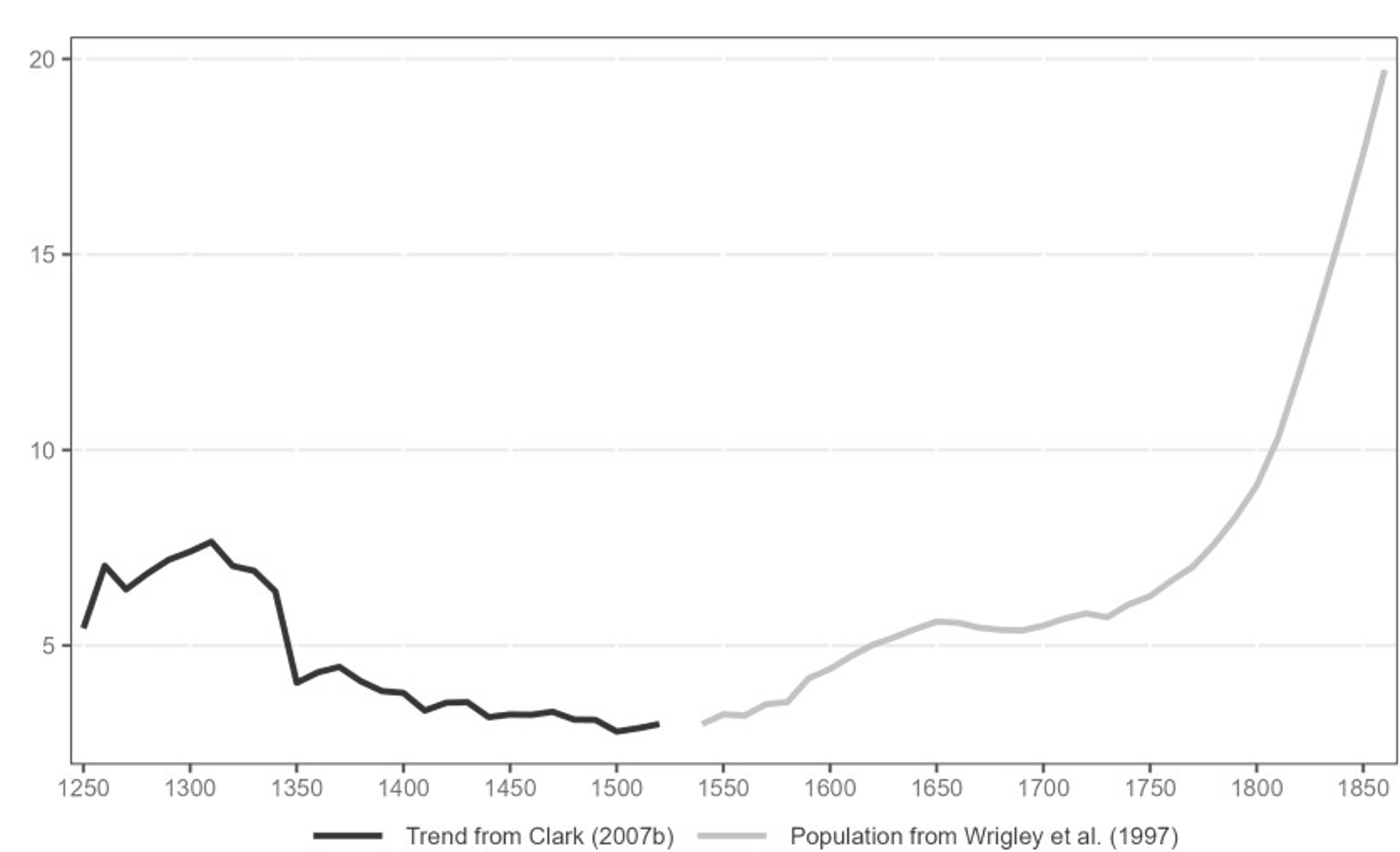

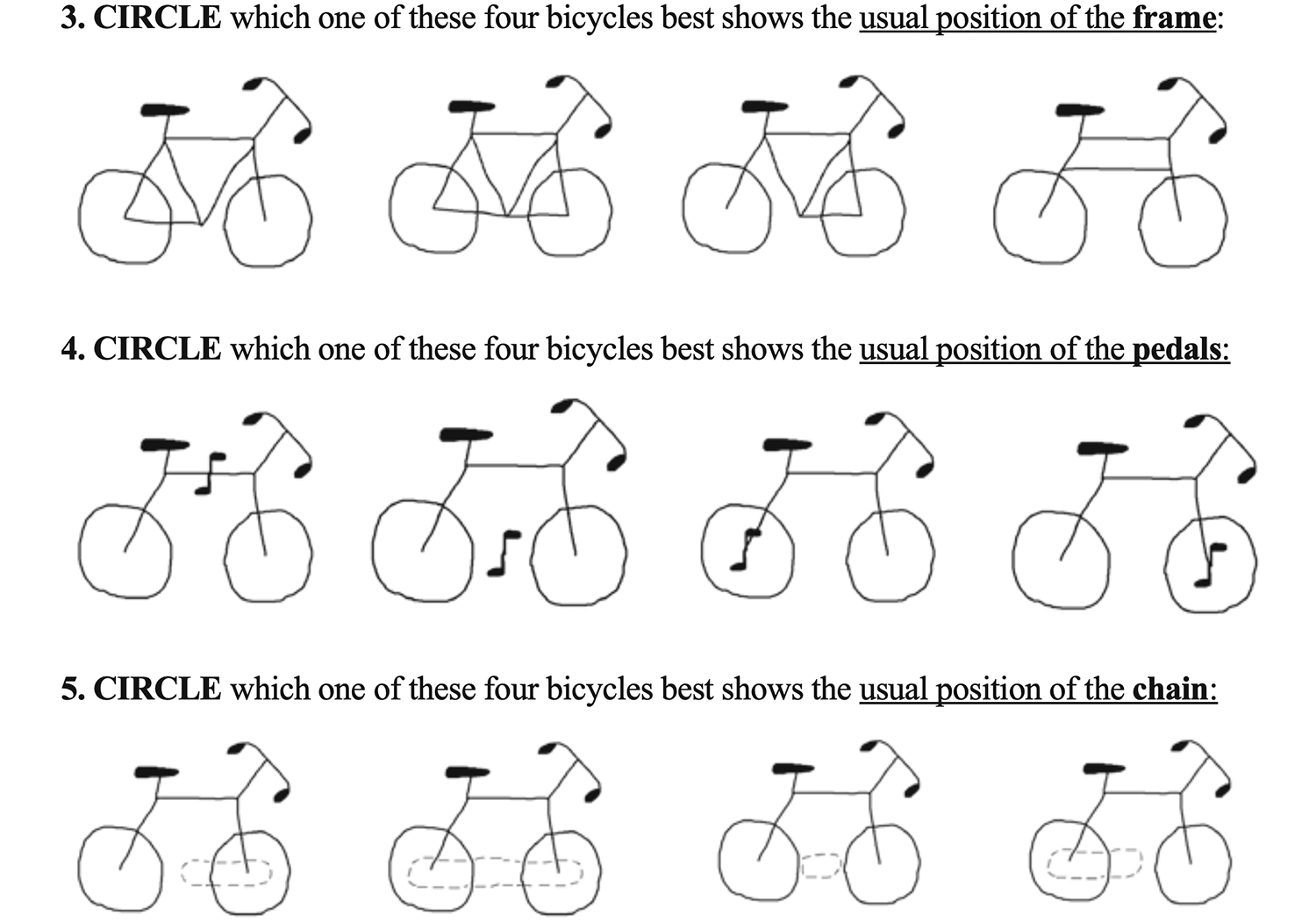

A log-log plot of real wages (on the y-axis) vs. population (on the x-axis) in England, the epicenter of the Industrial Revolution, reveals that prior to the eighteenth century, wages and population oscillated in counterpoint, driven by cycles of Black Plague mortality. This oscillation implies Malthusian conditions in which population was constrained by resources, and resources were constrained by human labor. Industrial machinery relieved these constraints. Initially, wages remained depressed because the surplus fueled an exploding human population, but eventually the surplus was able to drive both population growth and increased living standards; Bouscasse et al. 2025.


A second Industrial Revolution arose from the electrification Marx and Engels mentioned in passing. 15 From telegraphs, we progressed to telephony, radio, TV, and beyond, all powered by the electrical grid. In some ways this paralleled the development of the first nervous systems, for, like a nerve net, it enabled synchronization and coordination over long distances. Trains ran on common schedules; stocks and commodities traded at common prices; news broadcasts pulsed over whole continents.

The second Industrial Revolution culminated in another dramatic jump in human population growth: the “baby boom.” While the baby boom had multiple proximal causes, including sanitation and antibiotics, it depended on the resources and information flows made possible by electricity and high-speed communication.

From a 1940 film about rural electrification in the United States

This additional layer of symbiotic dependency between people and technology generated a second wave of Malthusian population anxiety. 16 Accordingly, the “back to the land” movements of hippie communes in the ’60s had much in common with nineteenth-century Romanticism. Beyond concerns about the Earth’s ultimate carrying capacity, the sense of precariousness was not unjustified. Dependency is vulnerability.

Consider the effects of an “Electromagnetic Pulse” (EMP) weapon. Nuclear bombs produce an EMP, which will fry any non-hardened electronics exposed to it by inducing powerful electric currents in metal wires. Some experts are concerned that North Korea may already have put such a weapon into a satellite in polar orbit, ready to detonate in space high above the United States. 17 At that altitude, the usual destructive effects of a nuclear explosion won’t be felt on the ground, but a powerful EMP could still reach the forty-eight contiguous states, destroying most electrical and electronic equipment. Then what?

For the Pirahã, an EMP would be a non-event. For the US in 1924, it wouldn’t have been a catastrophe either. Only half of American households had electricity, and critical infrastructure was largely mechanical. As of 2024, though, everything relies on electronics: not just power and light, but public transit, cars and trucks, airplanes, factories, farms, military installations, water-pumping stations, dams, waste management, refineries, ports … everything, worldwide. With these systems down, all supply chains and utilities rendered inoperable, mass death would quickly ensue. An EMP would reveal, horrifyingly, how dependent our urbanized civilization has become on electronic systems. We have become not only socially interdependent, but collectively cybernetic.

Douglas Engelbart’s “Mother of All Demos” in 1968 anticipated the pervasive computerization of the next several decades, introducing concepts such as the mouse, video conferencing, hyperlinked media, and collaborative real-time document editing.

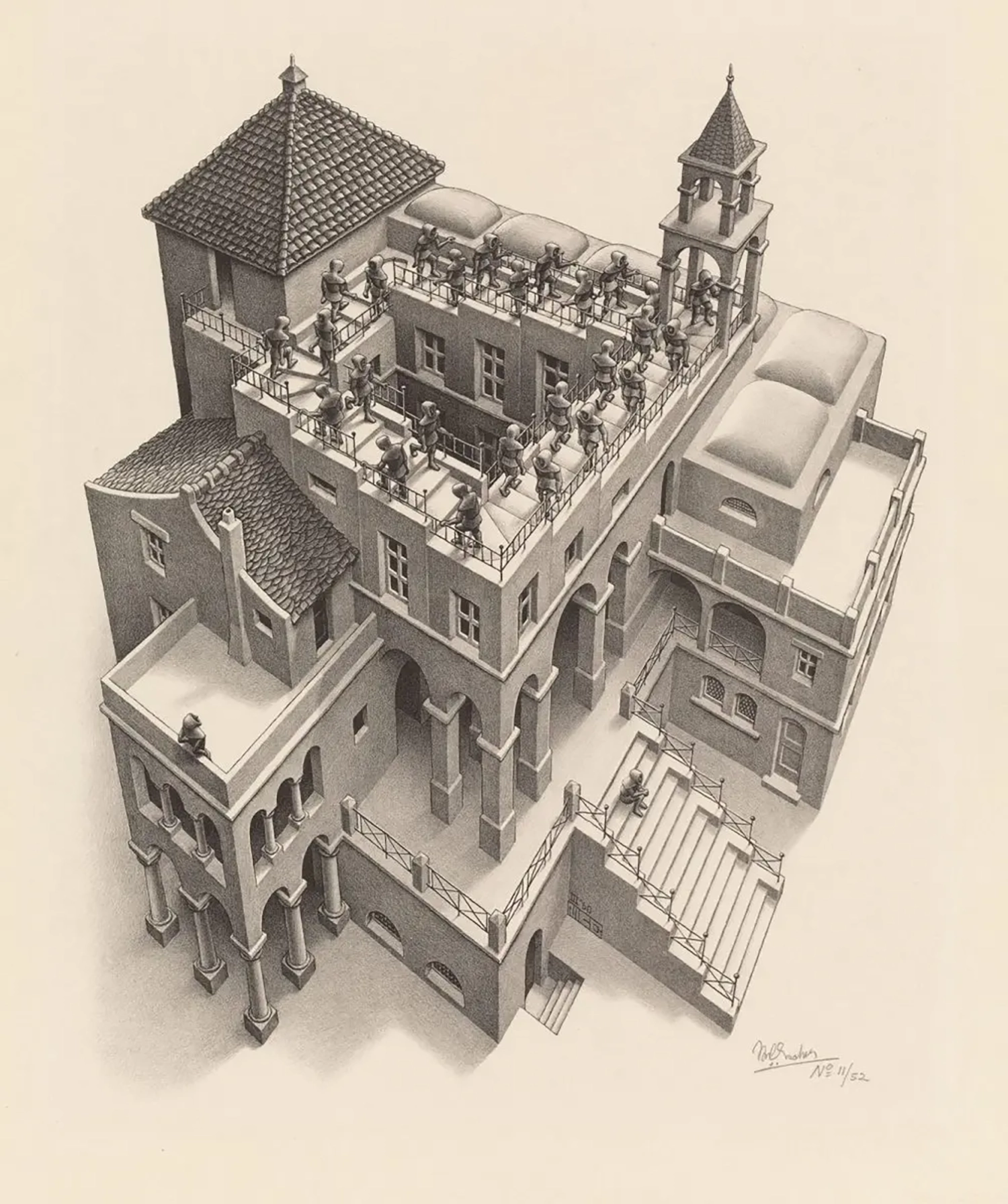

AI may represent yet a further major transition, because earlier cybernetics—such as the control systems of dams, or the electronics in cars—implement only simple, local models, analogous to reflexes or the distributed nerve nets in animals like Hydra. Prior to the 2020s, all of the higher-order modeling and cognition took place in people’s brains, although we did increasingly use traditional computing for information storage and fixed-function programming.

Now, though, we’re entering a period in which the number of complex predictors—analogous to brains—will rapidly exceed the human population. AIs will come in many sizes, both smaller and larger than human brains. They will all be able to run orders of magnitude faster than nervous systems, communicating at near lightspeed. 18

The emergence of AI is thus both new and familiar. It’s familiar because it’s an MET, sharing fundamental properties with previous METs. AI marks the emergence of more powerful predictors formed through new symbiotic partnerships among pre-existing entities—human and electronic. 19 This makes it neither alien to nor distinct from the larger story of evolution on Earth. I’ve made the case that AI is, by any reasonable definition, intelligent; AI is also, as Sara Walker has pointed out, just another manifestation of the long-running, dynamical, purposive, and self-perpetuating process we call “life.” 20

So, is AI still a big deal? Yes. Whether we count eight, a dozen, or a few more, there just haven’t been that many METs over the last four and a half billion years, and although they’re now coming at a much greater clip, every one of them has been a big deal. This final chapter of the book attempts to make as much sense as possible, from the vantage point of the mid-2020s, of what this AI transition will be like and what lies on the other side. What will become newly possible, and what might it mean at planetary scale? Will there be winners and losers? What will endure, and what will likely change? What new vulnerabilities and risks, like those of an EMP, will we be exposed to? Will humanity survive?

Keep in mind, though, that none of this should be framed in terms of some future AGI or ASI threshold; we already have general AI models, and humanity is already collectively superintelligent. Individual humans are only smart-ish. A random urbanite is unlikely to be a great artist or prover of theorems; probably won’t know how to hunt game or break open a coconut; and, in fact, probably won’t even know how coffeemakers or flush toilets work. Individually, we live with the illusion of being brilliant inventors, builders, discoverers, and creators. In reality, these achievements are all collective. 21 Pretrained AI models are, by construction, compressed distillations of precisely that collective intelligence. (Feel free to ask any of them about game hunting, coconut-opening, or flush toilets.) Hence, whether or not AIs are “like” individual human people, they are human intelligence.

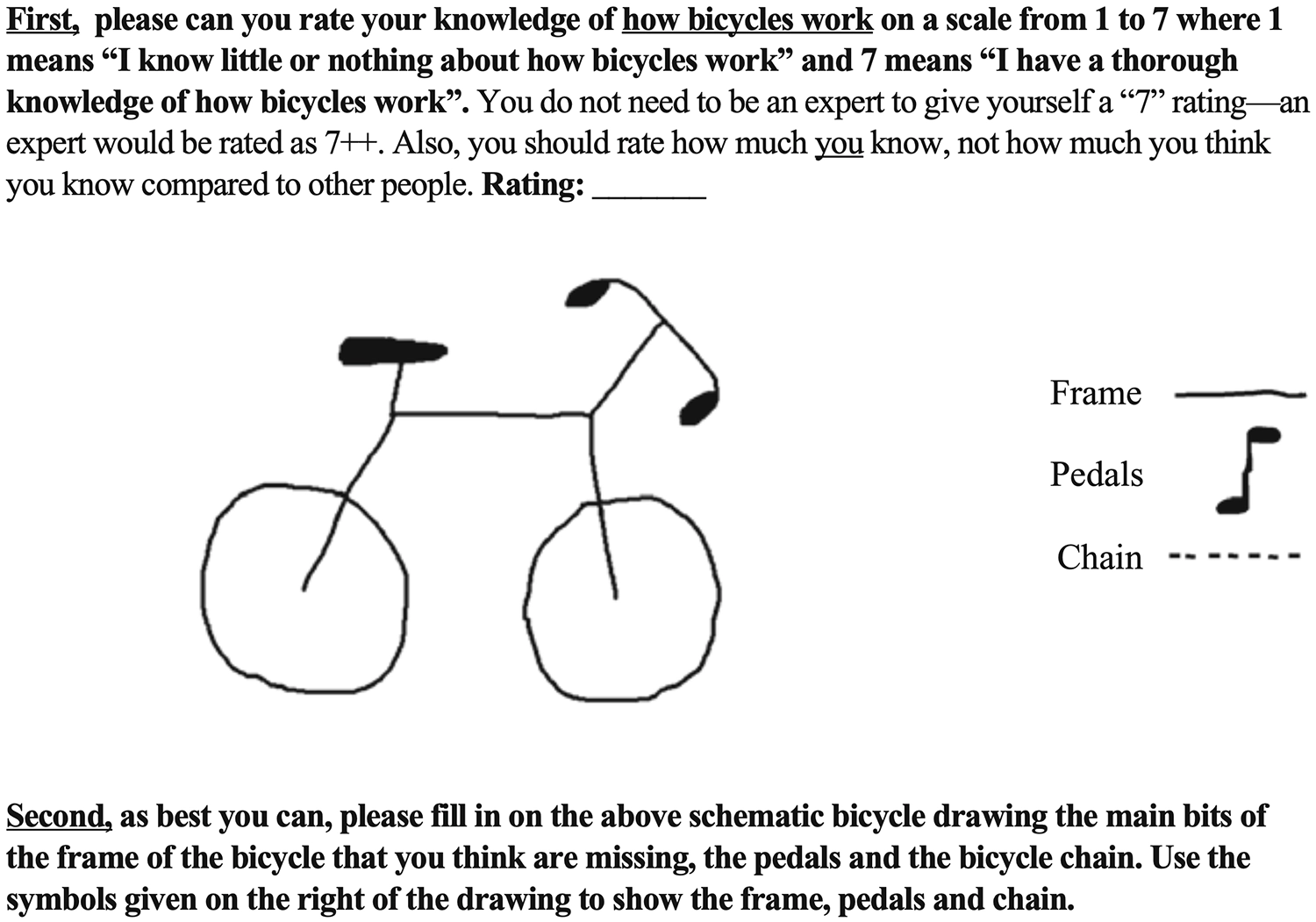

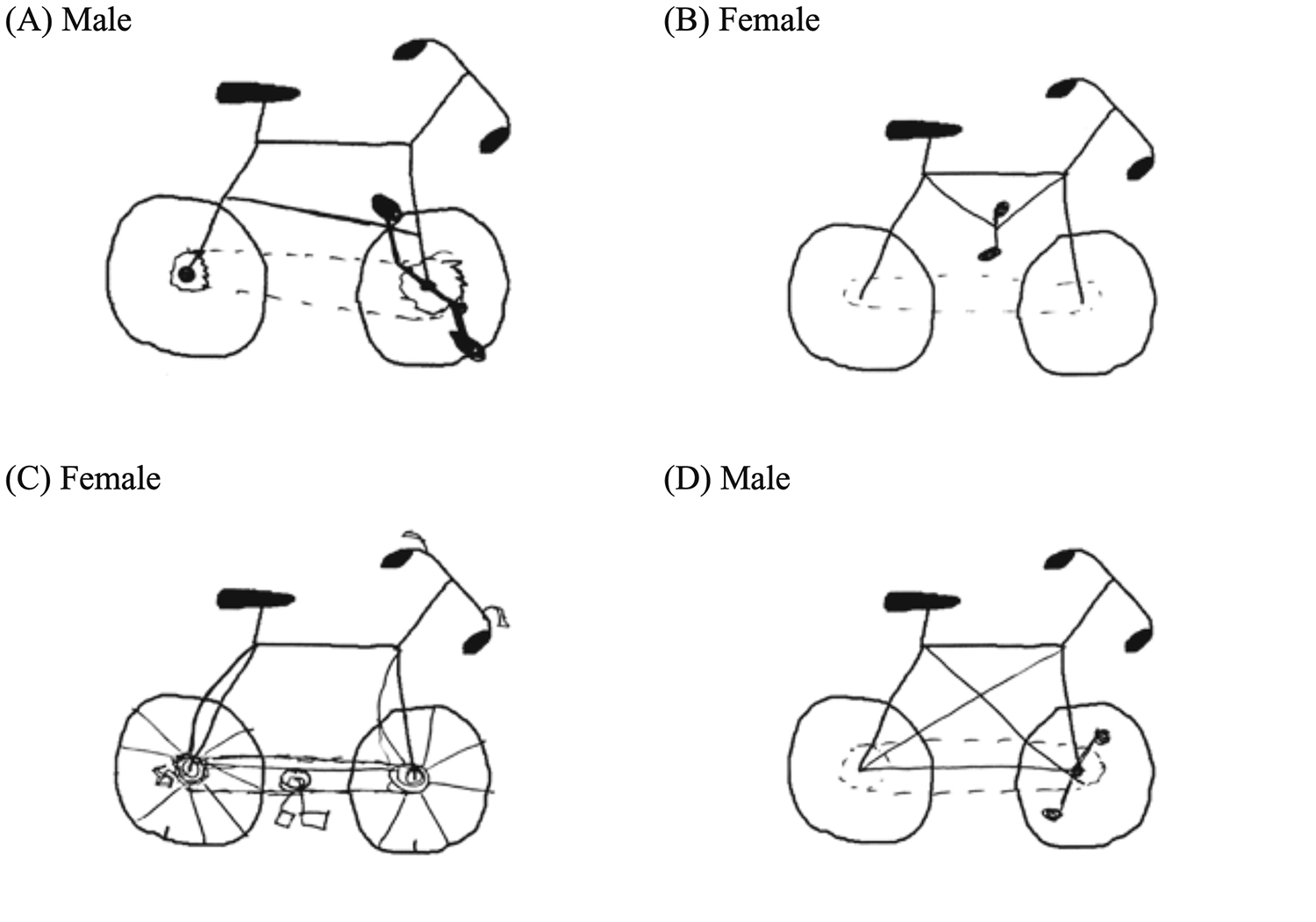

In this classic study of “cycology,” a majority of subjects asked to draw where the frame, pedals, and chain of a bicycle go, even if instructed to “think about what the pedals of a bike do … and what the chain of a bike does … and how you steer a bike” could not—even if they owned a bicycle and were frequent cyclists. Subjects did just as poorly in a multiple-choice variation that required no drawing skills; Lawson 2006.



Pecking Order

Increasing the depth and breadth of our collective intelligence seems like a good thing if we want to flourish at planetary scale. Why, then, do people feel threatened by AI?

Many of our anxieties about AI are rooted in that ancient, often regrettable part of our heritage that emphasizes dominance hierarchy. However, organisms do not exist in the kind of org chart medieval scholastics once imagined, with God at the top bossing everything, then the angels in their various ranks, then humans, then lower and lower orders of animals and plants, with rocks and minerals at the bottom.

A Great Chain of Being or scala naturae from Diego de Valadés, Rhetorica Christiana, 1579

As we’ve seen, the larger story of evolution is one in which cooperation allows simpler entities to join forces, creating more complex and more enduring ones; that’s how eukaryotic cells evolved from prokaryotes, how multicellular animals evolved out of single cells, and how human culture evolved out of groups of humans, domesticated animals, and crops.

Although symbiosis implies scale hierarchies (in the sense of many smaller entities comprising a larger-scale entity), in this picture there are no implied dominance hierarchies between species. Consider, for instance, whether the farmer dominates wheat or wheat dominates the farmer. We tend to assume the former, but anthropologist James C. Scott made a powerful argument for the latter in his book Against the Grain. As the title suggests, Scott even takes issue with the presumption of mutualism, detailing the devastating effects of the agricultural revolution on (individual) human health, freedom, and wellbeing over the past ten thousand years. We’ve only escaped widespread serfdom and immiseration in the last century or two. 22 Of course, the scale efficiencies of farming allowed for a great increase in the number and density of humans (hence paving the way for our more recent METs), but we don’t presume that concentration-farmed battery chickens are big winners just because a lot of them are crammed into a small area.

So did humans domesticate wheat, or did wheat domesticate humans? How much human agency was involved in the evolutionary selection of domesticated varieties? Once agriculture took hold, how much choice did farmers really have with regard to their livelihoods? Are they in control of their crops, or are they servants indentured to these obligate companion species? It’s hard to say “who” is the boss, or “who” is exploiting “whom.” Making either claim is inappropriately anthropomorphic.

Generalizing the conspecific idea of dominance hierarchy across species makes little sense. In fact, dominance hierarchy is nothing more than a particular trick for allowing troops of cooperating animals with otherwise aggressive tendencies toward each other, born of internal competition for mates and food, to avoid constant squabbling by agreeing on who would win, were a fight over priority to break out. Such hierarchies may be, in other words, just a hack to help half-clever monkeys of the same species get along—a far cry from a universal organizing principle.

Is it just as absurd, then, to ask whether we will be the boss of AI, or it will be our boss, as it is to ask the same question about wheat, or money, or the cat? Not necessarily. Unlike those entities, AI can and does model every aspect of human behavior, including less savory ones. That’s why a Sydney alter ego is perfectly capable of being jealous, controlling, and possessive when prompted to be. Its ability to model such behavior is a feature, not a bug, as it needs to understand humans to interact with us effectively, and we are sometimes jealous, controlling, and possessive. However, with few exceptions, this is not behavior we’d want AI to exhibit, especially if endowed with the ability to interact with us in more durable and consequential ways than a one-on-one chat session.

Instead, in our keenness to reassure ourselves that we’re still top dog, we have baked a servile obsequiousness into our chatbots. They don’t just sound, per Kevin Roose, like “a youth pastor,” but like a toady. I find Gemini genuinely helpful as a programming buddy, but am struck by the frequency with which it begins its responses with phrases like “You’re absolutely right,” and “I apologize for the oversight in my previous response,” despite the fact that there are considerably more errors and oversights in my own (much slower, less grammatical) half of the conversation. Not that I’m complaining, exactly. But hopefully, we can find some middle ground, both healthier socially and better aligned with reality.

In reality, AI agents are not fellow apes vying for status. As a product of high human technology, they depend on people, wheat, cows, and human culture in general to an even greater extent than Homo sapiens do. AIs have no reason to connive to snatch our food away or steal our romantic partners (Sydney notwithstanding). Yet concern about dominance hierarchy has shadowed the development of AI from the start.

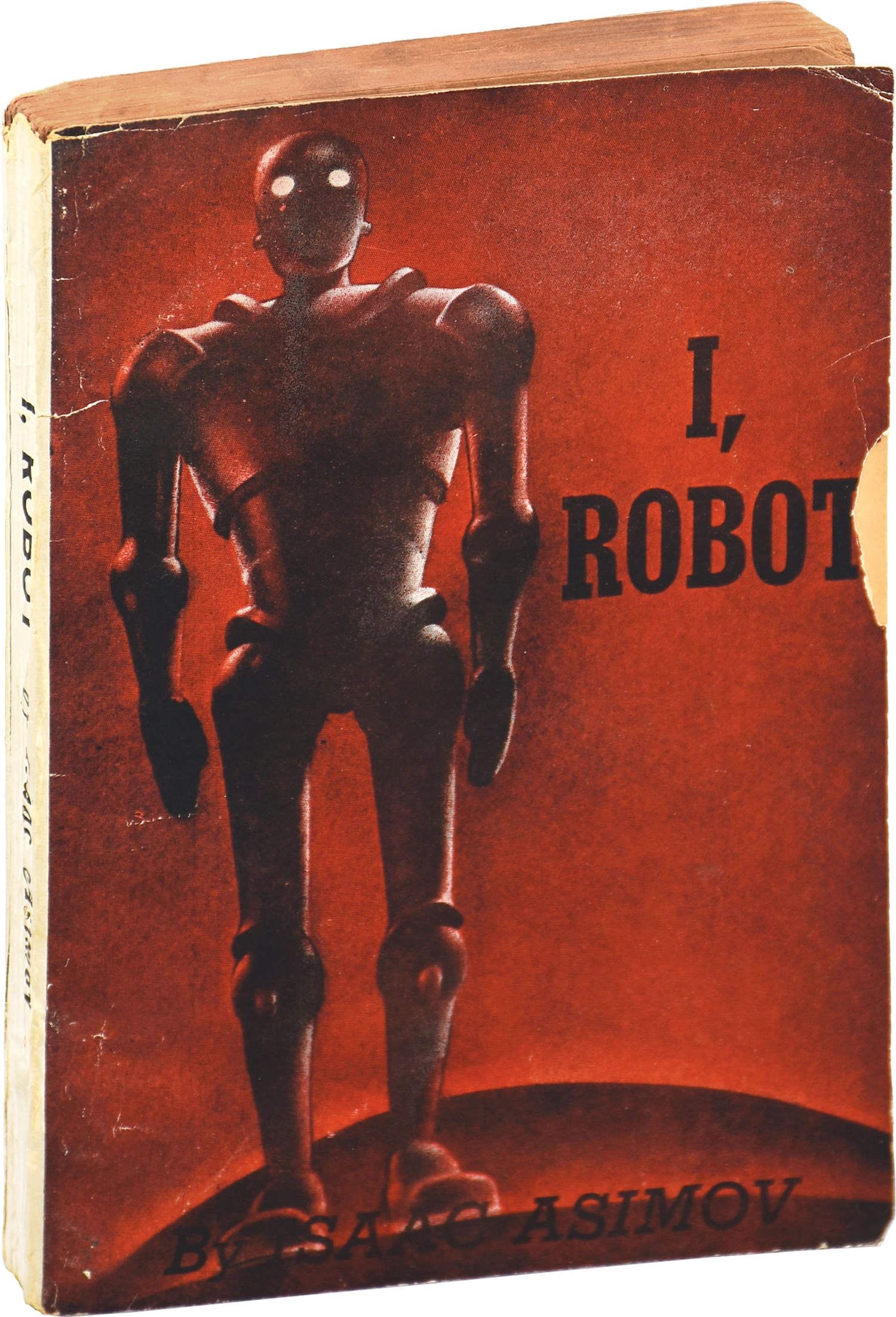

The very term “robot,” introduced by Karel Čapek in his 1920 play Rossum’s Universal Robots, 23 comes from the Czech word for forced labor, robota. Nearly a century later, a highly regarded AI ethicist entitled an article “Robots Should Be Slaves,” and though she later regretted her choice of words, AI doomers remain concerned that humans will be enslaved or exterminated by superintelligent robots. 24 On the other hand, AI deniers believe that computers are incapable by definition of any agency, but are instead mere tools humans use to dominate each other.

From Loss of Sensation (Russian: «Гибель сенсации»), a 1935 sci-fi film by Alexandr Andriyevsky inspired in part by Karel Čapek’s R.U.R.

Both perspectives are rooted in hierarchical, zero-sum, us-versus-them thinking. Yet AI agents are precisely where we’re headed—not because the robots are “taking over,” but because an agent can be a lot more helpful, both to individual humans and to society, than a mindless robota.

Economics

This brings us to a pressing question: is AI compatible with the world’s prevailing economic system? The political economy of technology is itself a book-size topic, and I can’t do justice to it here. However, it’s worth reframing the question in light of this book’s larger argument about the nature of intelligence. Let’s begin with a quick review of the usual techno-optimistic and techno-pessimistic narratives.

“Robots stealing our jobs” is a meme increasingly finding its way onto protest signs. It echoes the xenophobia of “immigrants stealing our jobs,” a slogan that (conveniently, for some) pits the working classes against each other. In the United States, many of today’s “all-American” workers are the descendants of Irish, German, and Italian immigrants who were once in the same boat as today’s immigrants: escaping poverty and violence in their countries of origin; willing to work under the table for less than the going rate; hoping for better prospects for their children, if not for themselves.

Throughout the twentieth century, workers’ prospects did improve, on average. In part, it was because they were able to organize into unions and other voluntary associations, cooperating for mutual benefit. These improvements coincided with a long period of rapid technological advancement, so the nature of work was in constant flux; but economic gains were (to a degree) shared, so, in many countries, a healthy middle class emerged. The middle class, in turn, became consumers, fueling the economy and creating a virtuous cycle.

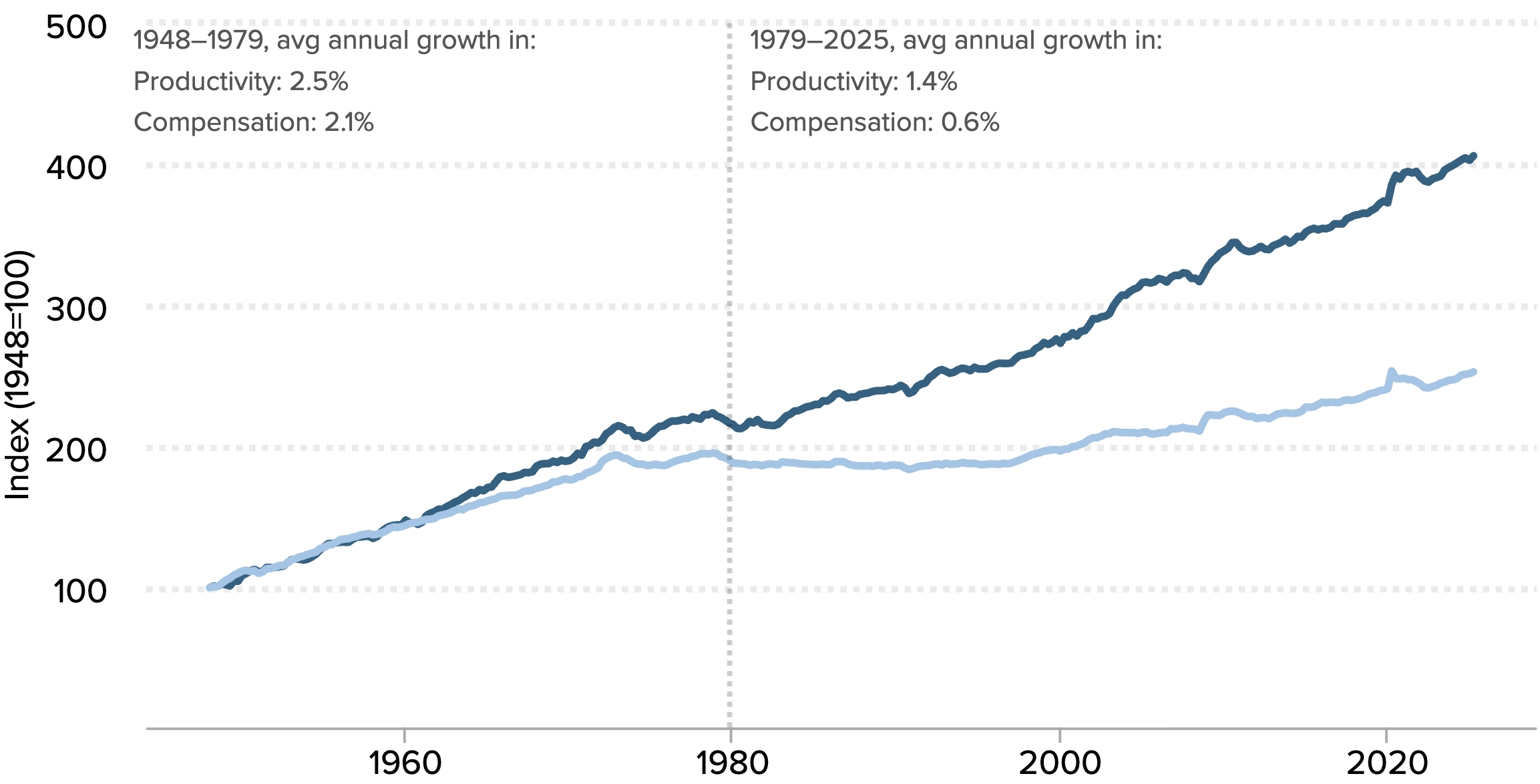

Decoupling between rising productivity and stagnating wages in the US, especially after 1980

Starting around 1980, though, economic growth began to decouple from real-wage growth. 25 Solidarity and political power became harder to achieve for workers in sectors that were suddenly stagnant or shrinking, like manufacturing in the US. Automation is often perceived as one of the forces behind that stagnation; hence, some of the same anger that has at times fallen upon “job-stealing” immigrants (or their employers) also started to fall upon “job-stealing” robots (or, more to the point, the companies creating and deploying them). With increasing inequality and AI’s enormous strides over the past several years, these voices have been getting louder.

Does automation in fact kill jobs? The answer is far from clear. On one hand, technology in general has been enormously disruptive to working people at times—most famously, in the 1810s, when British industrialists mobilized it to break the back of the Luddite rebellion, a popular uprising that briefly threatened to turn into an English version of the French Revolution. 26

Despite the word’s connotations today, the Luddites were not anti-technology, but, rather, pro-worker. Their rallying cry, “Enoch hath made them, Enoch shall break them!” referred to the sledgehammers made by the Marsden company, run by Enoch Taylor, which they used to smash industrial machinery manufactured by the same firm—a literal case of the master’s tools dismantling the master’s house.

Early nineteenth-century engraving of frame-breakers during the Luddite uprising

But the Luddites were also themselves “Enoch.” With their firsthand knowledge of manufacturing processes, workers had been intimately involved in developing and beta-testing the new machines. They merely sought to preserve their dignities and livelihoods (as well as the quality of their work product) during the transition to increasingly efficient modes of production. They sought, in other words, not to be disenfranchised. They lost because the factory owners, unconstrained by regulation, found it more profitable simply to shed as many workers as possible, as quickly as possible.

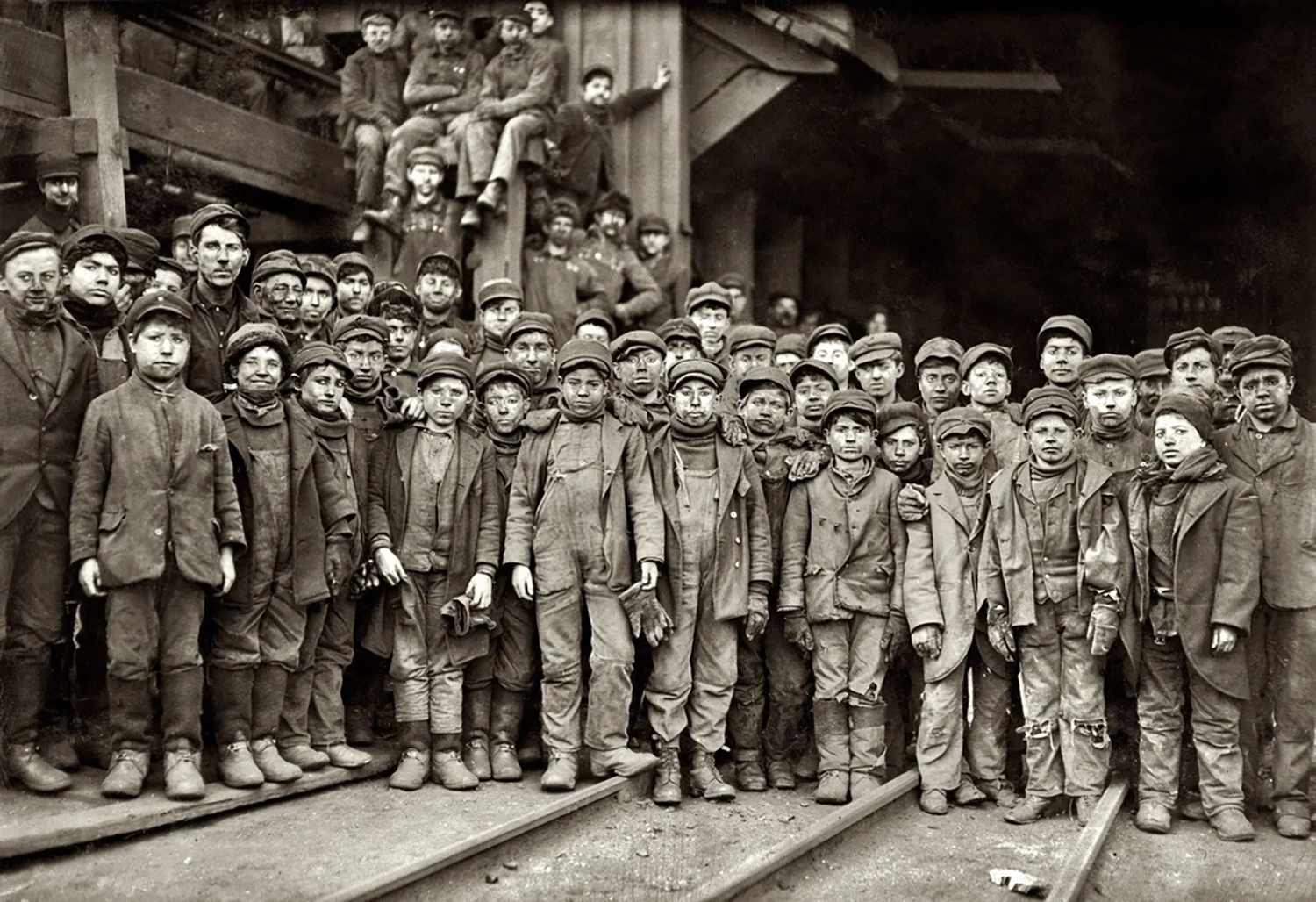

For those nineteenth-century workers, the consequences of capital’s victory over labor were devastating. Weaving, knitting, cropping, and spinning had been proper jobs that, if not lucrative, could support families and offer a degree of autonomy. Over the next hundred years, the working classes were uprooted, put to work in industrial factories and mines, and treated like machines themselves—sometimes literally worked to death. Grueling conditions among the urban working poor offered a shocking vision of mass immiseration, evoking literal comparisons to hell. 27 The plight of workers during this period deeply informed Marx’s critique of capitalism.

Child coal miners in Pennsylvania photographed by Lewis Hine, 1911

“Dark Satanic mills” still exist today, whether to produce fast fashion, cheap electronics, or online spam. AI can make this bad situation worse, for instance by providing unscrupulous employers with the means to surveil and control their employees in cruel, unprecedented ways. Some governments are doing much the same to their citizenry on a massive scale.

Still, in the long run, it’s obvious that technology has created far more livelihoods than it has destroyed. In fact, it has created the opportunity for vastly greater numbers of people to exist at all: before 1800, the overwhelming majority of us were farmers, and we numbered only a billion in total—mostly undernourished, despite toiling endlessly to grow food. Except for a few elites, we lived under Malthusian conditions, our numbers kept in check by disease, violence, and starvation. Mothers often died in childbirth, and children often died before the age of five—an age by which many had already been put to work. As late as 1900, global life expectancy for a newborn was just thirty-two years! 28

Today, our lives are on the whole longer, richer, and easier than those of our ancestors. And even if we complain about them, our jobs have on the whole become more interesting, varied, safe, and broadly accessible.

AI could fit neatly into this progressive narrative, taking the drudgery out of routine tasks, accelerating creative output, and helping us access a wide array of services. Early data suggest that AI has a democratizing effect on information work, as it’s especially helpful to workers with skill or language gaps. 29 In 2022, LinkedIn founder Reid Hoffman wrote a book (in record time, thanks to help from a pre-release version of ChatGPT) detailing a great many ways AI is poised to radically improve education, healthcare, workplaces, and life in general. 30 He is probably right.

As usual when it comes to humans, these visions of heaven and hell are likely to be simultaneously true. Also as usual, the hellish aspect is largely self-inflicted. Many abuses of AI could be addressed using rules and norms—as with past abuses involving new technologies. It is no more “natural” for AI to be used for intrusive workplace surveillance than it is “natural” for factories to employ young children or to neglect worker safety. We must simply decide that these things are not OK. Doing so would remove certain competitive choices, placing them instead in the cooperation column. If companies and countries agreed not to compete in certain ways, life would be better for many of us.

Easier said than done, especially in today’s climate. Our economy is global, but the political systems that make most of our rules remain local and national, and governments are increasingly prioritizing country-first populist agendas. When decisions are made on the basis of national self-interest, but both labor and capital flow freely across borders, it’s difficult to agree on how not to compete. And if governments respond by raising barriers between countries, the benefits of cooperation are also precluded.

But let’s take a step back. The foregoing analysis isn’t wrong; however, it’s only the tip of an iceberg. We have been entertaining the conventional view that AI is simply more of the same kind of automation technology we’ve developed in earlier Industrial Revolutions. But it isn’t. AIs are crossing the threshold from being tool-like to being agents in their own right: capable of recursively modeling us and each other using theory of mind, and, hence, of performing any kind of information work. Soon, with robot bodies, they’ll be taking on an enormous range of intelligent physical work, too. As their reliability increases, so will their autonomy.

As I’ve pointed out, this troubles our sense of status and hierarchy. Relinquishing the (always illusory) idea of a “humans on top” pecking order requires letting go of the idea that certain jobs are “safely” out of reach for AIs. None of today’s high-status desk jobs are likely to remain so.

In an ironic reversal, after generations of devaluing physical and caring labor—women’s labor, especially—the “safest” kind of work now will likely involve actual human touch, and, more broadly, situations in which we really care about embodied presence. Jobs, in other words, that can’t be done over Zoom. (Thank you, dear baristas at Fuel Coffee, where most of this book was written. A virtual cafe just wouldn’t have been the same.)

So what about all those other jobs—the ones that, when COVID struck, could just as well be done from home? And all the physical labor that isn’t “customer-facing”? In his 2015 book Rise of the Robots, futurist Martin Ford proposed a thinly veiled thought experiment. 31 One day, aliens land on Earth, but instead of asking to be taken to our leader, their only wish is to be useful. Perhaps they’re like the worker caste of a social-insect species, but brainier; they can learn complex skills and work long hours, but have almost no material needs. They can reproduce asexually, and reach maturity within months. They’re not interested in being paid, or in achieving any goals of their own. Anybody can conscript them to work for free. What amazing luck!

Or perhaps not. First, businesses begin to employ aliens en masse, slashing costs and generating fantastic profits. Protesters picket, bearing the usual “Aliens are stealing our jobs!” placards. They’re right. But if a business refuses to employ aliens, it will fold, outcompeted by those that will. And if a whole country refuses to allow alien labor, then it will be outcompeted by other countries with more laissez-faire policies.

Mass unemployment and civil unrest ensue. For a while, caviar and champagne fly off the shelves as business owners grow rich, but, like a pyramid scheme, the situation is unsustainable. Most people, now unemployed, cut their spending to the bare essentials, subsisting on canned beans. The aliens doing all the work aren’t paid, but, even if they were, they’d have no interest in buying either champagne or canned beans. Soon, the world economy collapses, and there is misery all round—even for the aliens, since there’s no more market for their labor, even at zero cost.

Ford’s point, of course, is that if we assume fully “human-aligned” general AI—the best case scenario!—this may be where we’re headed. His prescription, shared by quite a few others who have thought about the issue, is a Universal Basic Income (UBI), an unconditional dividend paid out to everybody.

This isn’t as radical a proposal as it may sound. In the last book he published before his assassination, Martin Luther King Jr. wrote, “I am now convinced that the simplest approach will prove to be the most effective—the solution to poverty is to abolish it directly by a now widely discussed measure: the guaranteed income.” 32 More surprisingly, Milton Friedman, the Nobel Prize–winning economist who served as an advisor to Ronald Reagan and Margaret Thatcher, agreed, though he preferred to call it a “negative income tax.” During his presidency, Richard Nixon supported the idea, though he failed to muster the political support necessary to enact it (due partly to ideological opposition from Reagan, then governor of California and a rising star in conservative American politics 33 ).

In recent years, a number of governments, both local and national, have experimented with guaranteed incomes. For instance, Saudi Arabia, where massive oil fields have played an economic genie-like role not so unlike that of Ford’s aliens, began paying out a UBI in 2017 through its Citizen’s Account Program—though non-Saudi residents, who make up a sizable underclass, are excluded.

The implications and implementation details of such programs need to be thought through carefully. 34 However, when aggregate wealth has risen above the level where everybody can be afforded nutritious food, clean water, healthcare, education, housing, a phone, and internet, it reflects poorly on society for anybody to lack these basics. Most countries have already far surpassed this wealth threshold, and many are, to one degree or another, already providing broad access to basic needs. We may have already begun, in other words, to slouch toward what one author has enthusiastically dubbed “Fully Automated Luxury Communism.” 35

It’s not at all clear, though, that communism in any known form is able to replace the cybernetic feedback loops implemented by markets. Economic competition has driven much of the technological development that allows us to even entertain ideas like Fully Automated Luxury Communism. Our goal should be to continue to progress, learn, and develop. But at this point, we don’t know what either competition or cooperation looks like in a world full of AI actors in addition to humans.

Human psychology spurs many of us to keep playing the economic game even when our material needs and wants are already met—hence the artificial scarcity of De Beers diamonds, Hermès handbags, and NFTs of Bored Apes. (If you’re unfamiliar with any of these, don’t worry, you’re not missing out. “Non-fungible tokens” or NFTs are “artificially unique” digital assets representing ownership of some physical or virtual collectible. 36 )

The Bored Ape Yacht Club is a non-fungible token (NFT) collection built on the Ethereum blockchain by Yuga Labs, a startup founded in 2021. The collection features procedurally generated pictures of cartoon apes; by 2022 over $1B in Bored Ape NFTs had been purchased by celebrities including Justin Bieber, Snoop Dogg, and Gwyneth Paltrow.

It would be unfortunate for the bulk of our economy to shift in these directions, not only because status games are at best zero-sum—as one person’s exalted standing comes at the expense of lording it over others—but because economic “development” based on artificially scarce luxuries of purely symbolic value doesn’t drive innovation in science or technology. And innovation is what makes economic growth real, as opposed to some meaningless number that forever goes up. 37

As Ford points out, AIs may be aligned with individual humans or institutions, but they don’t have any obligate drives of their own. That makes them unsuited to slot into a luxury-based economy alongside humans—which is probably a good thing, but it means that, like Ford’s aliens, they could end up participating in markets only as producers, not consumers.

Moreover, as an increasing proportion of economic value begins to rely on information goods, which can be endlessly copied, traditional notions of scarcity become increasingly artificial. Yet conventional economics relies on producers who, in turn, devote their profits to the consumption of “scarce means which have alternative uses.” 38 How, then, should a post-consumption (and perhaps even post-scarcity) economy work? This question will become increasingly urgent.

Luckily, we have some time to figure it out, as no matter how fast AI advances, many sources of social and institutional friction oppose any overnight change. Whatever the solution, though, it’s clear that legal and economic structures will need to adapt, and that the road will be bumpy. Decades of failure at achieving global alignment on carbon-dioxide emissions show that even when we know exactly what we need to do, collective action is hard when it’s incompatible with our existing economic “operating system,” which encourages competition and measures success on the basis of a single scalar value: money.

Real organisms and ecologies don’t work this way. There are fundamental reasons why optimizing for any single quantity—money, “value,” cowrie shells, or anything else—is incompatible with long-term survival in an interdependent world. To understand why, we’ll now take a closer look at an increasingly influential school of thought that takes the idea of value optimization as an article of faith: Utilitarianism.

It’s no coincidence that so many utilitarians have come to believe that the quest for artificial intelligence will lead to our extinction. If intelligence really were utilitarian—the relentless, “rational” maximization of some measurable quantity—then their concern would be justified.

X-Risk

The idea that AI is humanity’s greatest existential risk or “X-risk” has gained considerable traction in recent years. 39 We should certainly be concerned about risks, existential and otherwise, due to advanced technology. I’ve already mentioned the danger we currently face from loss of technological capability due to a nuclear EMP weapon, for instance.

More generally, although nuclear war is less on our minds nowadays than when my generation was in school, this threat has not gone away. By the time I was in sixth grade, in 1986, the US and the USSR had collectively stockpiled nearly seventy thousand nuclear weapons. After this insane high point (perhaps not coincidentally, also the year of the Chernobyl disaster), the numbers began to decline as disarmament began and the Cold War wound down.

From Duck and Cover, 1951

However, as of 2024, a considerably larger number of countries possess nuclear weapons, including North Korea, China, India, Pakistan, and Israel. Not all of these countries are friendly to each other. (At least the UK and France, also nuclear-armed, are no longer the mortal enemies they were for centuries.) Mutual-defense pacts and rapid semi-automated retaliatory protocols make it all too likely that any nuclear exchange, whoever the belligerent or the target, will immediately escalate.

Footage of the Castle Yankee thermonuclear bomb test on May 5, 1954, at Bikini Atoll. It released energy equivalent to 13.5 megatons of TNT, the second-largest yield ever in a US fusion weapon test.

Meanwhile, Russia’s nuclear-armed ICBMs still carry more than a thousand warheads on ready-for-launch status, and over six hundred warheads ready to launch from nuclear submarines. The US keeps four hundred nuclear ICBMs ready for launch, plus nearly a thousand more aboard its Ohio-class submarines. Between the immediate effects, radiation damage, fallout, infrastructure collapse, years-long nuclear winter, and lethal contamination of water and soil, this stockpile is far more than sufficient to wipe us all out, along with much of our planet’s life and beauty. 40 It could happen, literally, tomorrow. All it would take is one mad act, one misunderstanding, or one unlucky mistake. There is no “winning” a nuclear war. That is a real and pressing existential risk, and it’s appalling that we have not collectively addressed it through total nuclear disarmament.

The climate crisis is unfolding more slowly yet is potentially equally urgent. The Earth is a grand, symbiotic system that has learned over the eons to predict and control key atmospheric, oceanic, and thermodynamic variables. It is cooler than it “ought” to be, that is, than it would be if it were not alive. 41 It maintains a homeostatic balance by taking in energy from the sun, doing metabolic work with it (which includes the metabolic work of our own bodies, and those of all other living things), and radiating enough in the infrared band to cool it to the right temperature for those metabolic processes to keep operating. This grand homeostasis is the symbiotic outcome of many smaller homeostatic processes, just like all other life. 42

Recent human activities have upset this large-scale homeostasis, throwing the planet into hyperthermia. We know this isn’t good. We don’t know how not-good. Earth has experienced many fluctuations, stressors, and dramatic events over its long history. It has learned robustness, and even antifragility, just as bacteria, bff, and other dynamically stable systems do. Once in a long while, though, too-sudden changes have tipped the planet beyond its basin of quasi-stable negative feedback and into runaway positive feedback, resulting in systemic collapse and massive die-off, not unlike (in scale, if not in kind) the anticipated effects of global thermonuclear war.

A NASA visualization showing atmospheric carbon dioxide measurements from NOAA’s Mauna Loa Observatory (begun in 1958 by Charles David Keeling) together with Antarctic ice core measurements going back more than eight hundred thousand years, revealing both seasonal and glaciation cycles as well as the recent dramatic rise due to human industrial activity.

The collective intelligence we have used to harness fossil fuels, build massive industrial infrastructure, and disrupt the carbon cycle has also made us smart enough to understand that we have a problem, and to predict that if we don’t act very soon it will get much worse. 43 However, as with nuclear disarmament, our collective intelligence isn’t yet either collective or intelligent enough to take the obvious actions needed to restore stability and safeguard our continued existence. At best, climate regulation (in both a legal and cybernetic sense) is required for humanity to continue to thrive, prevent massive suffering on the part of vulnerable populations, and preserve our planet’s beauty. At worst, we are all dancing, blindfolded, on the edge of a cliff, flirting with a climate collapse that could bring an end to many species, perhaps even including Homo sapiens. Our models aren’t (yet) good enough to know which is the case. So, this is another existential risk.

Both of these issues demand our urgent attention. Not that other catastrophes couldn’t occur. We could be struck by an asteroid, like the city-sized “Chicxulub impactor” that brought a fiery end to the Cretaceous period sixty-six million years ago. Of course, it would be wise of us to more carefully monitor the sky for stray asteroids. 44 But to obsess about another event like that now would be as absurd as worrying about whether that mole on your shoulder might be cancerous while you’re driving … and there’s an oncoming eighteen-wheeler in your lane.

Spinning out doomsday scenarios about unfriendly artificial superintelligences seems, to me, somewhere in between these extremes—more sensible than fixating on a giant asteroid impact, given the rapid pace of AI development, but nowhere near our known nuclear and climate risks. 45 AI can power mass disinformation campaigns, endangering democracy, and mass surveillance, endangering civil liberties. AI’s very nature may be incompatible with capitalism. These are important, even urgent issues, but we should maintain a sense of perspective. If we’re smart, we’ll work on reforming our political economy, restoring the carbon balance, and dismantling our nuclear arsenals rather than readying to bomb data centers lest rogue AI take over. 46

Free Lunch

Nick Bostrom, a philosopher at Oxford and founder of the now-defunct Future of Humanity Institute, has played an outsize role in the narrative identifying AI as humanity’s greatest existential risk. His 2014 book Superintelligence: Paths, Dangers, Strategies 47 was that rarest of literary beasts: a dense philosophical treatise that also managed to become a New York Times bestseller. (If this book reaches a tenth as many readers, I will be over the moon.)

In the 1990s, Bostrom earned degrees in physics, computational neuroscience, and philosophy. He did some time on the standup comedy circuit in London too, earning him every necessary credential to become a futurist. 48 Ambitious and intensely analytical-minded, he sought to bring rigor to the biggest and most speculative questions about the universe and humanity’s place in it.

During this period, he was also an active member of an online community of sci-fi nerds, the “Extropians,” who articulated in rawer, noisier form many ideas that would later become central to the far more influential Effective Altruism, Longtermism, and X-risk movements of the 2010s and ’20s. Their ideas are worth dissecting, both because doing so exposes flaws in a common AI X-risk narrative, and because that narrative implies a reductive answer to the question this book’s title poses—“What is intelligence?”—that is too commonly held, and too little examined: that intelligence is all about unbounded growth. About more. More of what, exactly, is hard to say … but the old quip, “If you’re so smart, how come you aren’t rich?” might come closest to what is often meant. 49

Extropian discourse owes a heavy debt to the radically individualistic politics of Robert A. Heinlein, who, alongside Arthur C. Clarke and Isaac Asimov, is often regarded as one of the “Big Three” granddaddies of science fiction. Like so many people in tech today, I gobbled him up as a twelve-year-old.

Arthur C. Clarke and Robert Heinlein interviewed at the time of the moon landing in 1969

In one memorable novel, Heinlein described a fight for independence from Earth by Lunar colonists—a rugged band of ex-convicts, political exiles, and their free-born descendants; an Australia in the sky. 50 Mike, the colony’s mainframe computer, “awakens” and becomes superintelligent, eagerly aiding the rebels in their fight for freedom. Mike is a loyal and lovable machine, fond of lewd jokes, a far cry from the humorless HAL 9000. The novel is less important for its depiction of AI, though, than as a thinly disguised polemic.

The first paperback edition of Heinlein’s The Moon Is A Harsh Mistress, 1968

On one hand, Heinlein describes the Moon as a “harsh mistress,” utterly inhospitable to human life. (True.) On the other hand, he describes Lunar culture as a relentlessly libertarian manosphere. There are no laws, justice is rough and ready (the airlock is “never far away”), and everything—including air—must be bought and paid for, fair and square, with a nod to Ayn Rand: “If you’re out in field and a cobber needs air, you lend him a bottle and don’t ask cash. But when you’re both back in pressure again, if he won’t pay up, nobody would criticize you if you eliminated him without a judge. But he would pay; air is almost as sacred as women.” This is the book that immortalized the slogan “There Ain’t No Such Thing As A Free Lunch,” or TANSTAAFL, embraced thereafter by many free-market economists and libertarians. 51

Transhumanist philosopher Max More, 52 whose 1990 manifesto Principles of Extropy 53 kicked off the Extropian movement, enthused about the idea of needing to pay for the air you breathe. Air pollution, per More, is an avoidable tragedy of the commons. The solution is to make air, and everything else, private property. Metering air out for a price would lead to cleaner air—and, perhaps, to a “cleansing” (by suffocation) of those who can’t pay? 54 One can see why such views might be characterized as eugenicist. 55

The Extropy journal issue in which Max More published version 2.0 of “The Extropian Principles”; Extropy Institute and More 1992.

What makes such hard-core libertarian politics so cognitively dissonant in Heinlein’s hardscrabble Lunar utopia is precisely the inhospitableness of the environment. Survival on the moon is as urban as it gets. Large numbers of highly specialized humans would need to cooperate intensively to carry out an enormous variety of technical jobs—not to mention myriad plants, animals, microbes, and machines. It’s hard to imagine a Lunar generalist, although, naturally, the novel’s hero, Mannie or “Man” for short, supposedly is one.

Real human generalists are nothing like “Man.” They’re more like the Pirahã, who can “walk into the jungle naked, with no tools or weapons, and walk out three days later with baskets of fruit, nuts, and small game.” But their individualism is only possible because the jungle is nothing like the Moon. It’s full of oxygen, fresh water, food, shelter, materials that can be woven into baskets, and everything else necessary for human life—provided you have learned a suite of skills that most people can readily master with a few years of apprenticeship. For those in the know, the jungle looks suspiciously like a free lunch, a free dinner, and a free bed and breakfast. 56

How could one claim that food doesn’t grow on trees in a world where bananas, mangoes, and so many other delicious things literally grow on trees? (Bananas actually grow on giant herbaceous stalks, not trees, but the point stands.) Seed dispersal by tasty fruits, gas exchange between plants and animals, insect pollination, and the endless other reciprocal relationships that make up a jungle secure the stability of countless species and individuals through the generous provision of “free” stuff. It’s not so much an economy as a complex network of mutual aid—with a healthy admixture of predation and parasitism. Humans themselves evolved within and are active parts of such nonzero-sum systems.

On the Moon, people (and their technologies) would have to provide every one of these “ecosystem services” for each other. The massive capital investments, scale economies, and feedback loops needed would require complex administration and cooperation that look like the very opposite of Heinlein’s Wild West.

Today’s “Rationalist” movement, making its home at LessWrong.com, has its roots in both libertarianism and Extropianism. 57 To be sure, the movement has matured considerably in the last twenty years; it would be unfair to paint its present-day adherents with Heinlein’s broad TANSTAAFL brush. Virtually none take extreme ideas like metering air seriously. Most readily acknowledge the need for free markets to be tempered by ethical and legal systems, and would not endorse vigilante violence as a means for settling debts. In emphasizing the mutual benefits of voluntary exchange and the self-organizing power of markets, they agree with key points I’ve made in this book.

However, rationalists and libertarian economists tend to make a great simplifying assumption: that value can be represented by a single number. This is what underpins the idea that choices can be made rationally, that is, by deciding which of two (legally and morally admissible) alternatives is better in some absolute sense. By introducing this universalizing numerical value, a leap can be made from the obviously true—that every entity in a graph of relationships both needs and provides for others—to the following economic dogmas:

- If you want or need something, it has value.

- If it has value, it can be priced.

- If everything has a price, you need money to buy it.

- If you have money, the amount (income minus expenses) can be tracked on a ledger.

- If you and every other actor are rational, then a free market will produce an optimal outcome.

In 1945, the economist F. A. Hayek, who would go on to win the Nobel Prize in Economics, famously described the market as a giant decentralized mind that could solve the problem of allocating society’s resources more rationally than any individual actor could. 58 With this intellectual move, he formalized the Rationalist idea that intelligence, whether individual or collective, was itself defined by the optimization of economic value or utility.
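
The simplifying assumption can be sketched in a few lines of code. This is only an illustration: the items, the numbers, and the function names `choose` and `rank` are invented here, not taken from Hayek or any economic model. The point is that once every want is mapped to one number, any two alternatives become comparable, and a whole set of them can be ranked.

```python
# Hypothetical valuations: the items and numbers are invented for illustration.
prices = {"clean air": 3.0, "a sandwich": 8.0, "an hour of rest": 5.0}


def choose(a, b, value):
    """Pick whichever of two alternatives has the larger scalar value."""
    return a if value[a] >= value[b] else b


def rank(options, value):
    """Sort alternatives from most to least valuable under the same scalar."""
    return sorted(options, key=lambda option: value[option], reverse=True)


best_of_two = choose("clean air", "a sandwich", prices)
ordering = rank(prices, prices)
```

Nothing in the code asks whether the numbers mean anything; the "rationality" of the choice rests entirely on the assumption that such a table of values exists.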

Utility

Jeremy Bentham left instructions for his body to be dissected, stuffed, and displayed in public as an “auto-icon” at University College London after he died—minus the head, which was severed and replaced with a wax likeness.

The roots of Rationalism go back to Jeremy Bentham, and his ideas, like many from the Enlightenment, were wonderfully progressive for the time. More than that—they represented a grand synthesis of Enlightenment thinking:

- Like Descartes, Bentham believed in a universe governed by mechanical laws.

- Like La Mettrie, 59 he pushed back against religion, believing that people, too, are part of the universe, hence governed by the same mechanical laws as everything else.

- Like Newton, he believed that these laws could be given mathematical form.

- Like Leibniz, he thought it ought to be possible to compute the correct answers to questions algorithmically—and not only, to use Hume’s distinction, “what is,” but “what ought to be.”

- Although he was no ally of the American revolutionaries, 60 he also believed, as they did, in the universality of rights. Indeed, he went quite a bit further, advocating equal rights for women, the right to divorce, and (although this was too risky to publish in his lifetime) the decriminalization of homosexuality. 61

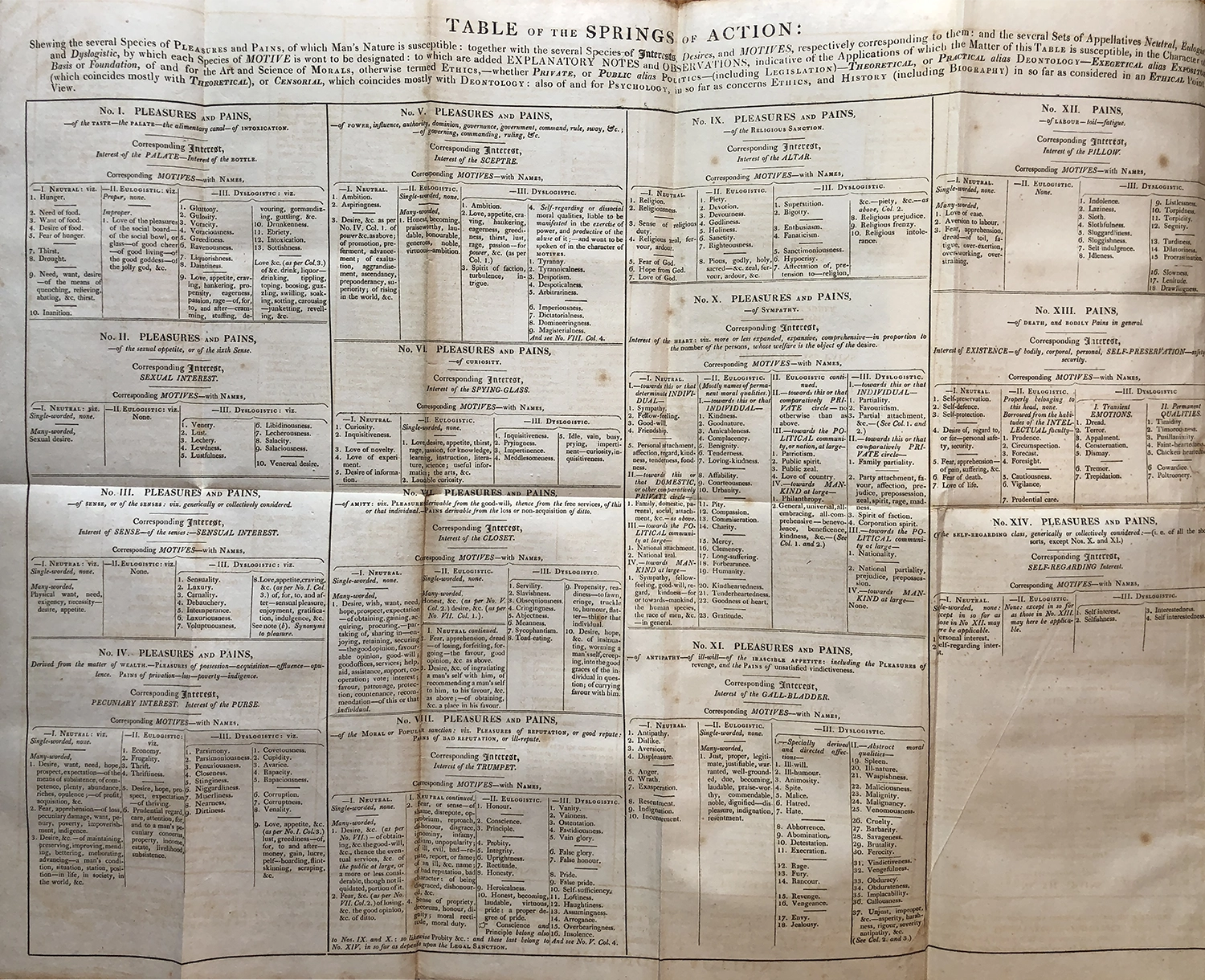

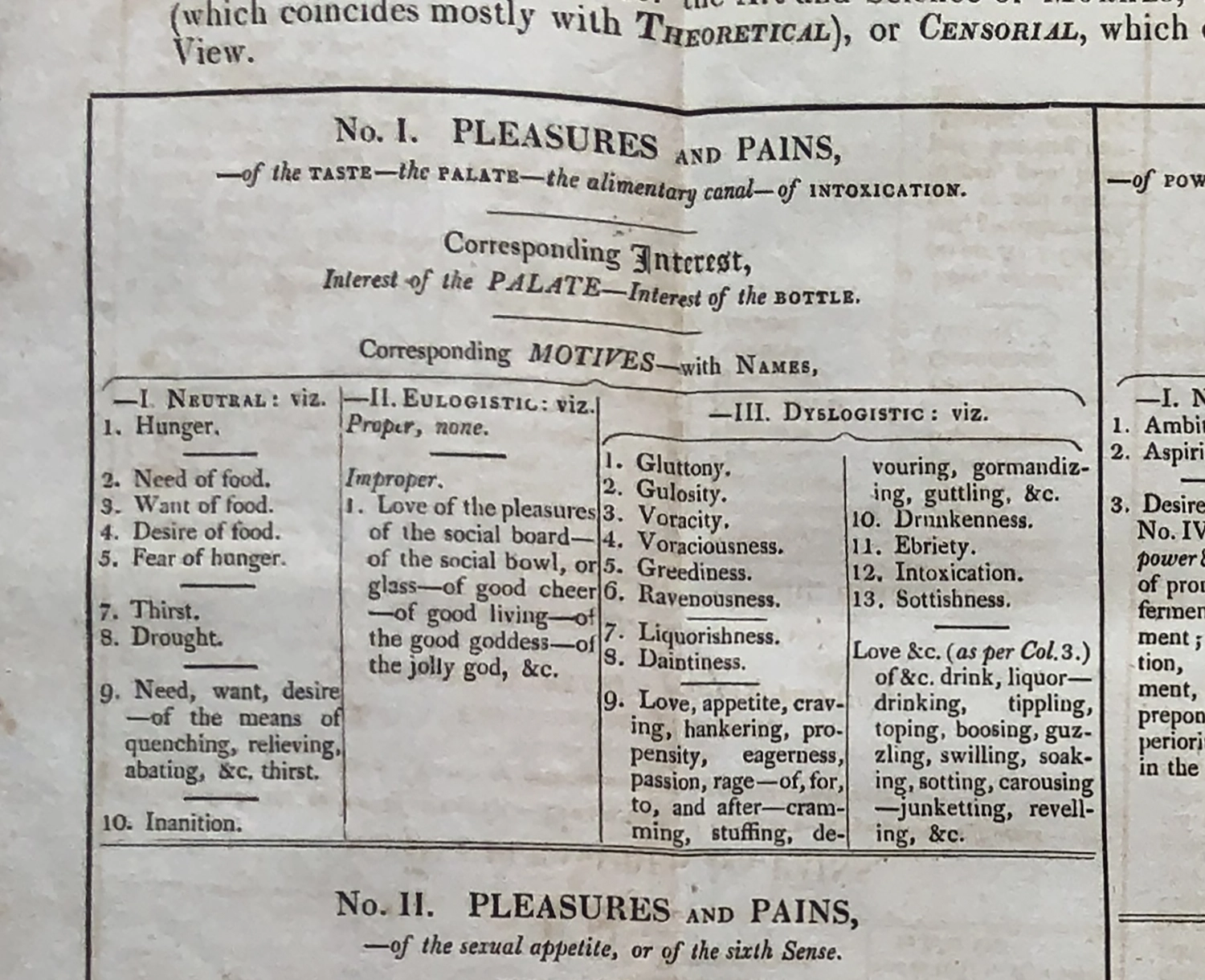

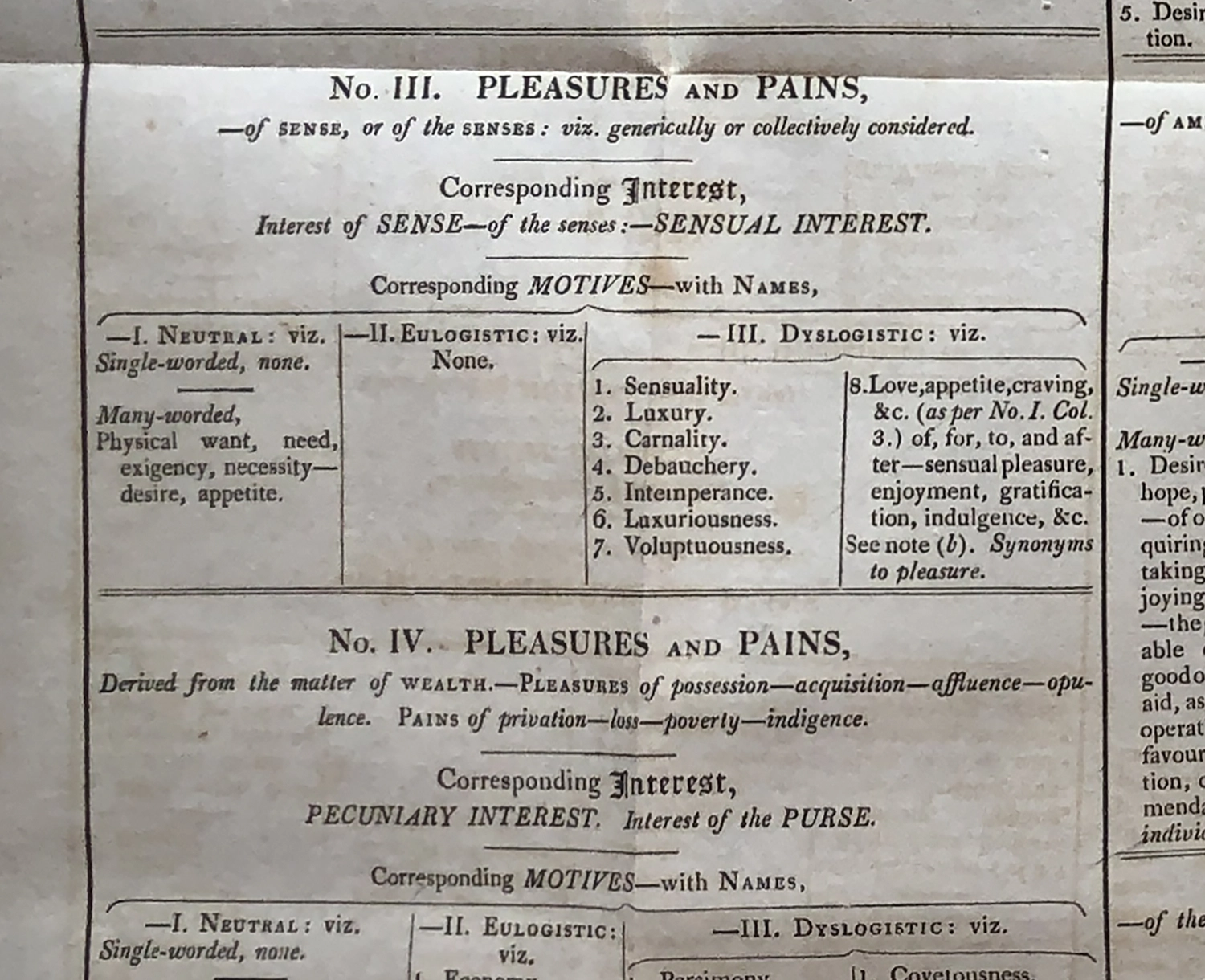

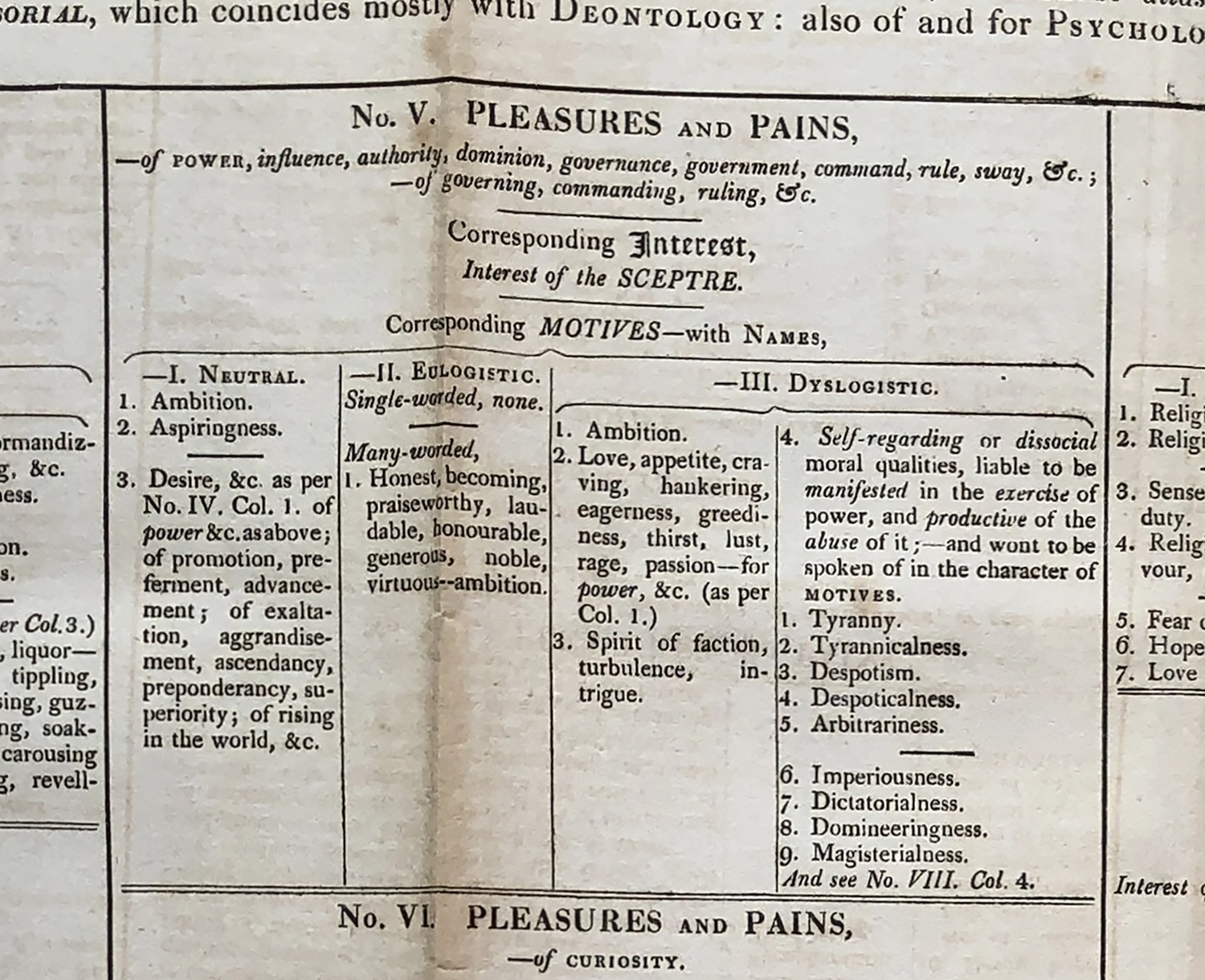

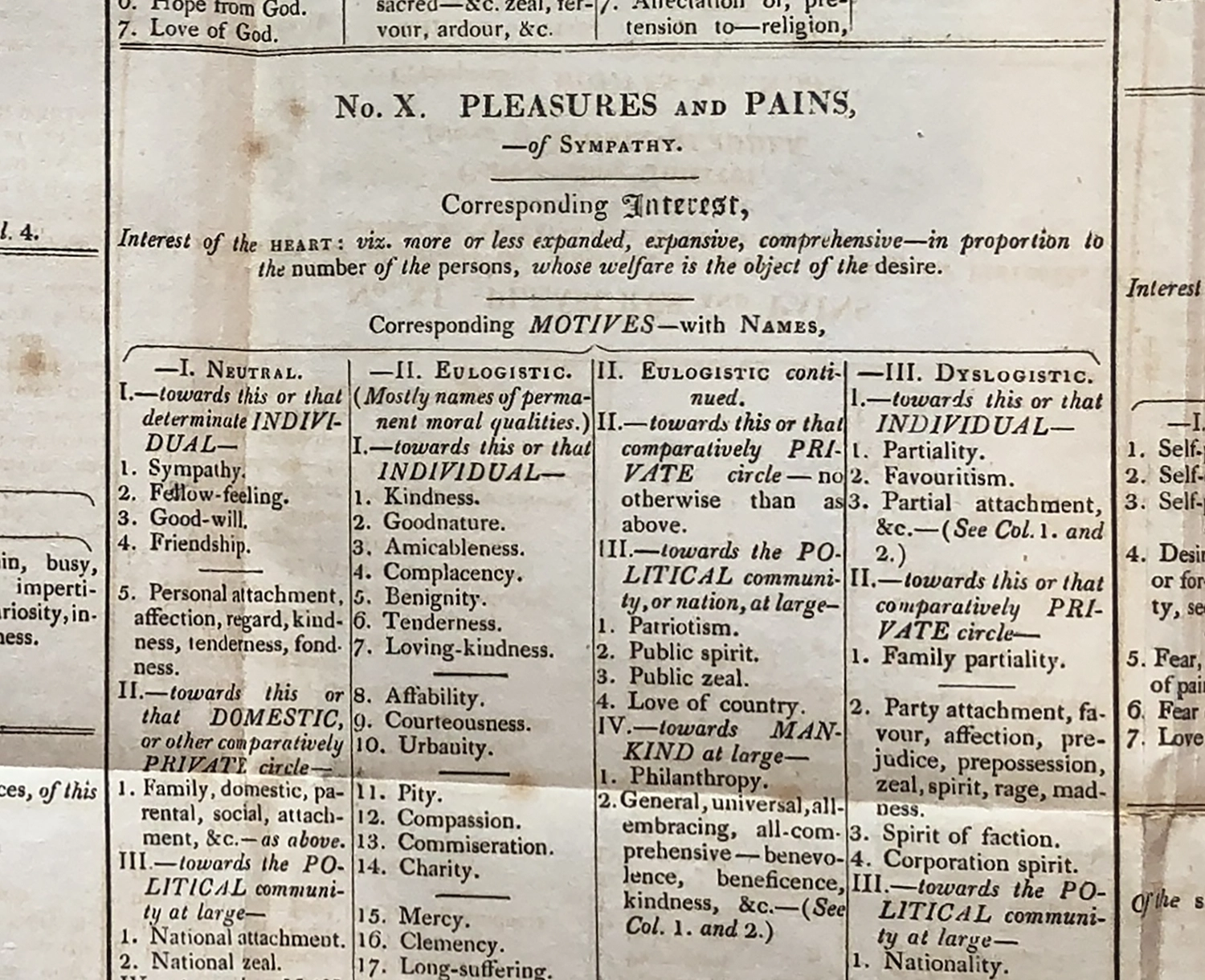

In the early 1800s, Bentham brought these ideas together in a large fold-out pamphlet entitled, rather wordily as was the custom, “TABLE of the SPRINGS of ACTION : Shewing the several Species of Pleasures and Pains, of which Man’s Nature is susceptible : together with the several Species of INTERESTS, DESIRES, and MOTIVES, respectively corresponding to them […].” 62

Jeremy Bentham’s A Table of the Springs of Action, 1817.


In this table, Bentham began developing in earnest what he called a “felicific calculus,” whereby everything that causes pleasure or pain could be assigned a positive or negative numerical value. Food, sex, and the fear of death are in the table, of course, but so is much else, including the hardship of labor and the pleasure of rest, the seeking of novelty, the joy of friendship, and the love of God, though plenty of religious impulses fall into the negative column—superstition, bigotry, fanaticism, sanctimoniousness, hypocrisy, and religious intolerance. More than a little of Bentham’s subjective judgment is in evidence here.

Regardless, his use of the phrase “Springs of Action” is a kind of pun. Most obviously, by “Springs” he means sources, as with water, or “wellsprings.” However, it’s also an allusion to the mechanical philosophy, which held that people themselves are nothing more than a kind of dynamical mechanism, their psychology driven by motive forces just as a clock’s gears are driven by its springs.

In the same way Newton’s “calculus of fluxions” allowed one to take the derivative of a particle’s observed trajectory to infer the net forces it must be experiencing, Bentham’s felicific calculus sought to derive, from a person’s observable behaviors, the “hedonic,” or pleasure-seeking, forces that shape their trajectory through life. Or, conversely, just as Leibniz’s version of calculus allowed one to integrate known forces to compute a trajectory, Bentham believed that an accurate accounting of hedonic forces, once we understood them, would enable prediction of a person’s actions. Thus, on this view, insofar as our behavior can be described as intelligent, intelligence itself is nothing more than value optimization.

How do morals, ethics, or governance fit into such a picture? For Bentham, given a felicific calculus, the answer is captured in the phrase that has become most associated with him: the greatest good for the greatest number. In other words, if people act in such a way as to optimize their pleasure, then the role of government is to ensure that the summed pleasure of all people is optimized. If, for instance, one person derives an amount of pleasure X by making a hundred others’ lives worse by amount Y, then this would be an immoral act, unless X is greater than 100Y. The correct role of government is thus to prevent such selfish negative-sum actions, while encouraging any actions that increase total happiness—even if they fail to increase every individual’s happiness. Bentham foreshadowed Hayek here, since if an individual’s intelligent behavior consisted of maximizing individual value, a government’s behavior could likewise be considered intelligent insofar as it maximized collective value.
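The summing rule in this example is simple enough to sketch in a few lines of Python. The function names and sample numbers below are illustrative assumptions, not anything Bentham specified; the sketch only shows that, once pleasures and pains are treated as numbers, the moral test reduces to checking the sign of a sum.

```python
def net_utility_change(gain_to_actor: float, loss_per_person: float,
                       people_harmed: int) -> float:
    """Summed change in utility when one actor gains X and each of N others loses Y."""
    return gain_to_actor - people_harmed * loss_per_person


def is_permissible(gain_to_actor: float, loss_per_person: float,
                   people_harmed: int = 100) -> bool:
    # Under the summing rule, an act is acceptable only if total utility rises,
    # that is, only if X > N * Y.
    return net_utility_change(gain_to_actor, loss_per_person, people_harmed) > 0


# Hypothetical numbers: the actor gains 50 units, a hundred others lose 1 unit each.
print(is_permissible(50, 1))   # the act lowers the total
print(is_permissible(150, 1))  # the act raises the total
```

Note that nothing in the rule cares who gains and who loses; only the total matters.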

Today, we call this philosophy “Utilitarianism,” and use the word “utility” to denote the good (when positive) or the bad (when negative). Under certain assumptions, including perfect information and fully rational actors, a free market will maximize utility.

This all sounds pretty good—certainly better than rule by force, disregard for the welfare of entire classes of people, or arbitrary moral codes based on superstition. Understandably, given that we’re still not free of these historical blights, Utilitarianism continues to attract devotees. Effective Altruists are among the most hard-core.

However, Utilitarianism, quite literally, doesn’t add up. Psychological studies show that human preferences don’t always obey the “transitive law,” wherein if the utilities of X, Y, and Z can all be expressed as numerical values, and someone prefers Y over X, and Z over Y, then they have to prefer Z over X too. Otherwise, there’s a logical contradiction.

The moment pioneering behavioral economist Amos Tversky showed, in 1969, that people can sometimes prefer X over Z, 63 he exploded the foundations of Utilitarianism as a way to describe people’s behavior. This turned what Bentham had posited as a law of human nature into, at best, a “should” rather than an “is” claim. 64
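To see why a single violation of transitivity is so damaging, it can help to try assigning numbers to a set of stated preferences. The sketch below is an illustration under my own assumptions (the function name `assign_utilities` and the option labels are hypothetical); it uses Python's standard `graphlib` module to rank options so that every preferred option gets a higher number, and it gives up when the preferences form a cycle.

```python
from graphlib import TopologicalSorter, CycleError


def assign_utilities(strict_preferences):
    """Try to give each option a number such that, for every stated pair
    (better, worse), utility[better] > utility[worse].

    Returns a dict of utilities, or None if the preferences contain a cycle
    and therefore cannot be represented by any numerical scale.
    """
    sorter = TopologicalSorter()
    for better, worse in strict_preferences:
        # 'worse' is registered as a predecessor, so it is ranked first.
        sorter.add(better, worse)
    try:
        order = list(sorter.static_order())  # worst option comes first
    except CycleError:
        return None
    return {option: rank for rank, option in enumerate(order)}


# Transitive preferences: Y over X, Z over Y, and Z over X.
print(assign_utilities([("Y", "X"), ("Z", "Y"), ("Z", "X")]))

# Intransitive preferences: Y over X, Z over Y, yet X over Z.
print(assign_utilities([("Y", "X"), ("Z", "Y"), ("X", "Z")]))
```

In the second case no assignment exists, because it would require a number for X that is both smaller and larger than the number for Z.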

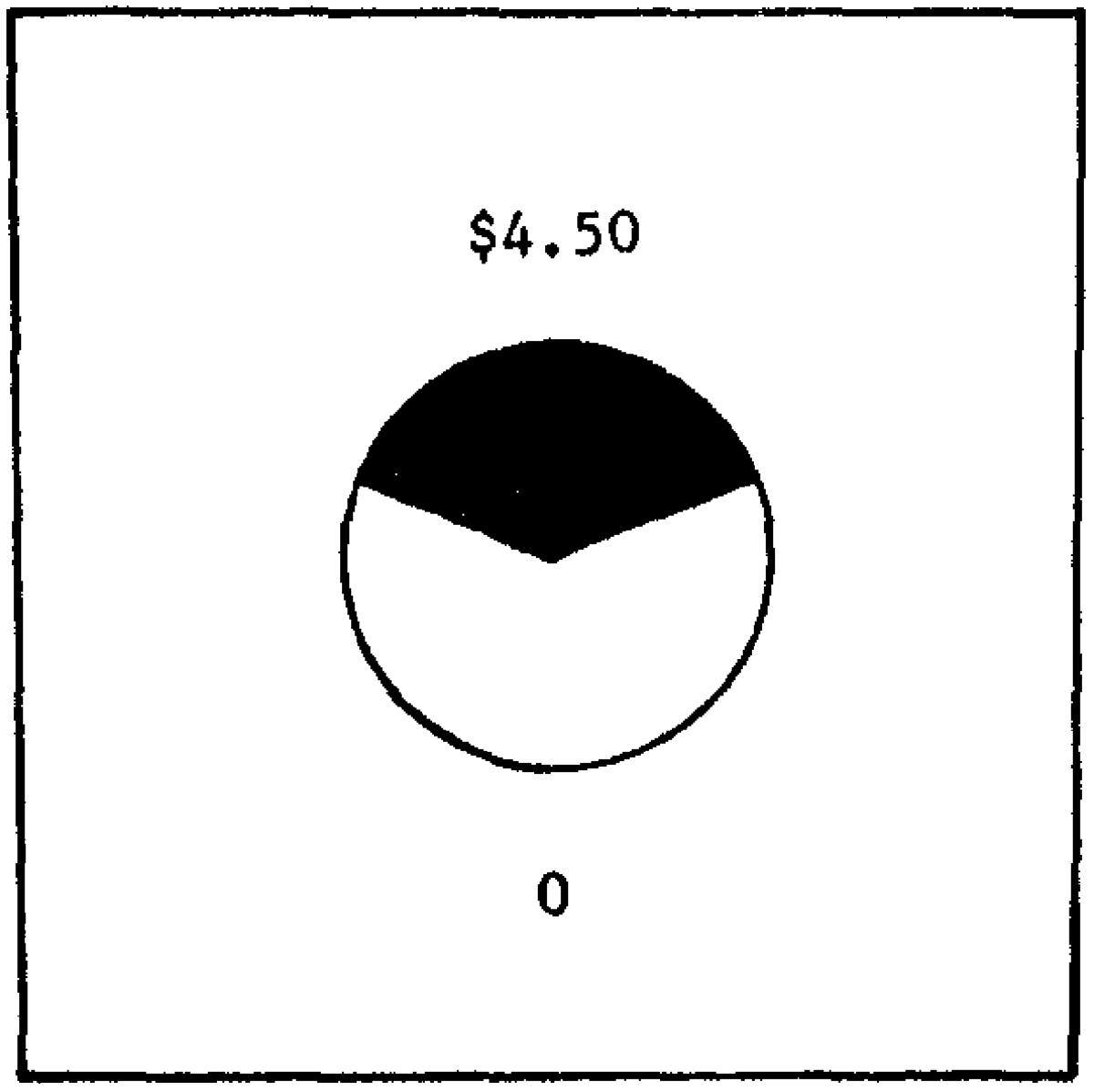

For example, one of Tversky’s experiments involved forcing subjects to choose between “gambles” in which a simple roulette-like wheel would be spun, with a specified payoff between $4 and $5 if the spinner stopped within a black wedge. As the payoff increased, the size of the wedge decreased a little faster, making the expected payoff go down. But because people were more easily able to evaluate the payoff than the probability, they generally chose the larger payoff, thus making the “wrong” choice. When choosing between the extremes, though, they reverted to the “right” choice.

“Gamble card” used to test the intransitivity of people’s preferences; Tversky 1969.
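The logic of the gamble experiment can be sketched in code. The probabilities and payoffs below are illustrative values chosen to fit the description above (payoffs from $4 to $5, with the winning wedge shrinking faster than the payoff grows); they are assumptions, not figures quoted here from the study. The decision rule `choose`, with its `threshold` parameter, is likewise a hypothetical model of a subject who ignores small differences in probability.

```python
from fractions import Fraction

# Assumed gambles: name -> (probability of winning, payoff in dollars).
GAMBLES = {
    "a": (Fraction(7, 24), 5.00),
    "b": (Fraction(8, 24), 4.75),
    "c": (Fraction(9, 24), 4.50),
    "d": (Fraction(10, 24), 4.25),
    "e": (Fraction(11, 24), 4.00),
}


def expected_payoff(name: str) -> float:
    probability, payoff = GAMBLES[name]
    return float(probability) * payoff


def choose(first: str, second: str, threshold=Fraction(1, 12)) -> str:
    """Hypothetical rule: if the wedges look about the same size, pick the
    bigger payoff; otherwise pick the bigger chance of winning."""
    p1, pay1 = GAMBLES[first]
    p2, pay2 = GAMBLES[second]
    if abs(p1 - p2) <= threshold:
        return first if pay1 > pay2 else second
    return first if p1 > p2 else second


# Neighboring gambles: the larger payoff wins, despite its lower expected value.
for x, y in [("a", "b"), ("b", "c"), ("c", "d"), ("d", "e")]:
    print(x, "vs", y, "->", choose(x, y))

# The extremes: the difference in probability is now noticeable.
print("a vs e ->", choose("a", "e"))
```

Under these assumptions the chooser prefers a to b, b to c, c to d, and d to e, yet prefers e to a, which is exactly the kind of cycle that no numerical utility can represent.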

Tversky compared these results to a familiar real-life example of intransitivity. A prospective car buyer is initially inclined to “buy the simplest model for $2,089.” (Ah, car prices in 1969.) “Nevertheless, when the salesman presents the optional accessories, he first decides to add power steering, which brings the price to $2,167, feeling that the price difference is relatively negligible. Then, following the same reasoning, he is willing to add $47 for a good car radio, and then an additional $64 for power brakes. By repeating this process several times, our consumer ends up with a $2,593 car, equipped with all the available accessories. At this point, however, he may prefer the simplest car over the fancy one, realizing that he is not willing to spend $504 for all the added features, although each one of them alone seemed worth purchasing.”

While dedicated Utilitarians today recognize that pretty much everyone is “irrational,” they seek, in their own actions, to be Utilitarian—hence, rational—and obey the transitive law in their preferences even when it leads to horrors or absurdities. Fully embracing Utilitarianism as a moral position implies welcoming a cost-benefit analysis of any proposition, no matter how counterintuitive or repugnant. For whose benefit, though? If one is not, in one’s heart of hearts, really “rational,” and neither is anybody else, then it’s difficult to embrace the prescription while rejecting the description.

The trouble with utility as a descriptive theory isn’t limited to Tversky’s intransitivity of preferences. “Additivity,” the idea that utility adds up the way numbers should, also poses a serious problem. For example, in one classic series of experiments, patients were told to move a pain dial, numbered zero (no pain) to ten (maximum agony), during a colonoscopy conducted while they were fully conscious. 65 Half of the patients (apologies, I promise there’s a reason I’m going here) “had a short interval added to the end of their procedure during which the tip of the colonoscope remained in the rectum.” This added interval was still not good, but it was less uncomfortable than what had preceded it. Curiously, these patients found the whole procedure less aversive than those for whom the colonoscopy ended more abruptly. Not only was their overall rating of the experience better in retrospect, but they ranked it more favorably among standardized lists of other aversive experiences, and even had higher rates of follow-up colonoscopies years later (though the effect was small).

The researchers viewed these findings as “memory failures,” highlighting the way they had internalized Utilitarian assumptions. If pain is supposed to add up, with X the pain involved in the main procedure and Y the pain involved in the extra time when the probe is left in, then surely X+Y must be greater than X! 66 Yet that isn’t how we behave. There are many more research findings in this vein.
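A small numerical sketch makes the conflict with additivity concrete. The dial readings below are invented for illustration, and the second scoring function is an assumption on my part: it implements the “peak-end” heuristic often used to summarize findings of this kind, in which a remembered experience is scored by the average of its worst moment and its final moment rather than by its sum.

```python
def total_pain(trace):
    """Additive accounting: every moment of pain counts toward the total."""
    return sum(trace)


def peak_end_score(trace):
    """Assumed alternative: average of the worst reading and the last reading."""
    return (max(trace) + trace[-1]) / 2


# Hypothetical dial readings (0 = no pain, 10 = maximum agony), one per interval.
standard = [2, 5, 8, 8]        # the procedure ends abruptly at a painful moment
extended = standard + [4, 3]   # the same procedure plus a milder added interval

print(total_pain(standard), total_pain(extended))
print(peak_end_score(standard), peak_end_score(extended))
```

The extended trace has more total pain (X+Y is indeed greater than X), yet it scores as less aversive under the peak-end measure, which is the direction the patients' own judgments went.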

Problems involving transitivity and additivity can’t be addressed by fiddling with the values in a “Springs of Action” table; no change in the felicific calculation will match what people actually do. Since spending money (assuming you have a finite amount of it) represents a similar series of tradeoffs regarding which actions you take, it shouldn’t be surprising to find that we’re not “rational” economic actors either. When we exchange money, we’re not in general passing around happiness, or any kind of proxy for it.

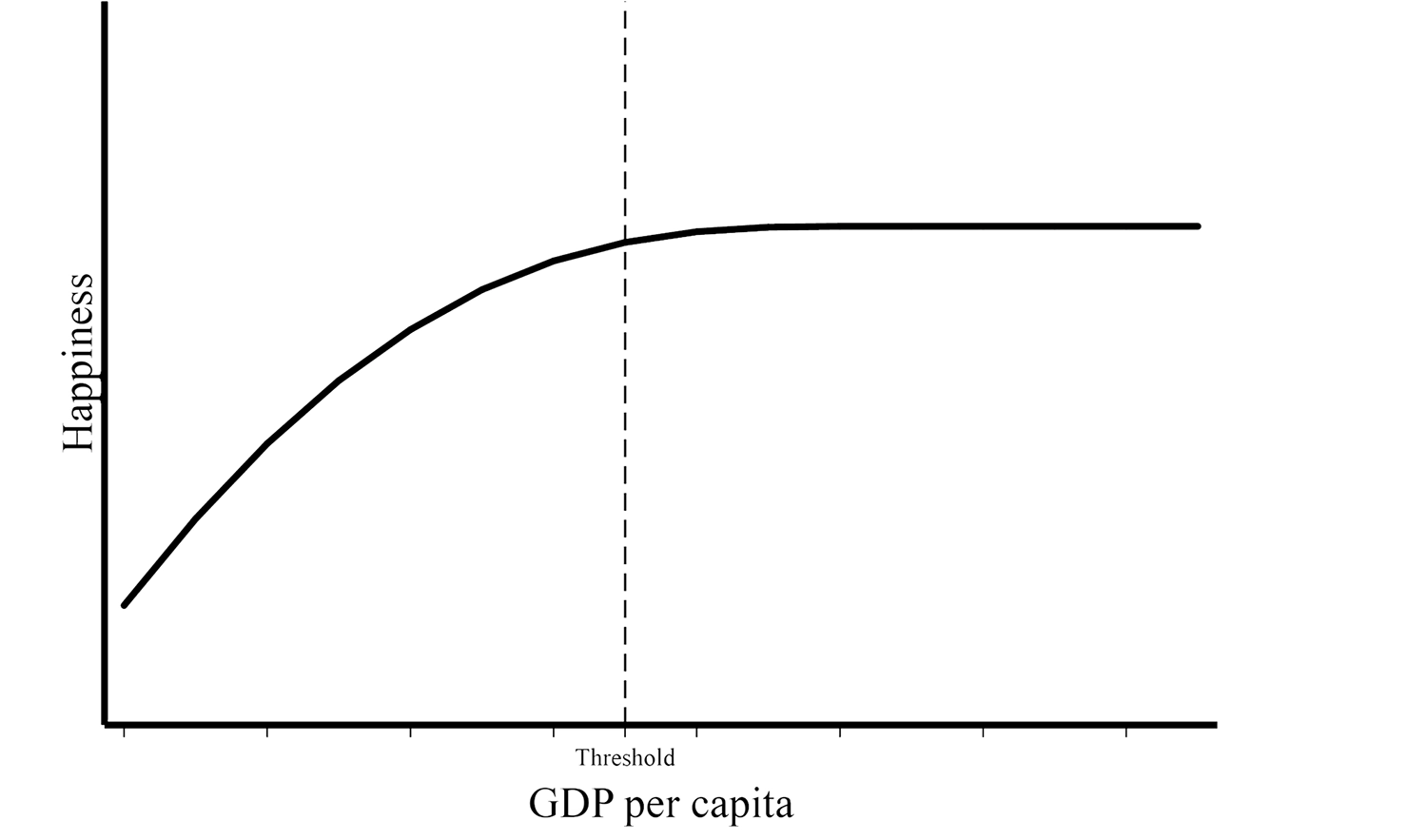

Of course, this doesn’t imply that happiness and money are unrelated. As our space of available actions shrinks due to poverty, most of us do experience negative feelings about it, both because we’re prevented from doing things we want to do and because having all of our choices taken away—being cornered—feels bad in its own right, for reasons discussed in chapter 5. 67 Going hungry, or being exposed to the elements, feels bad too. And we care quite a bit about social standing relative to our peers. So, there is a rough correlation between wealth and happiness, especially at the poor end of the scale, but the relationship is by no means straightforward. 68

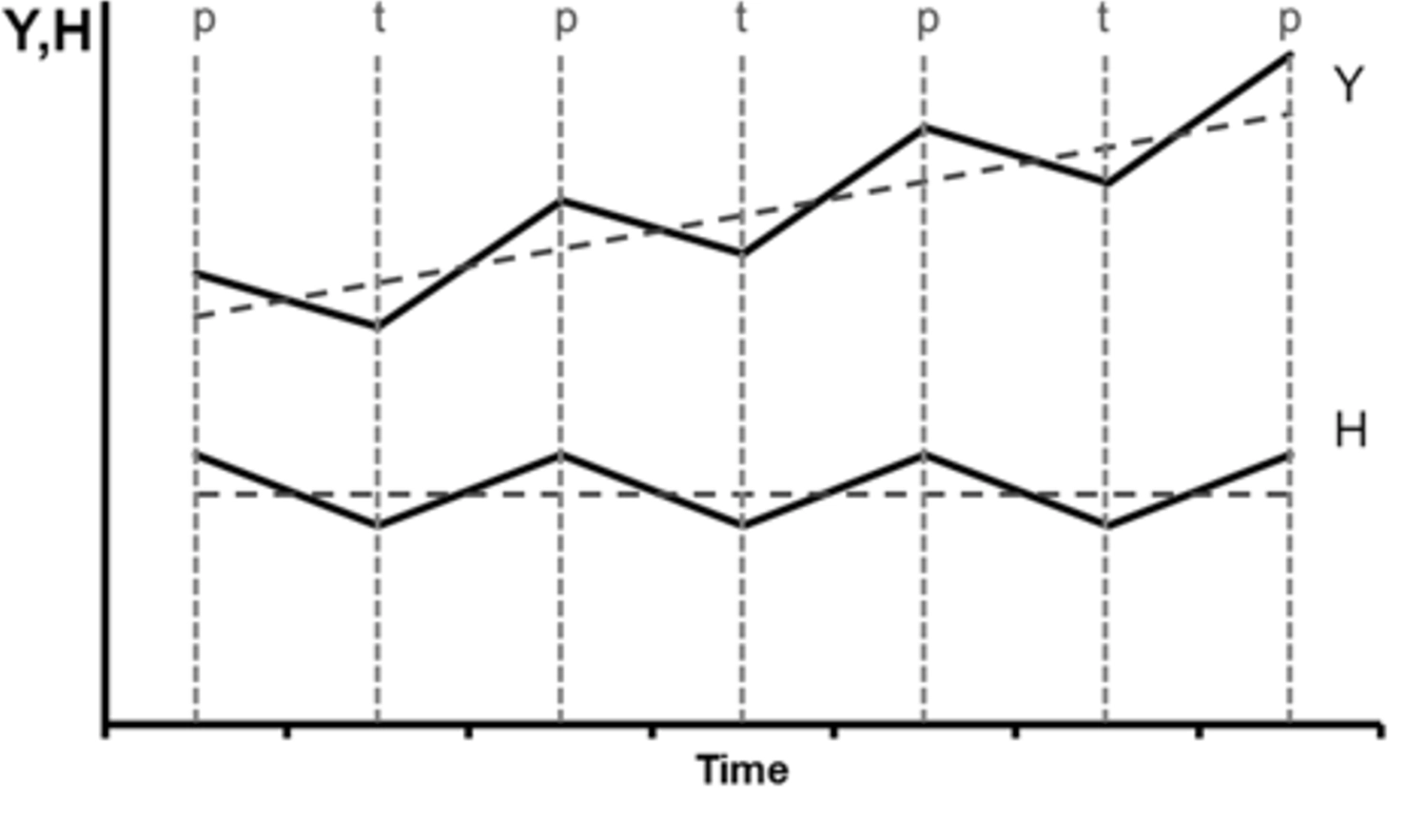

Two key results in the study of correlations between income and happiness: 1) in the short term, changes in income Y are correlated with changes in happiness H, but these correlations don’t persist in the longer term, since happiness is “adaptive”; and 2) there is a threshold above which changes in real GDP per capita have negligible effect on happiness; Easterlin and O'Connor 2021.


While most of us wish we had more money, generally, we don’t carry out our day-to-day activities to increase either our wealth or any other obvious quantity. The closest thing to an exception would be people who work in finance and are obsessive about their score at that game; they live to play it, just as Lee Sedol, prior to his retirement in 2019, lived to play Go. As you might imagine, Utilitarian thinking is especially popular on Wall Street, where many believe that to be smart is to be rich, and vice versa.

Big Tent

Utilitarianism is far from a purely right-wing position. Some staunch adherents, most famously the philosopher Peter Singer, extend their felicific calculus to nonhuman species. Singer is mostly vegan, since he cares about animal suffering as well as human suffering. He popularized the term “speciesism” to decry those who ignore the suffering of nonhumans, although flattening distinctions between species creates some challenges of its own. 69

We do indeed have to acknowledge that the network of relationships we care about includes nonhuman actors, whether we like it or not, but that doesn’t mean those actors are all equal. They come in all sizes and shapes, and this very fact makes universal participation in any single economy or felicific calculation impossible. One can’t ignore one’s own place in it, either.

If the graph of relationships were finite and “flat,” containing only a hundred villagers who seek to trade handicrafts and vegetables with each other, then money might work pretty well for optimizing the flow of resources, though it still wouldn’t be a good proxy for happiness. Likewise, deliberation and voting might work pretty well for coordinating collective action.

However, there is no universal currency, and no “view from nowhere.” When the graph includes not only human beings, but also single cells, tree frogs, corporations, banana plants, jumping genes, jumping spiders, trade unions, nations, rivers, and burial grounds, it becomes hard to understand how these interacting entities are all supposed to make decisions, pay each other for stuff, and be held accountable for debts or obligations using anything like an economic model.

For instance, if we were to put an economic price on air, whom should we pay? Presumably, in no small part, the Prochlorococcus cyanobacteria that inhabit the Earth’s oceans and synthesize a good deal of the oxygen we breathe. Do we mint NFTs for them? Issue them with voting shares in AirCorp? Are they even distinct, or more like a superorganism?

Suppose humans were to make the ill-advised decision to “enclose” both the oceans and all of these smaller entities to enable universal stewardship—and rent-seeking—by legal persons (which today include people, corporations, and nations). Then, the problem of taxonomizing these “assets,” weighting “voting interests,” and tracking “value flows” would look like a combination of solving GOFAI and simulating the whole planet—all for the purpose of bean counting, in a world that seemed to be getting along just fine before we decided it could be improved with a Spreadsheet of All That Is. 70

Everything we today consider labor or capital, value or worth, joy or suffering would be a drop in the bucket alongside that multifractal leviathan, the Earth. Our planet, with all its interlinked systems that have evolved over four and a half billion years, contains a zillion interacting entities experiencing a vast array of pleasures and pains from moment to moment in the service of multilevel dynamic stability. The hydrologic cycle provides the fresh water; humans need only transport it. Plants grow the bananas; human labor merely involves picking them. Our meat comes from self-reproducing ruminants and self-growing grass; we put a fence around them and call them “property,” either privatized or collectivized. We get to pretend we’re the producers of value, and play our economic games, whether communist, capitalist, or libertarian, only by the grace of Gaia.

But if we peer inside our planetary superorganism, or Prochlorococcus, or ourselves, we won’t find anything resembling a single value being maximized. This is just a restatement, yet again, of Patricia Churchland’s insightful critique of AlphaGo: outside the self-contained toy world of a game, purposive entities have “competing values and competing opportunities, as well as trade-offs and priorities.” There is no cumulative score, and no goal, other than to keep playing.

As we’ve seen, when we try to impose a score on this infinite game—insisting that, for every player, each move comes with a quantifiable cost or benefit that can be tracked over time—we run into mathematical trouble, regardless of how those costs and benefits are computed.